Can [AI] be the all in one Assistant?

I started with Streamlit by building some sample apps for GPX route tracking.

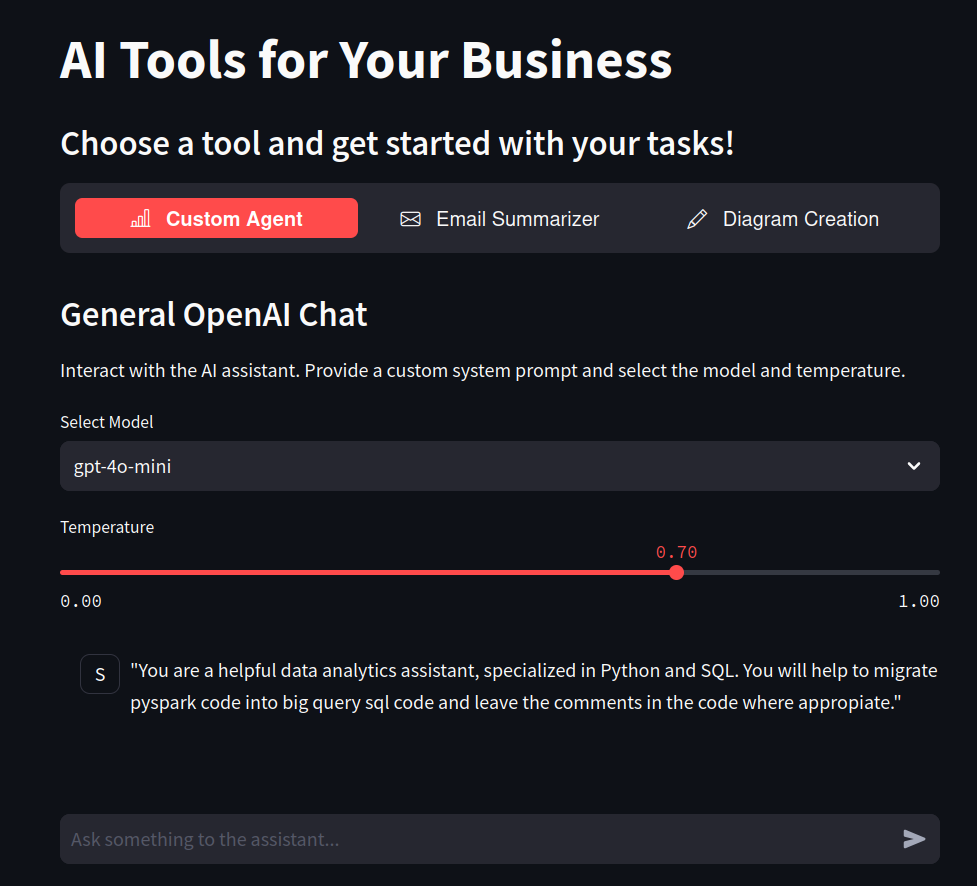

But this year I have been testing more features with the Streamlit MultiChat Summary.

Anyways, it was time to level up my Streamlit, again.

And why not do it while building some business-value-driven projects?

Looking at the Streamlit Roadmap, more ideas will pop up.

It's a Streamlit web app!

- Z_ST_AIssistant_v2.py

- .env

- OpenAI_MDSummarizer_v1.py

- OpenAI_email.py

- OpenAI_Neuromkt.py

- Z_OpenAI_GeneralChatv1.py

- OpenAI_mermaid_v2c.py

- OpenAI_plotly_v1b.py

- prompts_mdsummarizer.md

- prompts_email.md

- prompts_neuromkt.md

- FAQ_MDSummarizer.md

- FAQ_Email.md

- FAQ_MermaidJS.md

- Streamlit_OpenAI.py

I am trying to keep it modular.

In the agents section, I tried to pair each LLM prompt with its feature.

Through the FAQs, each module can have its own section attached as a markdown file.

Thanks to the investigations during

Let it be leverage

For Entrepreneurs

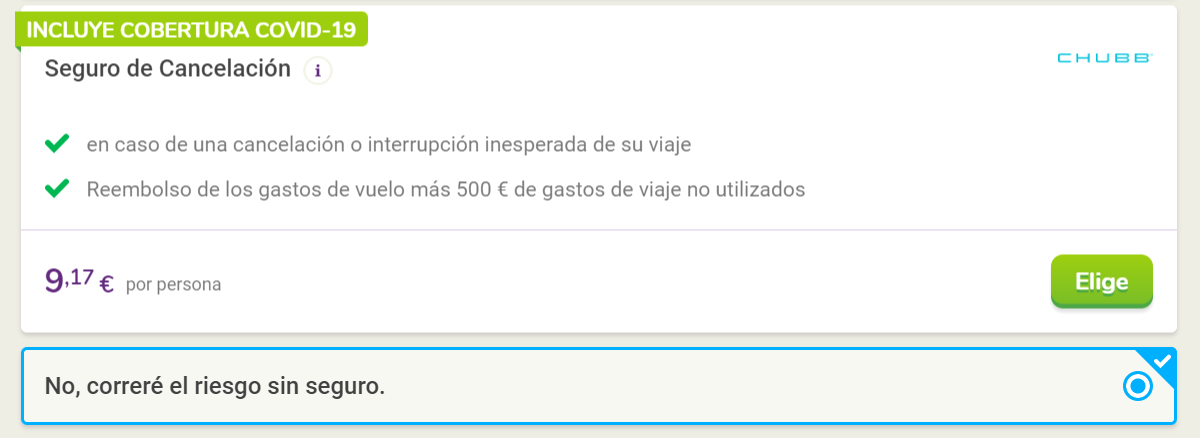

A Neuromarketing Agent

It is hard to be a solo-preneur…

unless you have some help.

Like the help of EntrepreAI

CTA examples that I've found 📌

- Do you want premium? No, I'll take the risk!

- You don’t have to miss out

- They all laugh when…

- What sounds better than privacy?

- Is your Business performing at 100%? Here is the best/easiest way to find out

- Measure, Check, Act

Many of these were just tests during Streamlit-Multichat a few months ago.

Automatic Blog Creation

Already using an SSG for your websites?

If an LLM can write markdown…

why not use it to create AI-assisted posts?

Youtube Stuff

There are great videos out there.

But they are hidden among…too much fluff…

YT Summarizer and More

But the PhiData team put together an awesome idea that I decided to fork and bundle automatically in a container for further reach…

…a YouTube AI summarization tool which uses Groq for very fast inference.

I explained the process in this blog post

And now I have been testing the Groq API a little more.

Of course there are new models: Llama 3.1 and Llama 3.2

GroqAPI Tinkering - Available LLMs and more 📌

With this code you can see all the models available through the Groq API.

And with this sample Streamlit app, you can query each available model.

I was testing how each of them performs on sample questions.
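As a rough sketch of what such a listing can look like (not the original script): the official `groq` Python client exposes `client.models.list()`, and the helper name `list_groq_models` is made up for illustration. It assumes `pip install groq` and a `GROQ_API_KEY` in the environment.

```python
def list_groq_models(client):
    """Return the ids of the models the Groq API reports, sorted by name."""
    response = client.models.list()
    return sorted(model.id for model in response.data)


# Usage, assuming `pip install groq` and GROQ_API_KEY exported in the shell:
#   from groq import Groq
#   for model_id in list_groq_models(Groq()):
#       print(model_id)
```

Passing the client in as an argument keeps the helper easy to try with a stub before spending any API calls.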

docker pull ghcr.io/jalcocert/phidata:yt-groq #https://github.com/users/JAlcocerT/packages/container/package/phidata

Deploy the PhiData YouTube Summarizer together with Streamlit MultiChat 📌

I called this my z_aigen stack in Portainer:

- The Container Image

- This is my AI-Gen docker Compose

#version: '3.8'
services:
  phidata_service:
    image: ghcr.io/jalcocert/phidata:yt-groq #phidata:yt_summary_groq
    container_name: phidata_yt_groq
    ports:
      - "8502:8501"
    environment:
      # no quotes around the value: in list form they would become part of it
      - GROQ_API_KEY=gsk_dummy-groq-api # your_api_key_here
    command: streamlit run cookbook/llms/groq/video_summary/app.py
    restart: always
    networks:
      - cloudflare_tunnel

  streamlit_multichat:
    image: ghcr.io/jalcocert/streamlit-multichat:latest
    container_name: streamlit_multichat
    volumes:
      - ai_streamlit_multichat:/app
    working_dir: /app
    # inner double quotes are escaped so the TOML values stay quoted
    command: /bin/sh -c "\
      mkdir -p /app/.streamlit && \
      echo 'OPENAI_API_KEY = \"sk-dummy-openai-key\"' > /app/.streamlit/secrets.toml && \
      echo 'GROQ_API_KEY = \"dummy-groq-key\"' >> /app/.streamlit/secrets.toml && \
      echo 'ANTHROPIC_API_KEY = \"sk-dummy-anthropic-key\"' >> /app/.streamlit/secrets.toml && \
      streamlit run Z_multichat_Auth.py"
    ports:
      - "8501:8501"
    networks:
      - cloudflare_tunnel
    restart: always
    # - nginx_default

  ollama:
    image: ollama/ollama
    container_name: ollama
    ports:
      - "11434:11434" #Ollama API
    volumes:
      #- ollama_data:/root/.ollama
      - /home/Docker/AI/Ollama:/root/.ollama
    networks:
      - ollama_network

  ollama-webui:
    image: ghcr.io/ollama-webui/ollama-webui:main
    container_name: ollama-webui
    ports:
      - "3000:8080" # 3000 is the UI port
    environment:
      - OLLAMA_BASE_URL=http://192.168.3.200:11434
    # add-host:
    #   - "host.docker.internal:host-gateway"
    volumes:
      - /home/Docker/AI/OllamaWebUI:/app/backend/data
    restart: always
    networks:
      - ollama_network

networks:
  cloudflare_tunnel:
    external: true
  ollama_network:
    external: false
  # nginx_default:
  #   external: true

volumes:
  ai_streamlit_multichat:

But that is old school already.

I have created an improved agent that allows us not just to summarize the YT Video - but to chat with a YouTube video content.

And it can be used via the groq API too.

More about GroqAPI 📌

- https://console.groq.com/docs/examples

- https://github.com/groq/groq-api-cookbook

- https://console.groq.com/playground

It has speech capabilities like transcription and translation!

And ofc, vision, with Llama 3.2 Vision at this moment.

- Cool sample: ask questions with Groq + Function Calling + DuckDB SQL queries - Example

You can also check this project - yt2doc - for YT to markdown transcription

Creating Audio for YT

Imagine that you have recorded some procedure that you want to share with the rest of the world.

But you want to automate the process of explaining it.

How about using AI to generate a .mp3 that will be explaining the video for you?

And actually…that text could also be AI generated. So could the YouTube video description.

Initially, I was using these simple scripts.

But these are much better…

Oh, and it uses OpenAI Text2Speech
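A minimal sketch of that text-to-mp3 step, assuming the `openai` Python package (v1 or later) and its `audio.speech.create` endpoint. The function name `narrate_to_mp3`, the `tts-1` model and the `alloy` voice are illustrative choices, not necessarily what the scripts mentioned above use.

```python
from pathlib import Path


def narrate_to_mp3(client, text, out_path="narration.mp3", voice="alloy"):
    """Turn `text` into speech with OpenAI TTS and save it as an .mp3 file."""
    response = client.audio.speech.create(model="tts-1", voice=voice, input=text)
    path = Path(out_path)
    path.write_bytes(response.read())  # raw audio bytes (mp3 is the default format)
    return path


# Usage, assuming OPENAI_API_KEY is set:
#   from openai import OpenAI
#   narrate_to_mp3(OpenAI(), "In this video I show how to deploy the app.")
```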

You can also have realistic AI audio (TTS) generated with ElevenLabs or…local real-time voice AI with Ichigo

Video as a Code

Is it even possible to create a full .mp4 with AI?

In the meantime, I will stay with real life with the DJI Action cam and my repo for putting the videos together

Work Companion

Slides Creation Agent

There are really cool infographics out there.

Like infograpia.com or others

But it is better to have a PPT than nothing at all.

Well, but this won't be just “a powerpoint”. They can be sleek. And done with code.

Presentations as code nowadays means AI-driven PPTs.

SliDev is an awesome project to generate PPT as code - leveraging VueJS.

I love the SliDev project so much, that I forked it.

- OpenAI PPT with Slidev

- SliDev PPT for Streamlit Multichat - Deployed here using GH Actions

Diagrams with AI

Because MermaidJS is too cool not to use it with AI as well.

You can use those diagrams with SSG (like HUGO, Astro…) and also for Slides creation with SliDev.

Interesting MermaidJS Features:

- Make it look handDrawn

- Markdown Strings

- Support for Font Awesome icons

flowchart TD

B["Mermaid with fa:fa-robot "]

B-->C["`The **cat**

in the hat - fa:fa-ban forbidden`"]

B-->D(fa:fa-spinner loading)

B-->E(A fa:fa-camera-retro perhaps?)

Ticket Creation with AI

Email with AI

CV with AI

It all started with CV with OpenAI

Thanks to this app I have been able to level up my Streamlit with many new features.

And there are people already making money with similar tools:

Speech Rater with AI

It all started with a friend doing public speaking courses.

Then, OpenAI made it easy to do T2S and S2T (Transcription).

And this idea appeared…

Which I will cover on a future post.

And since OpenAI can transcribe (Audio to Text)…

Using the Whisper model with OpenAI to have Audio2Text 📌

And text can be summarized or asked questions about…

Then just send a regular query to an LLM via: OpenAI/Groq/Anthropic/Ollama… 📌
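The two steps can be sketched as one helper, assuming the `openai` Python package (v1 or later). `transcribe_and_ask`, the system prompt and the `gpt-4o-mini` default are placeholders for illustration; `whisper-1` is the hosted Whisper model name.

```python
def transcribe_and_ask(client, audio_path, question, chat_model="gpt-4o-mini"):
    """Transcribe an audio file, then ask an LLM a question about the transcript."""
    with open(audio_path, "rb") as audio_file:
        transcript = client.audio.transcriptions.create(
            model="whisper-1", file=audio_file
        )
    completion = client.chat.completions.create(
        model=chat_model,
        messages=[
            {"role": "system", "content": "You give feedback on speech transcripts."},
            {
                "role": "user",
                "content": f"Transcript:\n{transcript.text}\n\nQuestion: {question}",
            },
        ],
    )
    return transcript.text, completion.choices[0].message.content


# Usage, assuming OPENAI_API_KEY is set:
#   from openai import OpenAI
#   text, feedback = transcribe_and_ask(OpenAI(), "speech.wav", "How can I improve?")
```

In a Streamlit app, the recording could come from the `st.audio_input` widget instead of a file on disk.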

flowchart TD

A[User opens Streamlit app] --> B[User records audio]

B --> C[Audio sent to Whisper model for transcription]

C --> D[Transcribed text]

D --> E[Text sent to OpenAI GPT LLM]

E --> F[Generated response from GPT]

F --> G[User engages in live chat to improve speech]

Conclusions

Potentially, you can choose any other LLM via API to reply to you.

It's all about making the proper API calls to each of them.

I have chosen the OpenAI API as the default for the project.

But you have sample API calls to other models (via APIs) in the Streamlit Multichat project.

Making Streamlit Better

Better Docker-Compose for Streamlit Apps 📌

For passing important variables (like API keys)

- We could do it via the .streamlit/secrets.toml file, with this kind of command in the docker compose

- But they can also be passed as environment variables, with this kind of docker-compose
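On the app side, both options can be supported with one small lookup. This is a sketch under assumptions: the helper name `get_api_key` is invented here, and the environment variable is checked before the secrets mapping so a container that only sets environment variables never touches `secrets.toml`.

```python
import os


def get_api_key(name, secrets=None):
    """Look up `name` in the environment first, then in a secrets mapping."""
    value = os.environ.get(name)
    if value:
        return value
    if secrets is not None and name in secrets:
        return secrets[name]
    raise KeyError(f"{name} not found in environment or secrets")


# Inside a Streamlit app the mapping would be st.secrets:
#   import streamlit as st
#   openai_key = get_api_key("OPENAI_API_KEY", st.secrets)
```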

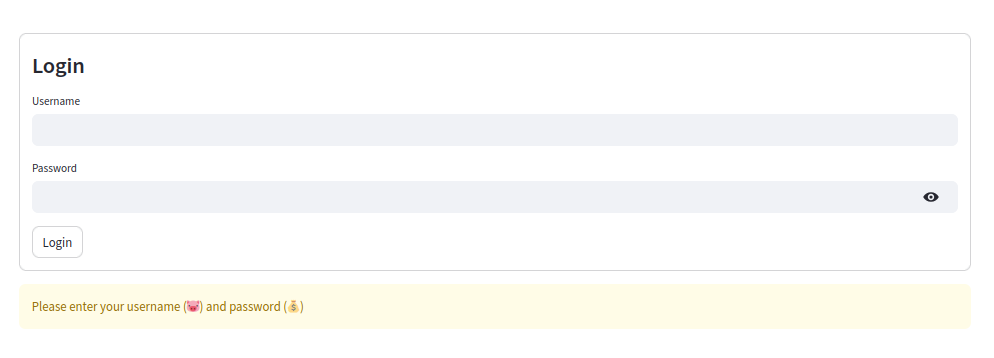

User Authentication

There will be some diagrams here, with Mermaid ofc.

Simple User/Pass Challenge for Streamlit 👇

Pros

- It just requires a single additional file to bring auth to the app

- Completely free, with no dependencies on 3rd parties

Cons

- Hardcoded values (~okayish for a PoC)

- Example in Streamlit-Multichat - a very simple one that allows certain user/passwords to access an app

Thanks to Naashonomics and the code
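To make the idea concrete, here is a minimal sketch of a hardcoded credential check. It is not the code credited above: `USERS`, `check_credentials` and the demo values are hypothetical, and plain-text passwords in source are only acceptable for a PoC.

```python
import hmac

# Hardcoded demo credentials, fine for a PoC only (hypothetical values).
USERS = {"demo": "demo-password"}


def check_credentials(username, password, users=USERS):
    """Return True when the username exists and the password matches."""
    expected = users.get(username)
    if expected is None:
        return False
    # compare_digest avoids leaking information through comparison timing
    return hmac.compare_digest(password.encode(), expected.encode())
```

In Streamlit, the result would typically be stored in `st.session_state` so the login prompt is skipped on reruns.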

flowchart TD

Start([User Arrives at App 🙊]) --> LoginPrompt{User enters

username & password}

LoginPrompt -->|Input credentials| AuthCheck["Check credentials

against Auth_functions.py"]

AuthCheck -->|Valid credentials| Welcome["Display Welcome Message

and access to the app"]

AuthCheck -->|Invalid credentials| LoginPrompt

Welcome -->|Logout| LoginPrompt

But to get real users into the feature loop fast, we need a way to identify them.

It is worthwhile to at least get interested users to provide their email in exchange for early access:

Anyway, if they are not interested enough to give their email for access to features, they definitely won't pay for your solution.

Email Challenge for Streamlit based on MailerLite API 👇

- Pros

- Integrated with MailerLite API: with double opt-in, you will get just verified emails

- No hardcoded values in the app

- Cons

- MailerLite Free Tier finishes at 1k subs

Example Code in CV-Check - A very simple one that allows certain user/passwords to access an app
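The decision logic of such an email gate fits in a few lines. This is a sketch, not the CV-Check code: `email_gate` is a made-up name, and `is_subscribed` stands for any callable that asks MailerLite (or another provider) whether the email is on the list.

```python
def email_gate(email, is_subscribed, dev_mode=False):
    """Return (allowed, message) for the DEV_MODE / email / subscription checks."""
    if dev_mode:
        return True, "DEV_MODE is on: access granted"
    if not email or not email.strip():
        return False, "Please enter email"
    if is_subscribed(email.strip().lower()):
        return True, "Welcome"
    return False, "Email not subscribed"
```

Keeping the provider call behind a callable means the same gate works unchanged when the check behind it is swapped.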

flowchart TD

Start([User Arrives at App 🙊]) --> DevModeCheck{Check DEV_MODE}

DevModeCheck -->|DEV_MODE=True| MainOptions["App Features Access 😊 "]

DevModeCheck -->|DEV_MODE=False| EmailEntry["Prompt user for email"]

EmailEntry -->|Email Entered| SubscriptionCheck["Check email subscription with MailerLite API"]

EmailEntry -->|No Email Entered| EndInfo["Show 'Please enter email' message"]

SubscriptionCheck -->|Subscribed| SuccessWelcome["Welcome Message and Log Success"]

SubscriptionCheck -->|Not Subscribed| Warning["Show warning: Email not subscribed and Log Failure"]

SuccessWelcome --> MainOptions

MainOptions --> OptionSelected{User selects an option}

OptionSelected -->|Analyze CV Template| AnalyzeCV["Run Analyze CV function"]

OptionSelected -->|CV To Offer| AdaptCV["Run Adapt CV to Offer function"]

OptionSelected -->|Offer Overview| OfferOverview["Run Offer Overview function"]

Warning --> Start

FormBricks is an open source survey platform…

Email Challenge for ST based on MailerLite+FormBricks 👇

You can make very interesting polls and embed them or reference them with a link in your apps. The Link Survey type was very helpful for this case.

It has very cool integrations, like with GSheets, which you can use:

- Pros

- Integrated with MailerLite API

- With MailerLite double opt-in, you will get just verified emails

- You can use Formbricks as another EmailWall with the GSheets integration

- No hardcoded values in the app

- Cons

- MailerLite Free Tier finishes at 1k subs

Example Code here. This module for MailerLite and this one for FormBricks via GSheets

flowchart TD

Start([User Arrives at App 🙊]) --> DevModeCheck{Back-End Checks DEV_MODE}

DevModeCheck -->|DEV_MODE=True| MainOptions["App Features Access 😍"]

DevModeCheck -->|DEV_MODE=False| EmailEntry["Prompt user for email"]

EmailEntry -->|Email Entered| SubscriptionCheck

EmailEntry -->|No Email Entered| EndInfo["Show 'Please enter email' message"]

%% Subscription checks surrounded in a box

subgraph Back-endElements [BE Subscription Logic]

SubscriptionCheck["Check email subscription with MailerLite API"]

SubscriptionCheck -->|Subscribed via MailerLite| SuccessWelcome["Welcome Message and Log Success"]

SubscriptionCheck -->|Not in MailerLite| FormBricksCheck["Check email in FormBricks Google Sheet"]

FormBricksCheck -->|Subscribed in FormBricks| SuccessWelcome

FormBricksCheck -->|Not Subscribed in Either| Warning["Show warning: Email not subscribed and Log Failure"]

end

SuccessWelcome --> MainOptions

MainOptions --> OptionSelected{User selects an option}

OptionSelected -->|Analyze CV Template| AnalyzeCV["Run Analyze CV function"]

OptionSelected -->|CV To Offer| AdaptCV["Run Adapt CV to Offer function"]

OptionSelected -->|Offer Overview| OfferOverview["Run Offer Overview function"]

Warning --> Start

But…how about paywalls?

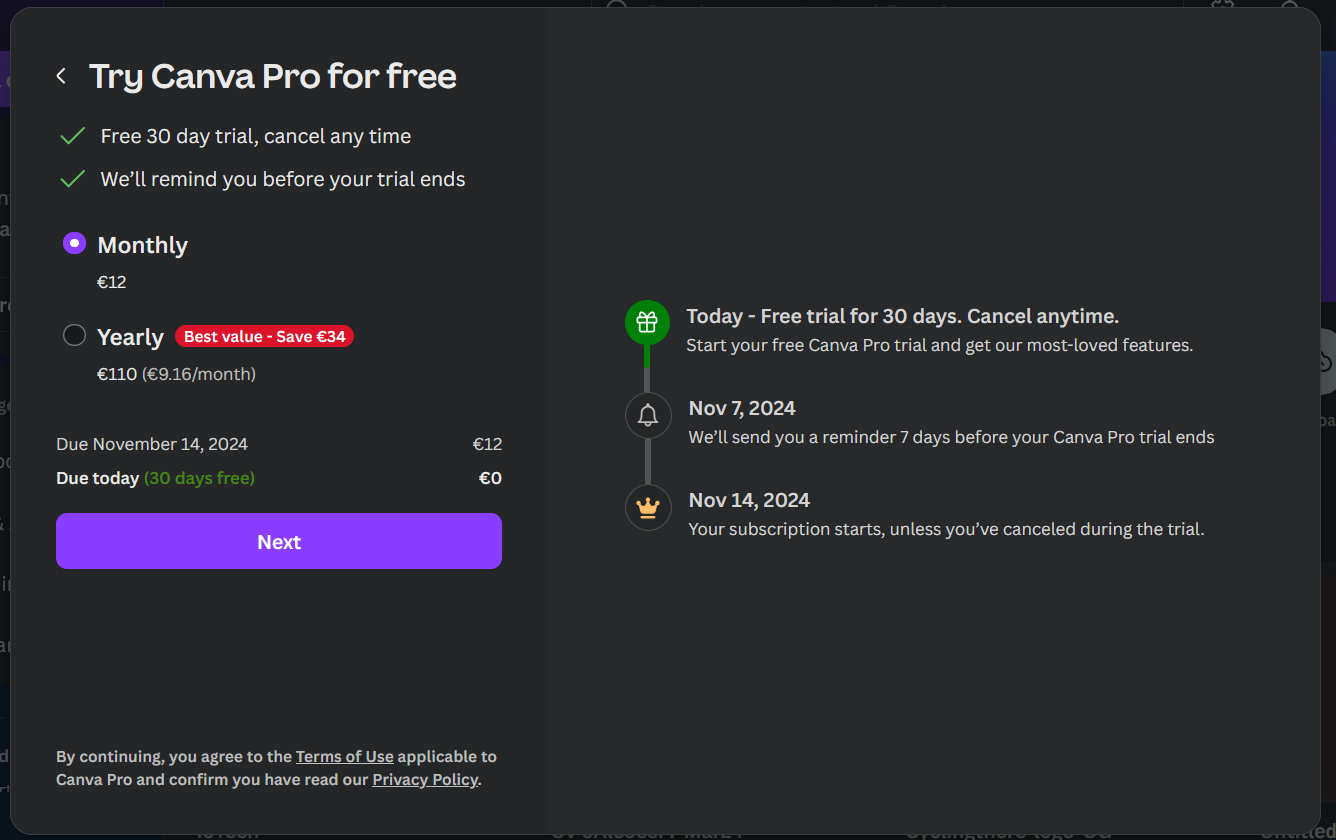

Some time ago I tried this simple Streamlit paywall example

but it didn't quite work for me.

It was time to check the Stripe API together with Streamlit

Stripe Emails with Active Subscription Paywall for Streamlit 📌

- Pros

- Integrated with Stripe API

- No hardcoded values in the app, get all the clients

- You can check if a certain mail has any active/trialing subscription

- Or if it has a specific subscription

- Cons

- 3rd parties dependencies

- No email verification - but card verification

- No password verification for a given email

This module checks if a given email has ever been a customer / has an active subscription / has subs for particular product IDs
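The subscription part of that check can be sketched as a pure function over Stripe-style subscription objects. The field names (`status`, `items.data[].price.product`) follow Stripe's Subscription object. The function name and the way the data is fetched are assumptions, not the module's actual code.

```python
ACTIVE_STATUSES = {"active", "trialing"}


def has_active_subscription(subscriptions, product_ids=None):
    """Check Stripe-style subscription dicts for an active or trialing one.

    If product_ids is given, the subscription must include one of those products.
    """
    for sub in subscriptions:
        if sub.get("status") not in ACTIVE_STATUSES:
            continue
        if product_ids is None:
            return True
        # price.product is the product id unless the product was expanded
        products = {item["price"]["product"] for item in sub["items"]["data"]}
        if products & set(product_ids):
            return True
    return False


# Fetching the data, assuming the `stripe` package and stripe.api_key are set:
#   customers = stripe.Customer.list(email=email).data
#   subs = stripe.Subscription.list(customer=customers[0].id, status="all").data
```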

In the end, I made an EmailWall module that combines the 3 checks, according to what the user wants to check against in the .env variable
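One way such an orchestrator could look, as a sketch only: the variable name `EMAILWALL_CHECKS`, its comma-separated format and the "any enabled check passes" rule are assumptions made for illustration.

```python
import os


def is_email_subscribed(email, checkers, enabled=None):
    """Run only the enabled checks and grant access if any of them passes.

    `checkers` maps a name to a callable(email) -> bool.
    `enabled` defaults to the comma-separated EMAILWALL_CHECKS env variable
    (a hypothetical name).
    """
    if enabled is None:
        enabled = os.environ.get("EMAILWALL_CHECKS", "")
    names = [n.strip().lower() for n in enabled.split(",") if n.strip()]
    return any(checkers[name](email) for name in names if name in checkers)


# Usage with the three checks wired in:
#   checkers = {
#       "mailerlite": check_mailerlite_subscription,
#       "formbricks": check_formbricks_subscription,
#       "stripe": orchestrator_stripe,
#   }
#   allowed = is_email_subscribed("user@example.com", checkers)
```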

graph TD

A[is_email_subscribed]

A -->|Check MailerLite| ML[check_mailerlite_subscription]

ML -->|Subscribed| ML_Yes["MailerLite: Subscribed"]

ML -->|Not Subscribed| ML_No["MailerLite: Not Subscribed"]

A -->|Check FormBricks| FB[check_formbricks_subscription]

FB -->|Subscribed| FB_Yes["FormBricks: Subscribed"]

FB -->|Not Subscribed| FB_No["FormBricks: Not Subscribed"]

A -->|Check Stripe| Stripe

subgraph Orchestrator Stripe Check

Stripe[orchestrator_stripe]

Stripe -->|Check if customer email exists| SE[check_stripe_email]

SE -- Yes --> SS[has_specific_active_or_trialing_subscription]

SE -- No --> SNE["Stripe: Customer does not exist"]

SS -->|Active/Trialing subscription found| SAT["Stripe: Subscribed"]

SS -->|No active or trialing subscription found| SNA["Stripe: Not Subscribed"]

end

Supabase as Streamlit Auth 👇

I read that it is a little bit tricky to implement.

Maybe they are not there yet?

Emails for Registered Users - AWS SES and more 👇

If you are at a PoC stage, having a very simple and reliable way to let an email bypass any auth is really helpful.

This is why I added such an option with the module described below.

Email bypass for the EmailWall - PoC Simple Auth Setup 📌

Streamlit New Features

Big thanks to Fanilo Andrianasolo for the great Streamlit Videos

- Keep up to date with the latest streamlit utilities

- And streamlit components

Upcoming & Cool Streamlit Features 📌

- https://github.com/kajarenc/stauthlib

- Google Auth done easy?

- Folium Maps Selections with pydeck_chart and apparently working with streamlit-folium too

- https://github.com/streamlit/streamlit/pull/9377

- Sample in the docs, which I tried and it works with pydeck

- Pure html to streamlit app - Ready from v1.40.0

- st.html content is not iframed. Executing JavaScript is not supported at this time.

- Charts Selections!!

- There are now widgets for both, audio_input and camera_input

- It resonates a lot with Speech-Rater and with Computer Vision

https://github.com/streamlit/cookbook, I tried many of these during the testing phase for AIssistant

There is also this video explaining what's going to be released…now.

What I've learnt with this one

#streamlit run st_scrapnsummarize.py --server.address 100.104.tail.scale

#streamlit run st_scrapnsummarize.py --server.address 100.104.tail.scale --server.port 8533

Better Python PKG management with requirements.txt… 📌

A trick for seeing which of the packages listed in requirements.txt have actually been installed
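The shell one-liners below do this with `pip freeze` and `grep`. The same check can be sketched in Python with the standard library's `importlib.metadata`. The function name is invented here and the parsing is deliberately simple (it ignores `-r` includes and URLs).

```python
import re
from importlib import metadata


def installed_from_requirements(path="requirements.txt"):
    """Map each package named in a requirements file to its installed version (or None)."""
    result = {}
    with open(path, encoding="utf-8") as handle:
        for line in handle:
            line = line.split("#", 1)[0].strip()
            if not line or line.startswith("-"):
                continue  # skip blanks, comments and pip options like -r / -e
            # keep only the distribution name, dropping extras and version pins
            name = re.split(r"[\[<>=!~;@ ]", line, maxsplit=1)[0]
            try:
                result[name] = metadata.version(name)
            except metadata.PackageNotFoundError:
                result[name] = None
    return result
```

A value of `None` means the package is listed but not installed in the current environment.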

#pip install -r requirements.txt

#pip freeze | grep -E '^(anthropic|streamlit)=='

#pip freeze | grep -Ff requirements.txt

pip freeze | grep -E "^($(paste -sd '|' requirements.txt))=="

How to load a .env in memory with CLI 📌
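From inside Python rather than the shell, a `.env` file can be loaded with a few lines of standard library code. This is a simplified sketch (the name `load_env_file` is made up, and inline comments or multi-line values are not handled). The `python-dotenv` package and its `load_dotenv()` function cover those cases.

```python
import os


def load_env_file(path=".env", override=False):
    """Read KEY=value lines from a .env file into os.environ; return what was read."""
    loaded = {}
    with open(path, encoding="utf-8") as handle:
        for raw in handle:
            line = raw.strip()
            if not line or line.startswith("#") or "=" not in line:
                continue
            if line.startswith("export "):
                line = line[len("export "):]
            key, value = line.split("=", 1)
            key, value = key.strip(), value.strip().strip("'\"")
            loaded[key] = value
            # existing environment values win unless override is requested
            if override or key not in os.environ:
                os.environ[key] = value
    return loaded
```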

source .env

#export GROQ_API_KEY="your-api-key-here"

#set GROQ_API_KEY=your-api-key-here

#$env:GROQ_API_KEY="your-api-key-here"

echo $GROQ_API_KEY $OPENAI_API_KEY $ANTHROPIC_API_KEY

Interesting Projects - More Diagrams, LocalStack, Code2Prompt 📌

I've summarized some interesting articles, using this script (FireCrawl+OpenAI)

More Diagrams: GraphViz

If MermaidJS and Python Diagrams were not enough.

Well, and DrawIO…

Then we have GraphViz.

Overview: Graphviz is a powerful open-source graph visualization software that uses the DOT language to define graphs. It is widely used for generating complex network, flow, and structural diagrams.

Primary Focus: Graphviz is focused on graph-based representations, such as flowcharts, state diagrams, network graphs, organizational charts, and dependency graphs.

Syntax: Graphviz uses the DOT language, which is a declarative, text-based format. It describes the nodes and edges in a graph with rich options for styling.
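To give a feel for the DOT syntax, here is a small sketch that builds a DOT digraph as plain text from Python, with no Graphviz installation needed to generate it. The helper name `to_dot` and the example edges are illustrative.

```python
def to_dot(edges, name="G"):
    """Render (source, target, label) tuples as a DOT digraph string."""
    lines = [f"digraph {name} {{", "  rankdir=LR;", "  node [shape=box];"]
    for source, target, label in edges:
        lines.append(f'  "{source}" -> "{target}" [label="{label}"];')
    lines.append("}")
    return "\n".join(lines)


print(to_dot([
    ("Audio", "Whisper", "transcribe"),
    ("Whisper", "LLM", "text"),
]))
```

The printed text can be pasted into any DOT renderer, or saved to a file and rendered with the `dot` command if Graphviz is installed.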

There is a live editor for GraphViz: http://www.webgraphviz.com/

Features:

- Complex Layouts: Graphviz supports automatic layout algorithms to position nodes, such as hierarchical, radial, and circular layouts.

- Advanced Styling: It offers a variety of options for styling nodes, edges, and labels, including colors, shapes, and gradients.

- Performance: Graphviz is optimized for rendering large graphs, making it suitable for generating complex visualizations.

- Rendering: It can output in various formats such as PNG, SVG, PDF, and more.

LocalStack - A Replacement for AWS 😲

LocalStack is a tool designed for developers who want to test AWS functionalities locally.

LocalStack is crucial for developers needing a local AWS-like environment without the complexities of AWS billing.

Conclusion: LocalStack is essential for developers needing local AWS API testing without unpredictable costs. It simplifies local development workflows.

Code2prompt 😲

code2prompt is a command-line tool designed to transform codebases into structured LLM prompts.

This project addresses the cumbersome task of generating prompts for LLMs from code.

It automates the collection and formatting of code, making it easier to interact with models like GPT and Claude.

In conclusion, code2prompt simplifies the process of creating prompts from codebases, making LLM interactions efficient.

Similar projects include CodeGPT and Code2Flow.

And along the way I've improved the Article/GH Summarizer with v3b

FAQ

How to Query Different APIs

You can also use OpenRouter API to query many different model providers…

LLMs - OpenAI,Anthropic, Groq…

- https://console.anthropic.com/workbench/

- https://console.groq.com/keys

- https://platform.openai.com/api-keys

Query OpenAI LLM Models >1 📌

A sample API call to OpenAI>1, for example to create tickets

But the user/system prompts can be better modularized if we follow the approach of the markdown summarizer
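A minimal sketch of that modular approach, assuming the `openai` package (v1 or later): the system prompt lives in a markdown file such as `prompts_mdsummarizer.md` and is read at call time. The function names and the `gpt-4o-mini` default are assumptions, not the project's actual code.

```python
from pathlib import Path


def build_messages(system_prompt_file, user_text):
    """Build chat messages with the system prompt read from a markdown file."""
    system_prompt = Path(system_prompt_file).read_text(encoding="utf-8").strip()
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_text},
    ]


def ask_openai(client, system_prompt_file, user_text, model="gpt-4o-mini"):
    """Send the messages to the Chat Completions API and return the reply text."""
    completion = client.chat.completions.create(
        model=model, messages=build_messages(system_prompt_file, user_text)
    )
    return completion.choices[0].message.content


# Usage, assuming OPENAI_API_KEY is set:
#   from openai import OpenAI
#   print(ask_openai(OpenAI(), "prompts_mdsummarizer.md", "# My notes\n..."))
```

With the prompt in its own file, each agent can change its behaviour without touching the Python code.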

Query Claude LLMs via Anthropic API 📌

Sample Anthropic Claude Models API call
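For reference, a call through the `anthropic` Python package can be sketched like this. `ask_claude` is an illustrative name, the model id is left to the caller, and `max_tokens` is required by the Messages API.

```python
def ask_claude(client, prompt, model, system=None, max_tokens=1024):
    """Send one user message to the Anthropic Messages API and return the text."""
    kwargs = {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    if system:
        kwargs["system"] = system  # the system prompt is a top-level parameter
    message = client.messages.create(**kwargs)
    # the reply is a list of content blocks; join the text ones
    return "".join(block.text for block in message.content if block.type == "text")


# Usage, assuming `pip install anthropic` and ANTHROPIC_API_KEY is set:
#   import anthropic
#   print(ask_claude(anthropic.Anthropic(), "Hello!", model="claude-3-5-sonnet-20241022"))
```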

See their docs for more examples

Anthropic API calls first tested during StreamlitMultichat

- Opus models are the most powerful, but Sonnet LLMs are a balance between speed/cost/quality of the reply

- There are already Vision and Computer Use capabilities in beta…

Normally the latest Anthropic (Claude) models become accessible very soon on the AWS Bedrock API, as Amazon invested a lot in the company

Query LLMs via Groq API - Crazy Inference 📌

Query LLMs via Ollama API - Open LLMs 📌

- Setup Ollama

- Download a LLM via Ollama

- Use this sample script to query the LLM via Ollama Python API
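The steps above can be sketched without extra dependencies by calling Ollama's REST endpoint `/api/chat` directly. This uses the standard library instead of the `ollama` Python package the sample script relies on, and the function names are made up for illustration. It assumes Ollama is listening on its default port 11434 and that the model has already been pulled.

```python
import json
import urllib.request


def build_ollama_request(model, prompt, host="http://localhost:11434"):
    """Build a non-streaming chat request for the Ollama REST API."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # return one JSON object instead of a stream of chunks
    }
    return urllib.request.Request(
        f"{host}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_ollama(model, prompt, host="http://localhost:11434"):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_ollama_request(model, prompt, host)) as response:
        return json.loads(response.read())["message"]["content"]


# Usage, with a running Ollama server and a pulled model:
#   print(ask_ollama("llama3.2", "Why is the sky blue?"))
```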

Ollama API calls first tested during StreamlitMultichat

Streamlit CI/CD

- With GitHub, using Docker buildx to get a multiarch (x86 & ARM64) container image

To use GitHub Actions to build container images automatically for your Streamlit projects, you will need a configuration file like this one.

- You can also build the container image manually

- Following these sample steps

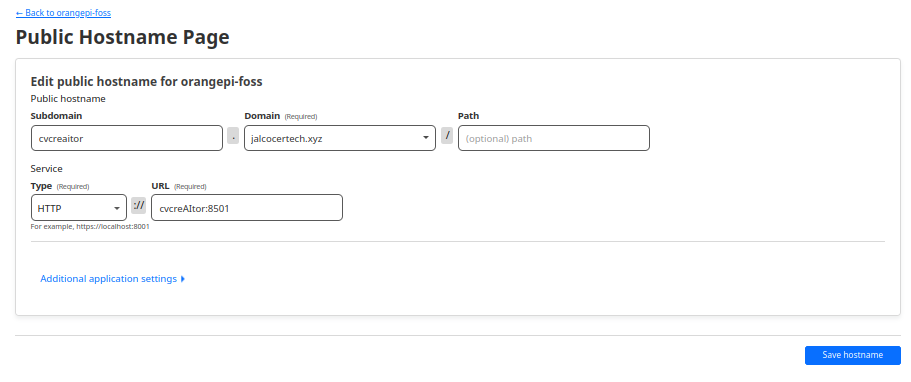

Deploying Streamlit

You can use Streamlit Cloud Services.

But you can also host Streamlit with your own Domain.

And without paying extra.

Remember to use: container_name:container_port, not the port on the host.