AI with Vision - Image2Text

TL;DR

Using AI with vision is a thing now.

Vision with AI

OpenAI

You can use the OpenAI API to feed one image and ask questions about it.

This is Image to Text!

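This functionality is offered through vision-capable models such as GPT-4 Turbo with Vision (gpt-4-vision-preview) and GPT-4o (gpt-4o). These are two separate models, and gpt-4-vision-preview was a preview release, so check OpenAI's model list for what is currently available.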

Here’s a breakdown of how you can do this and important considerations:

How it Works:

- Multimodal Input: The GPT-4 Turbo with Vision model is a large multimodal model (LMM) that can process both text and image inputs. This allows you to include an image directly in your API request alongside your question.

- Image Formats and Size: The API supports various image formats such as PNG, JPEG (.jpeg and .jpg), and WEBP. Non-animated GIFs are also supported. There’s a size limit of up to 20MB per image.

- Input Methods: You can provide the image to the API in two ways:

  - URL: You can provide a publicly accessible URL of the image. The OpenAI servers will then fetch the image from that URL.

  - Base64 Encoding: You can encode the image data as a Base64 string and include it directly in the API request. This is useful for local images that are not hosted online.

- Detail Level: When using the API, you can specify the level of detail the model should use when processing the image. The detail parameter can be set to low, high, or auto (letting the model decide). Lower detail is faster and cheaper for tasks that don’t require fine-grained understanding, while high detail is necessary for analyzing intricate details.

- Cost: The cost of analyzing an image depends on the input image size and the level of detail you specify. Images are processed in 512x512 pixel tiles, and the token cost is calculated based on the number of tiles and the detail level.
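The tile-based cost described above can be sketched as a small estimator. This is a rough sketch, not OpenAI's official calculator: the constants (85 base tokens, 170 tokens per tile) and the two resize steps (fit within 2048x2048, then bring the shortest side down to 768 px, never scaling up) are assumptions based on OpenAI's published guidance for GPT-4-class vision models and may have changed. The name estimate_image_tokens is hypothetical.

```python
import math

# Assumed constants; verify them against OpenAI's current pricing docs.
BASE_TOKENS = 85        # flat cost charged for every image
TOKENS_PER_TILE = 170   # cost of each 512x512 tile in high-detail mode
TILE_SIZE = 512


def estimate_image_tokens(width: int, height: int, detail: str = "high") -> int:
    """Rough token estimate for one image (hypothetical helper)."""
    if detail == "low":
        # Low detail uses a fixed budget regardless of image size.
        return BASE_TOKENS

    # Anything else ("high" or "auto") is estimated as high detail,
    # which gives an upper bound when the model picks the level itself.

    # Step 1: shrink to fit inside a 2048x2048 square, keeping aspect ratio.
    scale = min(1.0, 2048 / max(width, height))
    width, height = round(width * scale), round(height * scale)

    # Step 2: shrink so the shortest side is at most 768 px.
    scale = min(1.0, 768 / min(width, height))
    width, height = round(width * scale), round(height * scale)

    # Step 3: count the 512x512 tiles needed to cover the resized image.
    tiles = math.ceil(width / TILE_SIZE) * math.ceil(height / TILE_SIZE)
    return BASE_TOKENS + TOKENS_PER_TILE * tiles


print(estimate_image_tokens(1024, 1024, "high"))  # 765
print(estimate_image_tokens(2048, 4096, "high"))  # 1105
print(estimate_image_tokens(4000, 3000, "low"))   # 85
```

Under these assumptions, a 1024x1024 image in high detail is resized to 768x768, which needs four tiles, so the estimate is 85 + 4 x 170 = 765 tokens.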

Example API Request Structure (Conceptual):

While the exact code will depend on the programming language and OpenAI library you are using, the basic structure of the message content in your API request will look something like this:

{
  "model": "gpt-4-vision-preview",
  "messages": [
    {
      "role": "user",
      "content": [
        {
          "type": "text",
          "text": "What objects are present in this image?"
        },
        {
          "type": "image_url",
          "image_url": {
            "url": "https://example.com/your_image.jpg"
          }
        }
      ]
    }
  ],
  "max_tokens": 300
}

Or, if you’re using Base64 encoding:

{
  "model": "gpt-4-vision-preview",
  "messages": [
    {
      "role": "user",
      "content": [
        {
          "type": "text",
          "text": "Describe the main elements in this picture."
        },
        {
          "type": "image_url",
          "image_url": {
            "url": "data:image/jpeg;base64,/9j/4AAQSkZJRgABA..."
          }
        }
      ]
    }
  ],
  "max_tokens": 300
}

Key Considerations:

- Model Selection: Ensure you are using a model with vision capabilities, such as gpt-4-vision-preview or gpt-4o. Standard language models like gpt-3.5-turbo cannot process images directly.

- API Key: You will need an active OpenAI API key to make requests.

- Libraries and SDKs: OpenAI provides official and community-maintained client libraries in various programming languages (like Python) that simplify the process of making API calls.

- Error Handling: Implement proper error handling in your code to manage potential issues like invalid image URLs, incorrect image formats, or API errors.

- Rate Limits: Be aware of the API rate limits to avoid being temporarily blocked.

- Content Moderation: OpenAI’s content policy applies to both text prompts and images. Inappropriate content may be flagged and result in errors.

- Assistants API: While you can’t directly upload images to the Assistants API in the same way as the Chat Completions API, a workaround involves uploading the image to the API file storage with the purpose “vision” and then including the file ID in a user message within a thread. The Assistant can then access and process the image.
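The Error Handling and Rate Limits points above can be sketched as two small helpers: a pre-flight check on the image file, and a retry loop that backs off when the API answers with HTTP 429. This is only a sketch under stated assumptions: check_image and call_with_backoff are hypothetical names, the format list and 20 MB limit come from the notes earlier in this post, and the retry counts and delays are arbitrary defaults rather than values recommended by OpenAI.

```python
import os
import random
import time

# Limits taken from the format and size notes earlier in this post.
SUPPORTED_EXTENSIONS = {".png", ".jpg", ".jpeg", ".webp", ".gif"}
MAX_IMAGE_BYTES = 20 * 1024 * 1024  # 20 MB


def check_image(path: str) -> None:
    """Raise ValueError if the file is clearly unsuitable (hypothetical helper)."""
    ext = os.path.splitext(path)[1].lower()
    if ext not in SUPPORTED_EXTENSIONS:
        raise ValueError(f"Unsupported image format: {ext or 'none'}")
    if os.path.getsize(path) > MAX_IMAGE_BYTES:
        raise ValueError("Image is larger than 20 MB")


def call_with_backoff(send, max_attempts: int = 5, base_delay: float = 1.0):
    """Call send() and retry while it reports HTTP 429 (rate limited).

    send is any zero-argument callable returning an object with a
    status_code attribute, for example a lambda wrapping requests.post.
    """
    response = None
    for attempt in range(max_attempts):
        response = send()
        if response.status_code != 429:
            return response
        if attempt < max_attempts - 1:
            # Exponential backoff (1x, 2x, 4x, ...) plus a little jitter.
            delay = base_delay * (2 ** attempt)
            time.sleep(delay + random.uniform(0, base_delay / 10))
    # Still rate limited after the last attempt: hand back the 429 response.
    return response
```

A call might look like call_with_backoff(lambda: requests.post(url, headers=headers, json=payload)), after check_image(path) has passed. Passing the request as a callable keeps the retry logic independent of any particular HTTP library.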

import base64
import os

import requests


def encode_image_to_base64(image_path):
    """Read a local image and return its contents as a Base64 string."""
    with open(image_path, "rb") as image_file:
        return base64.b64encode(image_file.read()).decode("utf-8")


def query_openai_with_local_image(image_path, question):
    """Send a local image and a question to the Chat Completions API."""
    base64_image = encode_image_to_base64(image_path)

    headers = {
        "Content-Type": "application/json",
        # Replace the placeholder with your own API key
        "Authorization": "Bearer YOUR_OPENAI_API_KEY",
    }

    payload = {
        "model": "gpt-4-vision-preview",  # or another vision-capable model, such as gpt-4o
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {
                        "type": "image_url",
                        "image_url": {
                            # Adjust the MIME type if the image is not a JPEG
                            "url": f"data:image/jpeg;base64,{base64_image}"
                        },
                    },
                ],
            }
        ],
        "max_tokens": 300,
    }

    response = requests.post(
        "https://api.openai.com/v1/chat/completions",
        headers=headers,
        json=payload,
    )
    return response.json()


if __name__ == "__main__":
    local_image_path = "path/to/your/local/image.jpg"  # Replace with the actual path
    user_question = "What is the main subject of this image?"

    if os.path.exists(local_image_path):
        openai_response = query_openai_with_local_image(local_image_path, user_question)
        print(openai_response)
    else:
        print(f"Error: Image not found at {local_image_path}")

Understanding OpenAI Vision

OpenAI Vision API Crash Course - Chat with Images (Node)

LLaVa on a Pi:

LLaVa

If you are familiar with local LLMs powered by Ollama…

ollama run llava #https://ollama.com/library/llava

Image Recognition with LLaVa in Python
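As a minimal sketch of querying LLaVa from Python, the snippet below talks to a local Ollama server over its HTTP API using only the standard library. It assumes Ollama is running on its default address (http://localhost:11434) and that the llava model has already been pulled. The helper names build_llava_payload and ask_llava are hypothetical.

```python
import base64
import json
import urllib.request

# Default address of a local Ollama server; change it if yours differs.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_llava_payload(image_bytes: bytes, question: str, model: str = "llava") -> dict:
    """Build the JSON body for Ollama's generate endpoint (hypothetical helper)."""
    return {
        "model": model,
        "prompt": question,
        # Ollama expects a list of Base64-encoded images, without a data: prefix.
        "images": [base64.b64encode(image_bytes).decode("utf-8")],
        "stream": False,  # ask for a single JSON reply instead of a stream
    }


def ask_llava(image_path: str, question: str) -> str:
    """Send a local image and a question to LLaVa and return the answer text."""
    with open(image_path, "rb") as image_file:
        payload = build_llava_payload(image_file.read(), question)

    # Supplying data makes urllib send a POST request.
    request = urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as reply:
        return json.loads(reply.read())["response"]
```

With the server running, ask_llava("path/to/your/local/image.jpg", "What is the main subject of this image?") should return the model's answer as plain text. Unlike the OpenAI request, the image is sent as raw Base64 rather than as a data URL.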

Conclusion

Now we have two ways (local and API-based) to get context about images!

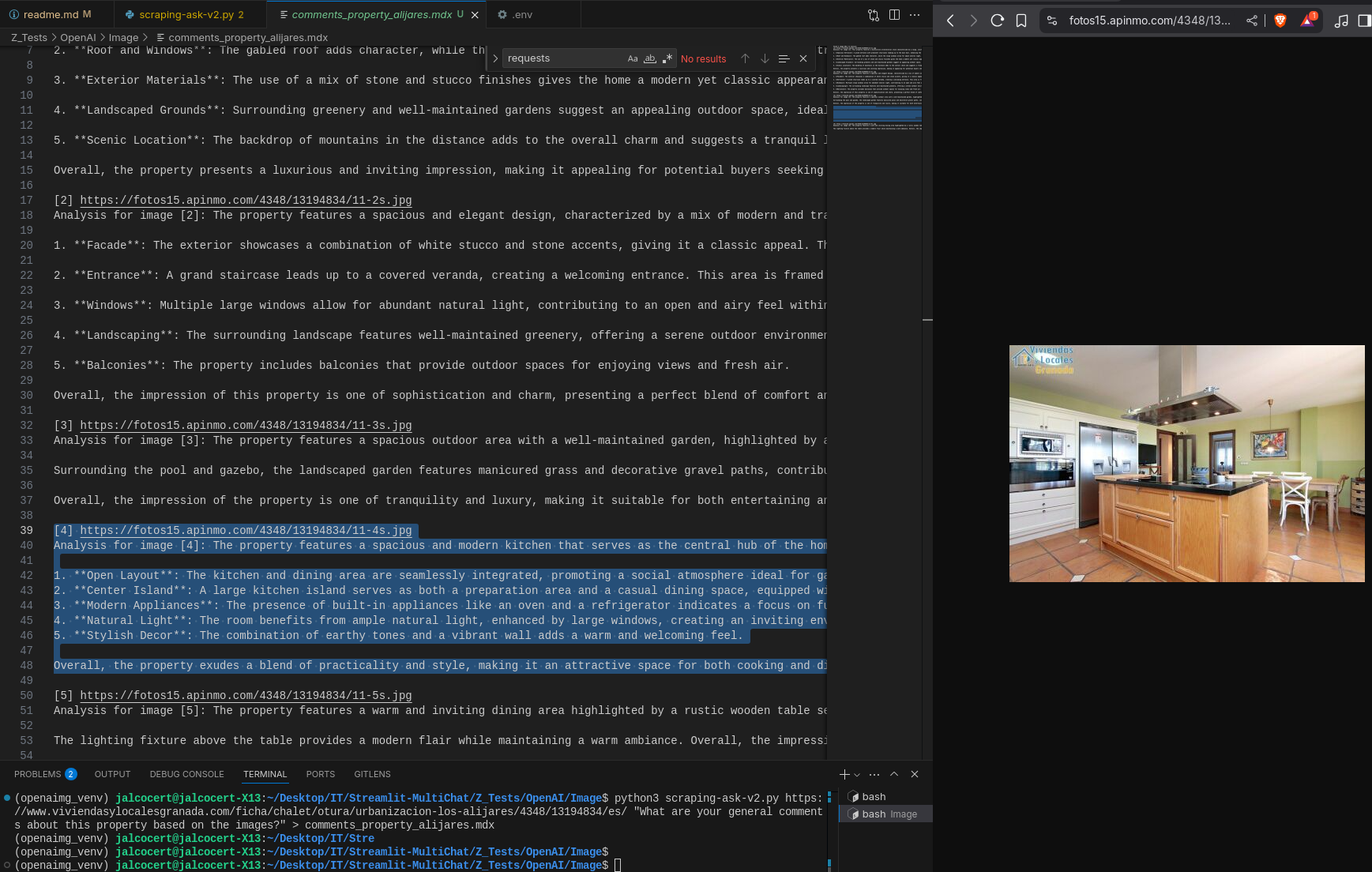

This can also be helpful for the real estate project, where you can ask for context about the scraped images of a particular property:

python3 scraping-ask-v2.py > comments_property_alijares.mdx

RoomGPT for Real Estate

What's great?

Given a photo of a room and a prompt…you can re-imagine how everything could look:

MIT | Upload a photo of your room to generate your dream room with AI.

Have a look at such projects if you like home styling!

Text to Image

If what you want is the opposite…

Given a text description of an image, generate the image itself:

See also: https://promptfolder.com/midjourney-prompt-helper/

FAQ

How to apply CV (computer vision) with Python

Computer Vision with PyTorch ⏬

Computer Vision with Yolov8 Models ⏬

Outro

Pixtral (Open source vision model)
