BiP with APIFY - Sell shovels

TL;DR

This is just amazing: https://apify.com/store/categories/lead-generation

Intro

This year, I covered PostIZ for social media automation here and also:

And since it is 2025, Postiz supports MCP.

A Sales Pipeline

If you read the social media automation post with n8n, you know that there are social media scraping tools.

APIFY is one of those.

In fact, APIFY can do that and more than you might imagine.
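As a rough sketch of what triggering an APIFY actor from Python could look like, the snippet below builds a request against the `run-sync-get-dataset-items` endpoint of the APIFY REST API (v2), using only the standard library. The actor ID `someuser~lead-scraper` and the `searchTerms` input field are hypothetical placeholders: every actor in the store defines its own input schema, so check the actor's page and the API reference before relying on this.

```python
import json
import urllib.request

API_BASE = "https://api.apify.com/v2"


def build_run_request(actor_id: str, run_input: dict, token: str) -> urllib.request.Request:
    """Build a POST request that runs an actor and asks for its dataset items."""
    url = f"{API_BASE}/acts/{actor_id}/run-sync-get-dataset-items"
    return urllib.request.Request(
        url,
        data=json.dumps(run_input).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )


def run_actor(actor_id: str, run_input: dict, token: str) -> list:
    """Send the request and decode the JSON array of scraped items."""
    request = build_run_request(actor_id, run_input, token)
    with urllib.request.urlopen(request, timeout=300) as response:
        return json.load(response)


# Hypothetical usage (needs a real token, actor ID, and that actor's input schema):
# items = run_actor("someuser~lead-scraper", {"searchTerms": ["dentist berlin"]}, token)
```

Keeping the request builder separate from the network call makes it easy to inspect the URL and payload before spending any platform credits.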

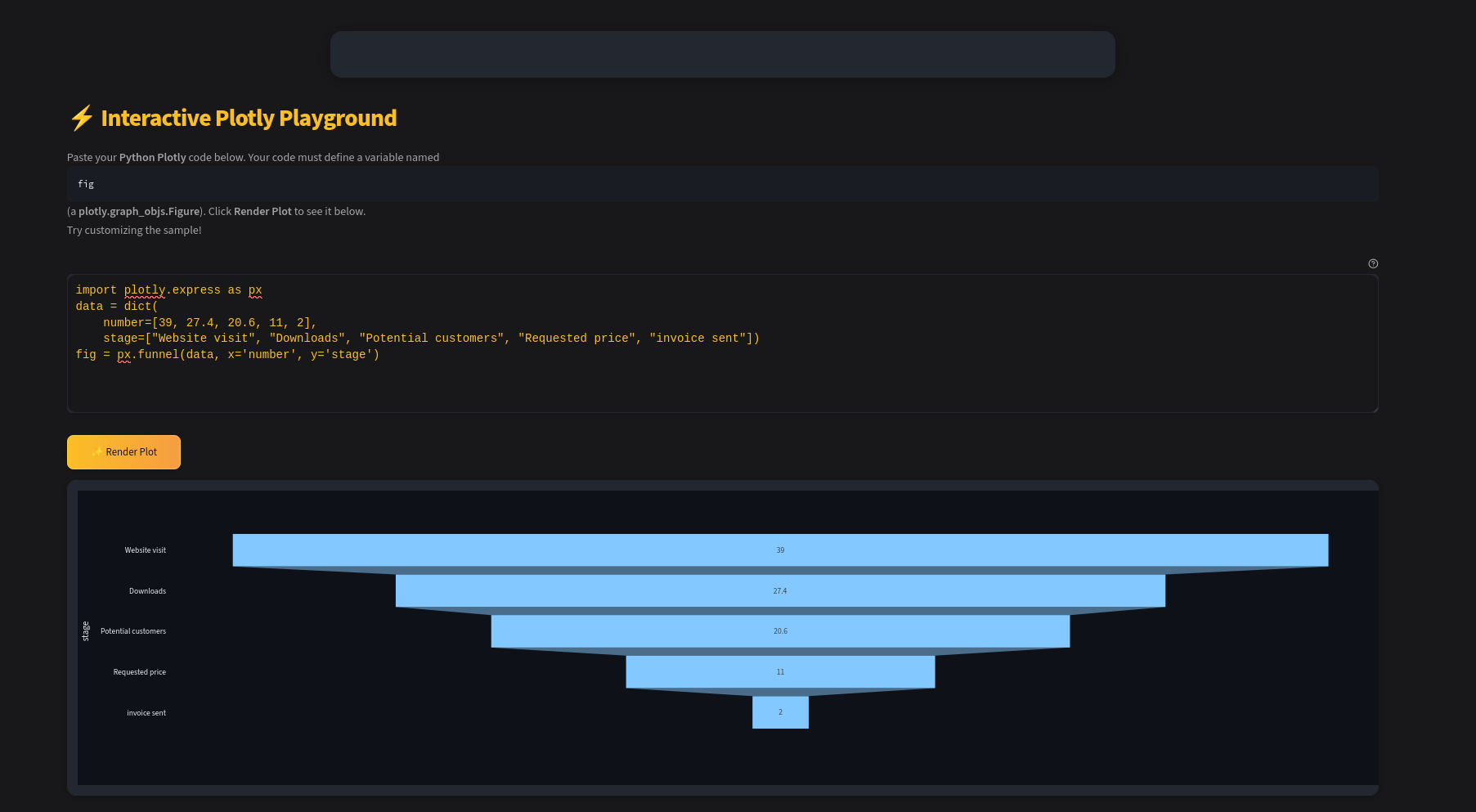

https://apexcharts.com/javascript-chart-demos/funnel-charts/funnel/

https://www.chartjs.org/docs/latest/samples/information.html

https://jalcocert.github.io/JAlcocerT/ai-bi-tools/#pygwalker

https://jalcocert.github.io/JAlcocerT/tinkering-with-reflex/#reflex-sample-apps

https://reflex.dev/docs/library/graphing/charts/funnelchart/
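Before reaching for any of the funnel chart libraries linked above, it helps to compute the numbers a funnel actually shows. This is a minimal sketch in which the stage names and counts are made up purely for illustration; it returns the conversion rate of each stage against the previous one and against the top of the funnel.

```python
from typing import List, Tuple


def funnel_conversion(stages: List[Tuple[str, int]]) -> List[dict]:
    """Return per-stage conversion versus the previous stage and the first stage."""
    if not stages:
        return []
    first_count = stages[0][1]
    previous_count = first_count
    rows = []
    for name, count in stages:
        rows.append({
            "stage": name,
            "count": count,
            # Share of the previous stage that made it to this one.
            "step_rate": count / previous_count if previous_count else 0.0,
            # Share of the very first stage that made it to this one.
            "overall_rate": count / first_count if first_count else 0.0,
        })
        previous_count = count
    return rows


# Illustrative numbers only (not real data).
pipeline = [("Leads scraped", 1000), ("Contacted", 400), ("Replied", 80), ("Customers", 20)]
for row in funnel_conversion(pipeline):
    print(f"{row['stage']:<14} {row['count']:>5} {row['step_rate']:>7.1%} {row['overall_rate']:>7.1%}")
```

The resulting list of dicts can be passed to whichever charting tool you prefer, since most funnel charts only need a label and a value per stage.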

Conclusions

Content for Forums

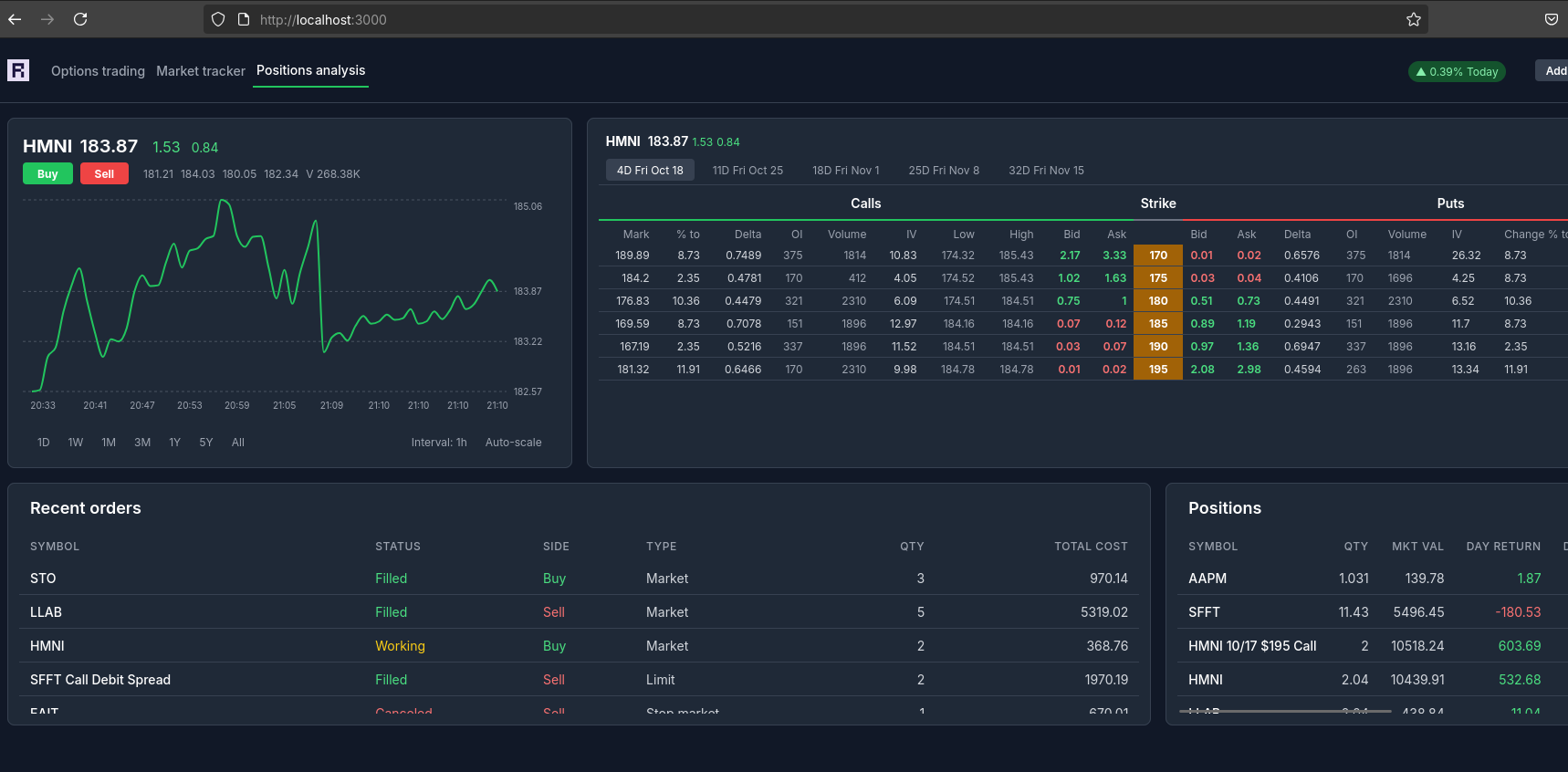

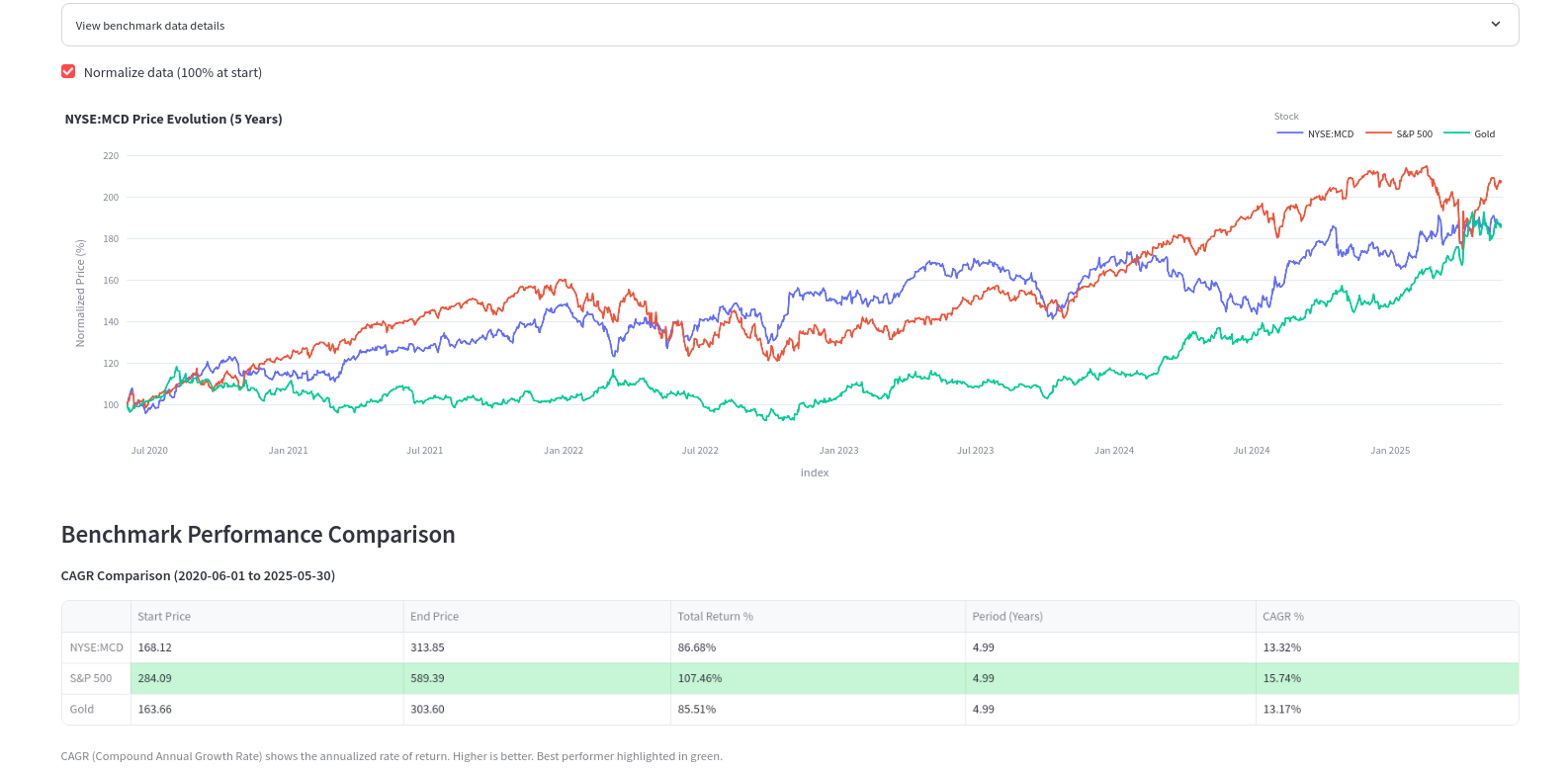

https://jalcocert.github.io/JAlcocerT/python-stocks-webapp/#the-charts

Like…how would you reply to such a post?

git clone https://github.com/JAlcocerT/DataInMotion

#git branch -a

git fetch --all --prune && (git switch libreportfolio || git switch --track origin/libreportfolio) && git pull

#WK42Y25

uv run animate_sequential_compare_price_evolution_flex_custom.py SPY O 2020-01-01 10 short

uv run plot_price_and_cumulative_dividends_with_start.py O 2010-01-01

uv run ./tests/flexible_stock_timeseries.py O 2010-01-01 1111 youtube plot

#uv run ./tests/flexible_stock_timeseries.py SPY O 2010-01-01 1111 short animate

uv run tests/flexible_stock_timeseries.py GLD BTC-USD 2024-01-01 1111

uv run animate_sequential_compare_price_evolution_flex_custom.py GLD BTC-USD 2025-01-01 10 short

#uv run streamlit run streamlit_btc_poly.py

Very simple, with such scripts

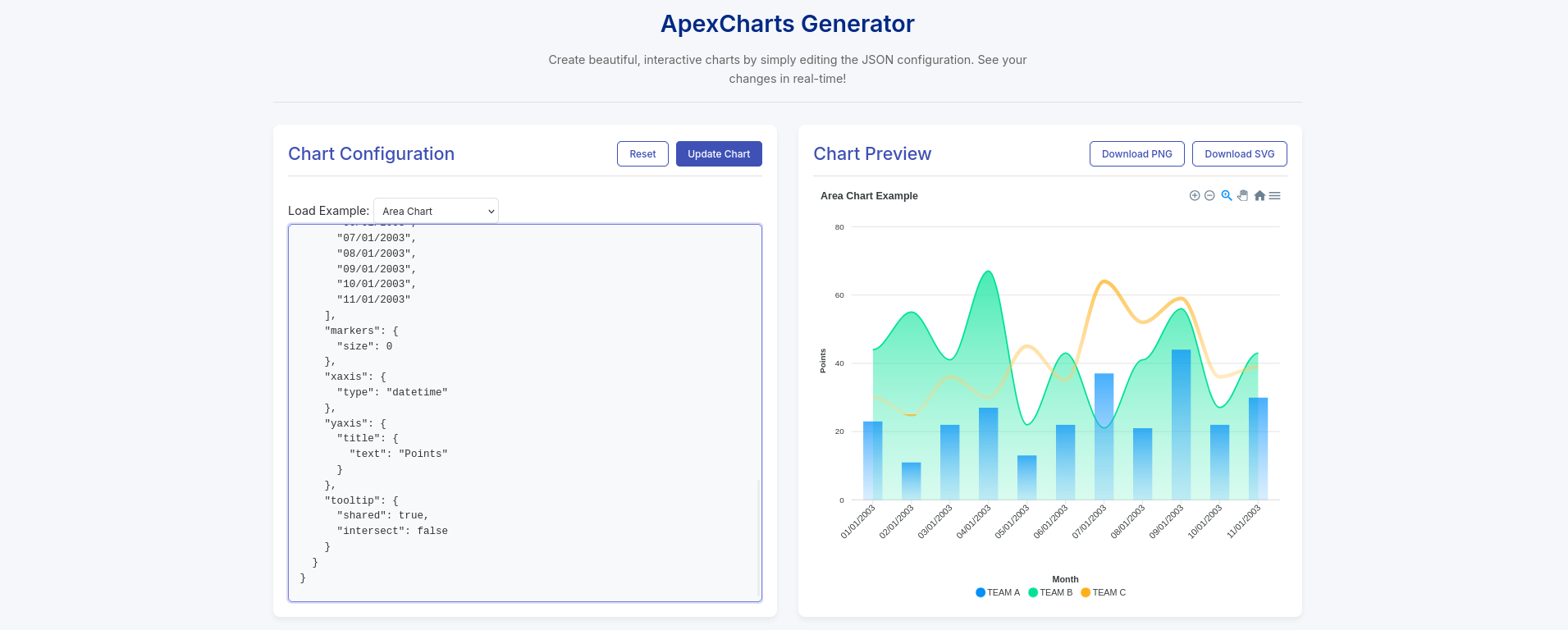

With something like this ApexCharts chart

Social Media Automation for Forums

Some people seem to be everywhere.

Actually, their agents are.

That’s why you can see them on Reddit, Twitter/X or other forums…all at once.

Is it possible to somehow get what's trending on Reddit sub-forums (subreddits)?
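One way to approach this, sketched under assumptions: Reddit exposes listing pages as JSON when `.json` is appended to the URL (for example `/r/<subreddit>/hot.json`). The snippet below fetches such a listing and ranks posts by score. The User-Agent string is an arbitrary placeholder, and Reddit may rate-limit or block unauthenticated requests, so treat this as an experiment rather than a production scraper.

```python
import json
import urllib.request


def parse_listing(listing: dict) -> list:
    """Flatten a Reddit 'Listing' payload into simple post dicts, highest score first."""
    posts = []
    for child in listing.get("data", {}).get("children", []):
        data = child.get("data", {})
        posts.append({
            "title": data.get("title", ""),
            "score": data.get("score", 0),
            "comments": data.get("num_comments", 0),
            "url": "https://www.reddit.com" + data.get("permalink", ""),
        })
    return sorted(posts, key=lambda post: post["score"], reverse=True)


def fetch_hot(subreddit: str, limit: int = 10) -> list:
    """Download the 'hot' listing of a subreddit and return the parsed posts."""
    url = f"https://www.reddit.com/r/{subreddit}/hot.json?limit={limit}"
    request = urllib.request.Request(url, headers={"User-Agent": "trend-check-sketch/0.1"})
    with urllib.request.urlopen(request, timeout=30) as response:
        return parse_listing(json.load(response))


# Hypothetical usage (performs a network call):
# for post in fetch_hot("dividends", limit=5):
#     print(post["score"], post["title"])
```

The parsing step is kept separate from the download so that it also works on listings obtained another way, for instance from a scraping service.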

Forocoches

Imagine saving interesting posts and commenting automatically:

Cool infographic inspiration:

- https://forocoches.com/foro/showthread.php?t=10455900&page=45

- https://forocoches.com/foro/showthread.php?t=10455900&page=37

If you know what's a hot topic, why not post about it automatically on a few social media platforms?

Turning numbers into motivation!

— Libre Portfolio (@LibrePortfolio) July 3, 2025

See how every payment chips away at your debt—and how much goes to interest.

Stay consistent, and the finish line gets closer every month! #DebtPayoff #Amortization pic.twitter.com/RoiYgqAdBM
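The arithmetic behind a chart like that is the standard fixed-payment amortization formula, payment = P·r / (1 − (1 + r)^−n), with r the monthly rate and n the number of months. A minimal sketch follows; the loan figures in the usage comment are invented for illustration and are not the ones behind the tweet.

```python
def amortization_schedule(principal: float, annual_rate: float, months: int) -> list:
    """Return one row per month with the interest/principal split of a fixed payment."""
    monthly_rate = annual_rate / 12
    if monthly_rate == 0:
        payment = principal / months
    else:
        payment = principal * monthly_rate / (1 - (1 + monthly_rate) ** -months)

    balance = principal
    rows = []
    for month in range(1, months + 1):
        interest = balance * monthly_rate       # cost of borrowing this month
        principal_paid = payment - interest     # what actually reduces the debt
        balance = max(balance - principal_paid, 0.0)
        rows.append({
            "month": month,
            "payment": payment,
            "interest": interest,
            "principal_paid": principal_paid,
            "balance": balance,
        })
    return rows


# Illustrative usage: a 10,000 loan at 6% per year over 12 months.
# for row in amortization_schedule(10_000, 0.06, 12):
#     print(row["month"], round(row["interest"], 2), round(row["balance"], 2))
```

Early rows carry the most interest, and the interest share shrinks as the balance falls, which is exactly the shape such an infographic visualizes.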

FAQ

The video “How to Use AI to Find a $1M Idea [Reddit, Claude]” features Steph France presenting the “Gold Mining Framework,” a step-by-step method to discover a million-dollar business idea and create a high-converting landing page within 45 minutes using AI tools, no coding or copywriting skills required.

Key Highlights

Gold Mining Framework Overview: This framework uses six AI tools and five prompts to mine data from online conversations and validate business ideas based on real customer pain points expressed on platforms like Reddit.

Step 1 - Find a Market: Start within the three core markets—health, wealth, and relationships—where people are willing to spend money. Use AI prompts to expand into deep sub-niches, focusing on areas of personal interest or expertise.

Step 2 - Validate Demand: Use tools like Google Search, keyword analytics, and Google Trends to assess search volumes and trends for chosen sub-niches, validating stable or growing market interest.

Step 3 - Gather Data: Search Reddit using advanced queries to locate active discussions and authentic customer pain points relevant to the niche.

Step 4 - Process Data: Feed conversations to AI models (like Claude) to extract and categorize pain points with actual quotes from users, helping identify real problems faced by the market.

Step 5 - Generate Business Ideas: AI applies frameworks, including market gap and product differentiation, to produce focused business ideas tailored to identified pain points and trends.

Step 6 - Create Landing Page: Using the distilled data and chosen business idea, generate compelling landing page copy with AI tools like Lovable that speaks directly to customers’ pain points in emotionally resonant language.

Example Use Case: They demonstrated this process using the co-parenting niche, uncovering multiple pain points and crafting business ideas such as conflict-focused platforms and child-centric transition tools, complete with landing page features, FAQs, and calls-to-action.

Additional Insights

- The process emphasizes using markdown formatting in prompts for optimal AI understanding.

- Tips include building email waitlists using quizzes for pre-launch validation of interest.

- Steph advocates for iterative testing, user validation, and community engagement to refine ideas.

- The entire workflow showcases how AI can remove common mental blocks and biases in idea generation by automating research and validation.

This method leverages AI’s ability to absorb vast amounts of qualitative data, identify real customer needs, and create tailored marketing content quickly, dramatically accelerating the early stages of startup ideation and go-to-market preparation.[1]
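To make steps 3 and 4 a bit more concrete, here is a minimal sketch of how collected comments could be packed into a markdown-formatted prompt before handing them to a model such as Claude. The prompt wording, the function name `build_pain_point_prompt`, and the sample comments are my own assumptions; they are not the prompts used in the video.

```python
def build_pain_point_prompt(niche: str, comments: list) -> str:
    """Assemble a markdown prompt asking a model to categorize pain points with quotes."""
    # Keep only non-empty comments and present each one as a quoted bullet.
    quoted = "\n".join('- "{}"'.format(text.strip()) for text in comments if text.strip())
    return (
        "# Task\n"
        f"Extract customer pain points for the niche: {niche}\n\n"
        "## Instructions\n"
        "- Group similar complaints into categories.\n"
        "- Support each category with verbatim quotes from the source comments.\n\n"
        "## Source comments\n"
        f"{quoted}\n"
    )


# Made-up comments for illustration only.
prompt = build_pain_point_prompt(
    "co-parenting",
    ["Coordinating the weekly schedule is exhausting.", "Handovers always end in an argument."],
)
print(prompt)
```

The resulting string can be pasted into a chat window or sent through whichever model API you use; the headings simply give the model a clear structure to follow.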

The video “Python Tutorial: Build an AI-assisted Reddit Scraping Pipeline” is a comprehensive course that teaches how to create an automated, resilient system in Python for extracting and analyzing Reddit posts around specific topics using AI and modern tools.

Key Highlights:

Purpose: Build a data extraction and tracking platform to gather Reddit conversations on topics of interest (e.g., camping, AI news) for deeper understanding of real-world user discussions.

Technical Stack: Python, Jupyter notebooks for prototyping, Django for web app and automation coordination, PostgreSQL for database, Redis for caching/queues, Celery and Django QStash for background task/workflow management, Bright Data APIs for search and crawling, LangChain and LangGraph for AI and tool integration, and Cloudflare tunnels for hosting.

Core Capabilities:

- AI-powered Google SERP searches to find relevant Reddit communities.

- Automated scraping of Reddit posts and comments via Bright Data crawl API.

- Resilient background task scheduling and execution with Celery and QStash.

- Structured storing of scraped Reddit data within Django models.

- Use of LLMs (Google Gemini, LangChain) to extract topics, analyze, and make scraping decisions.

- Real-time webhook handlers to process incoming scrape data.

- Tools for fuzzy query matching and automated scraping on content changes.
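The fuzzy query matching mentioned in the last bullet can be approximated with the standard library. This sketch uses `difflib.get_close_matches`, which is a swapped-in technique and not necessarily what the tutorial uses; the community names are illustrative.

```python
import difflib


def match_communities(query: str, known: list, cutoff: float = 0.6) -> list:
    """Return up to three known community names that resemble the query, best first."""
    # Compare case-insensitively, but return the names with their original casing.
    lowered = {name.lower(): name for name in known}
    hits = difflib.get_close_matches(query.lower(), list(lowered), n=3, cutoff=cutoff)
    return [lowered[hit] for hit in hits]


# Illustrative usage with made-up saved communities.
saved = ["VanLife", "camping", "CampingGear"]
print(match_communities("campng", saved))
print(match_communities("vanlife", saved))
```

Raising `cutoff` makes matching stricter, which helps when a typo should map to one community rather than to several similarly named ones.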

Demonstration: Shows how to query topics (e.g., camping, VanLife), find and save Reddit communities, and scrape posts and discussions in an automated workflow.

Programming Approach: Starts with Python and Jupyter for experimenting with scraping and AI integration, then transitions into a Django-based project for database-backed, scalable production use with background workers and webhooks.

Security & Best Practices: Demonstrates how to securely manage API keys with environment variables and Git ignore rules.
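A minimal sketch of the environment-variable approach, assuming a hypothetical variable name such as `BRIGHT_DATA_API_KEY` (the tutorial's actual names may differ). The key never appears in the source file, and a `.env` file holding it would be listed in `.gitignore`.

```python
import os


def get_api_key(name: str) -> str:
    """Read a secret from the environment and fail loudly if it is missing."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"Missing environment variable: {name}")
    return value


# Hypothetical usage; export the variable in your shell or load it from a git-ignored .env file:
# api_key = get_api_key("BRIGHT_DATA_API_KEY")
```

Failing at startup with a clear message is usually easier to debug than an authentication error deep inside a background task.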

This tutorial is aimed at developers looking to build advanced, AI-enhanced web scraping and social listening tools, particularly focused on Reddit, but concepts are expandable.

It combines practical Python skills with modern AI libraries and cloud scraping services to automate data extraction for research and insights.