An overview of F/OSS Audio to Text Tools. Speech rAIter

Tl;DR

Creating a streamlit app that rates your speech based on OpenAI TTS/S2T capabilities.

Intro

An overview to the existing open source alternatives for audio to text conversion (also called Speech to Text).

But first: how to create a PoC to help people get better at public speaking.

The Speech Rater

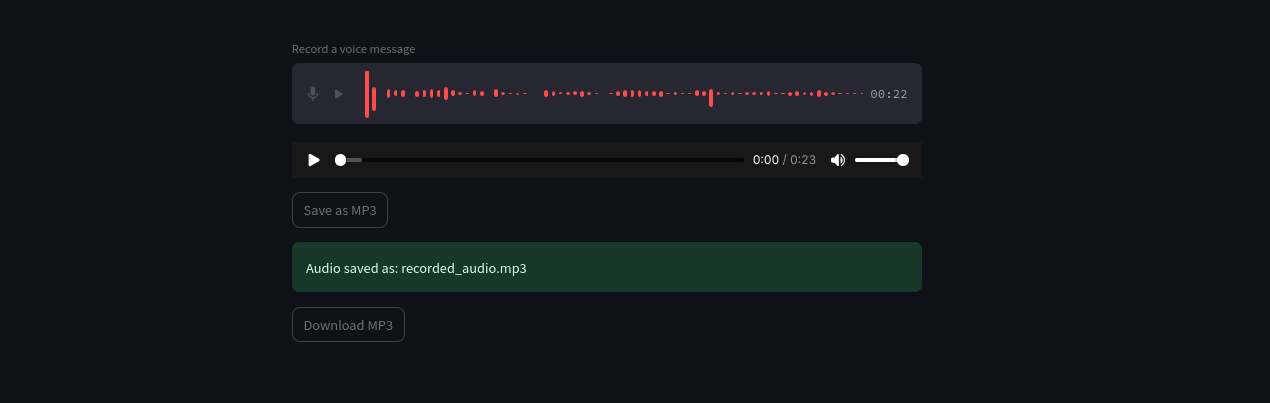

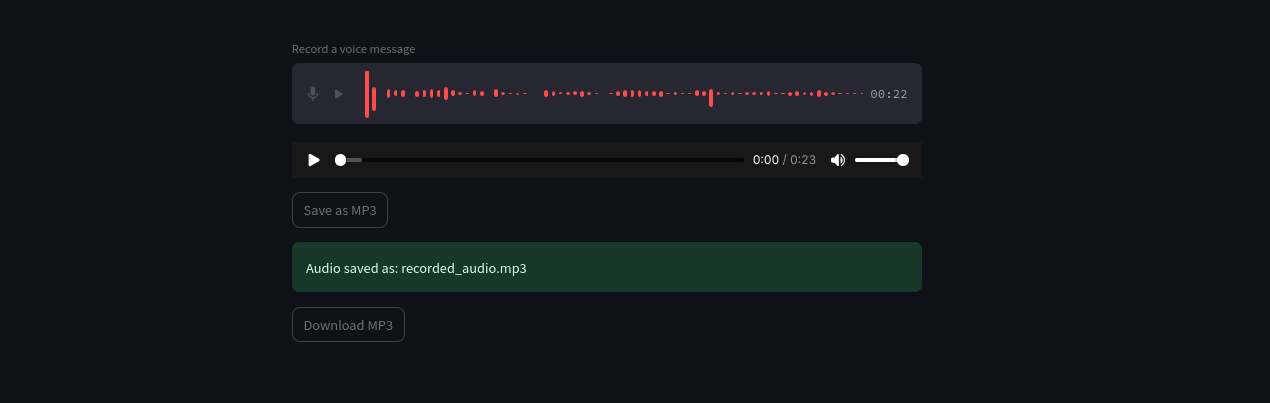

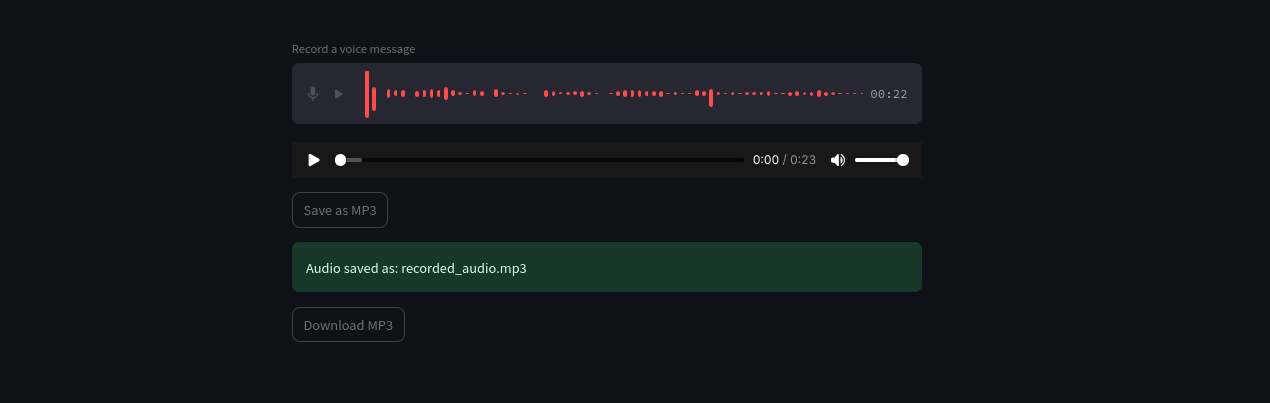

How about using streamlit to input and output audio?

Well, plugging LLMs to that is kind of easy:

OpenAI TTS and Transcription Project

OpenAI TTS and Transcription Project- speechraiter.py

- requirements.txt

- Dockerfile

- README.md

- Auth_functions.py

- Streamlit_OpenAI.py

- readme.md

- openai_t2t.py

- openai-tts.py

- audio-input.py

- audio-input-save.py

It was all about getting the streamlit audio part right.

graph TD

A[User records Audio] --> B(Streamlit receives Audio);

B --> C{OpenAI Transcription};

C --> D[Transcription Inputs to LLM];

D --> E[Text-to-Speech == TTS];S2T

The process of converting spoken words into written text is called transcription.

The output of this process is also often referred to as a transcript.

OpenAI Whisper

This one requires OpenAI API Key.

But its worth to give it a try.

MIT | Robust Speech Recognition via Large-Scale Weak Supervision

Ecoute

- Project Source Code: https://github.com/SevaSk/ecoute

- License: MIT

Ecoute is a live transcription tool that provides real-time transcripts for both the user’s microphone input (You) and the user’s speakers output (Speaker) in a textbox.

git clone https://github.com/SevaSk/ecoute

cd ecoute#python -m venv solvingerror_venv #create the venv

python3 -m venv ecoute_venv #create the venv

#solvingerror_venv\Scripts\activate #activate venv (windows)

source ecoute_venv/bin/activate #(linux)

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cpupip install -r requirements.txt

pip install whisper==1.10.0#OPENAI_API_KEY="sk-somekey" #linux

#$Env:OPENAI_API_KEY = "sk-somekey" #PS

#cmdecoute requirements

- Record Audio from speakers: https://github.com/s0d3s/PyAudioWPatch

- OpenAI Whisper: https://pypi.org/project/openai-whisper/#history

Trying to bundle Ecoute 📌

python main.py

# python main.py --api# Use the specified Python base image

FROM python:3.10-slim

# Set the working directory in the container

WORKDIR /app

# Install necessary packages

RUN apt-get update && apt-get install -y \

git \

build-essential

#choco install ffmpeg

# Clone the private repository

RUN git clone https://github.com/SevaSk/ecoute

WORKDIR /app/ecoute

# Copy the project files into the container

COPY . /app

RUN pip install -r requirements.txt

# Keep the container running

#CMD ["tail", "-f", "/dev/null"]Ecoute is a live transcription tool that provides real-time transcripts for both the user’s microphone input (You) and the user’s speakers output (Speaker) in a textbox.

It also generates a suggested response using OpenAI’s GPT-3.5 for the user to say based on the live transcription of the conversation.

docker build -t ecoute .

#docker tag ecoute docker.io/fossengineer/ecoute:latest

#docker push docker.io/fossengineer/ecoute:latest#version: '3'

services:

ai-ecoute:

image: fossengineer/ecoute # Replace with your image name and tag

container_name: ecoute

ports:

- "8001:8001"

volumes:

- ai-privategpt:/app

command: /bin/bash -c "main.py && tail -f /dev/null" #make run

volumes:

ai-ecoute:oTranscribe

MIT | A free & open tool for transcribing audio interviews

WriteOutAI

MIT | Transcribe and translate your audio files - for free

WHISHPER

aGPL | Transcribe any audio to text, translate and edit subtitles 100% locally with a web UI. Powered by whisper models!

Flask Intro

Flask IntroConclusions

Now we have seen the differences between TTS and S2T (Transcription) frameworks out there!

Time to do cool things with them.

Like…putting together a voice assistant with Streamlit:

For TTS, lately OpenAI have made interesting upgrades, with 4o-mini.

- Voice Synthesis: TTS systems use various techniques to create synthetic voices. E

- arly systems used concatenative synthesis (piecing together recorded human speech), while modern systems often use more advanced techniques like statistical parametric synthesis and neural network-based synthesis, which can produce more natural-sounding speech.

Streamlit Audio

With the st.audio_input component, a lot of cool stuff can be done: https://docs.streamlit.io/develop/api-reference/widgets/st.audio_input

See st.audio_input - https://docs.streamlit.io/develop/api-reference/widgets/st.audio_input

Thanks to Benji youtube video: https://www.youtube.com/watch?v=UnjaSkrfWOs

I have added a sample working script at the MultiChat project, here: https://github.com/JAlcocerT/Streamlit-MultiChat/blob/main/Z_Tests/OpenAI/Audio/audio-input.py

See also another way to do T2S with openAI: https://github.com/JAlcocerT/Streamlit-MultiChat/blob/main/Z_Tests/OpenAI/Audio/openai-tts.py

More Audio Generation

TRY Ecoute IN WINDOWS

python3 -m venv ecoutevenv

source ecoutevenv/bin/activate

apt install ffmpeg

git clone https://github.com/SevaSk/ecoute ./ecoute_repo

cd ecoute_repo

python -m pip install -r requirements.txt

chmod +x cygwin_cibuildwheel_build.sh

./cygwin_cibuildwheel_build.sh

#deactivate- LocalAI - With voice cloning! The reason why I dont like to put my voice over the internet :)

Runs gguf, transformers, diffusers and many more models architectures. Allows to generate Text, Audio, Video, Images. Also with voice cloning capabilities.

Apache v2 | Open source, local, and self-hosted Amazon Echo/Google Home competitive Voice Assistant alternative

- Zonos: eleven labs alternative

Zonos-v0.1 is a leading open-weight text-to-speech model trained on more than 200k hours of varied multilingual speech, delivering expressiveness and quality on par with—or even surpassing—top TTS providers.

Found out about it at https://noted.lol/zonos/