How to create a ChatGPT Clone with Streamlit

For those who want to use the latest OpenAI models but don't want to commit to a monthly subscription, there is an alternative.

You can use an amazing F/OSS Python Streamlit app that communicates with the OpenAI API.

You will need an OpenAI API key to access GPT's ‘black box’ models.

The Openai-Chatbot Project

The project is available on GitHub ✅

You will need an API key from OpenAI to use the project:

- OpenAI API Keys

- Remember that you will be using closed-source models ❎
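Keep the key out of your source code. A common pattern is to read it from the `OPENAI_API_KEY` environment variable, which the official OpenAI Python library also reads by default. This is an assumption, not something taken from the repository: check how openai-chatbot actually expects the key (environment variable, Streamlit secrets, or a UI field). The helper names below are hypothetical.

```python
import os


def load_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Read the API key from an environment variable (assumed name: OPENAI_API_KEY)."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"{var_name} is not set; export it before starting the app.")
    return key


def mask_key(key: str, visible: int = 4) -> str:
    """Hide all but the last `visible` characters so the key is safe to log."""
    if len(key) <= visible:
        return "*" * len(key)
    return "*" * (len(key) - visible) + key[-visible:]
```

For example, on Linux you would run `export OPENAI_API_KEY=...` in the same shell before launching Streamlit, and `mask_key(load_api_key())` would then print only the last four characters.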

Documentation for the OpenAI models used can be found here 👇

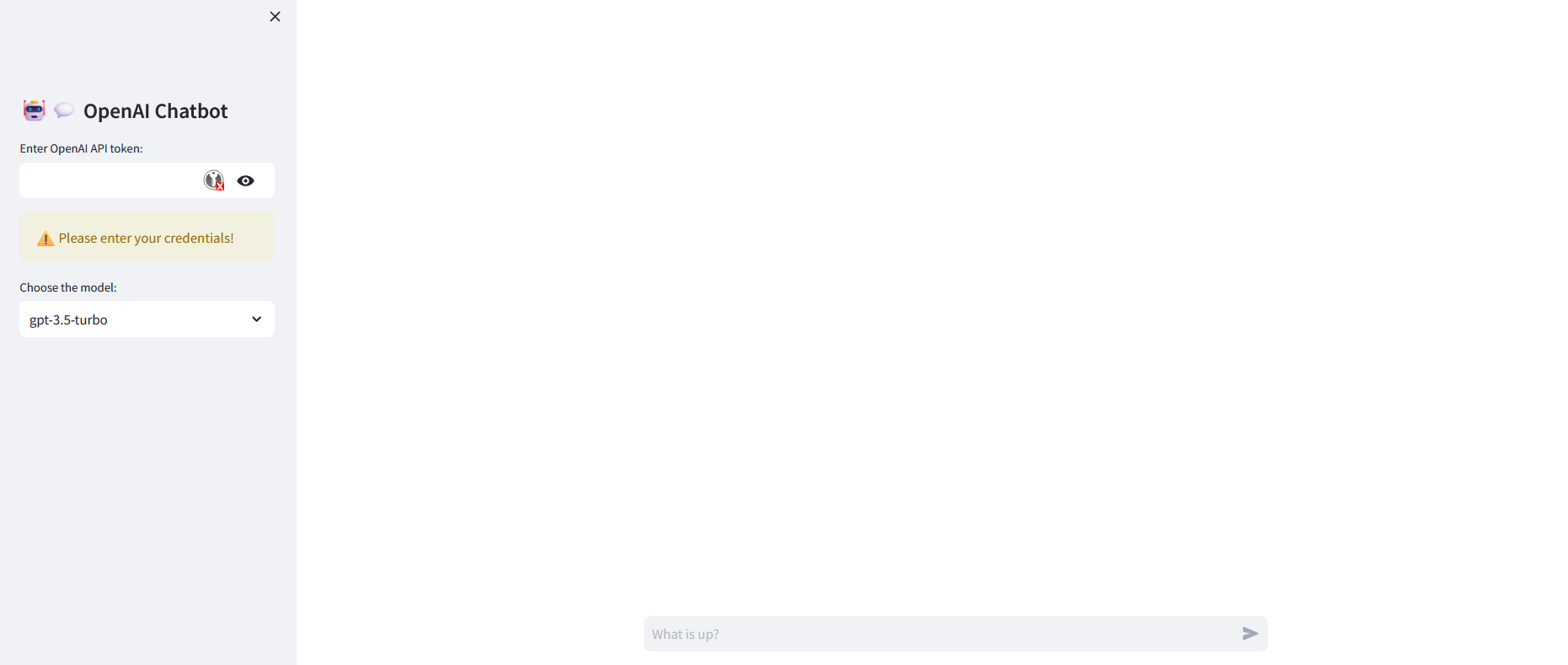

This is what we will get: a web UI to use GPT-3.5, GPT-4, and the latest GPT-4o:

A GPT Clone with Streamlit
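A chat UI like this typically keeps the conversation as a list of `{"role": ..., "content": ...}` messages. It resends the whole list to OpenAI's Chat Completions endpoint on every turn, because the API itself does not remember previous requests. The sketch below only builds that request body with the standard library, so nothing is sent. `build_chat_payload` is a hypothetical helper. The model IDs are OpenAI's public identifiers for the three models above and may change over time.

```python
import json

# OpenAI model identifiers for the models shown in the UI (may change over time)
MODELS = {"GPT-3.5": "gpt-3.5-turbo", "GPT-4": "gpt-4", "GPT-4o": "gpt-4o"}


def build_chat_payload(model_label, history, user_message,
                       system_prompt="You are a helpful assistant."):
    """Build a Chat Completions request body (hypothetical helper).

    history holds earlier turns as {"role": "user" | "assistant", "content": str};
    the full history is resent each turn because the API is stateless.
    """
    messages = [{"role": "system", "content": system_prompt}]
    messages.extend(history)
    messages.append({"role": "user", "content": user_message})
    return {"model": MODELS[model_label], "messages": messages}


history = [
    {"role": "user", "content": "Hi!"},
    {"role": "assistant", "content": "Hello! How can I help?"},
]
payload = build_chat_payload("GPT-4o", history, "Summarize our chat so far.")
print(json.dumps(payload, indent=2))
```

With the pinned `openai==0.28.0` package, a body like this maps onto `openai.ChatCompletion.create(model=..., messages=...)`. In the 1.x SDK the equivalent call is `client.chat.completions.create(...)`.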

If you want to try the project yourself, first:

- Install Python 🐍

- Clone the repository

- Get an F/OSS IDE (optional, but why not?)

- Install the Python dependencies: we will use a venv first, and later create a Docker image for self-hosting the GenAI app.

- (Optional) Deploy to production

```bash
git clone https://github.com/JAlcocerT/openai-chatbot
cd openai-chatbot
#python --version
python -m venv openaichatbot  # create a Python virtual environment
openaichatbot\Scripts\activate  # activate the venv (Windows)
source openaichatbot/bin/activate  # activate the venv (Linux)
#deactivate  # when you are done
```

Once the venv is active, you can install the Python packages as usual, and they will only affect that venv:

```bash
pip install -r requirements.txt  # all at once
#pip list
#pip show streamlit  # check the installed version
```

We are relying on these two Python packages:

```text
streamlit==1.26.0  # https://pypi.org/project/streamlit/#history
openai==0.28.0  # https://pypi.org/project/openai/#history
```

Now, let's create the container image: it will make handling the dependencies simpler.
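Before building the image, you can check that the local venv really matches those pins. This is a minimal stdlib sketch using `importlib.metadata`. The helper names are hypothetical, and the parser only handles simple `name==version` lines like the two above, not the full requirements-file syntax.

```python
import sys
from importlib import metadata


def in_virtualenv() -> bool:
    """True when running inside a venv (its prefix differs from the base interpreter's)."""
    return sys.prefix != sys.base_prefix


def parse_pins(text: str) -> dict:
    """Parse simple 'name==version  # comment' lines into {name: version}."""
    pins = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop the trailing comment
        if "==" in line:
            name, version = line.split("==", 1)
            pins[name.strip().lower()] = version.strip()
    return pins


def check_pins(pins: dict) -> dict:
    """Map each pinned package to (installed_version or None, matches_pin)."""
    report = {}
    for name, wanted in pins.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        report[name] = (installed, installed == wanted)
    return report


requirements = """
streamlit==1.26.0  # https://pypi.org/project/streamlit/#history
openai==0.28.0  # https://pypi.org/project/openai/#history
"""
print("inside venv:", in_virtualenv())
for pkg, (installed, ok) in check_pins(parse_pins(requirements)).items():
    print(f"{pkg}: installed={installed} matches_pin={ok}")
```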

Really, Just Get Docker 🐋👇

You can install Docker on any PC, Mac, or Linux machine, at home or with any cloud provider you wish. It only takes a few moments. On Linux, just run:

```bash
sudo apt-get update && sudo apt-get upgrade && curl -fsSL https://get.docker.com -o get-docker.sh
sudo sh get-docker.sh
```

Then also install Docker Compose with:

```bash
sudo apt install docker-compose -y
```

When the process finishes, you can also use Docker to self-host other services. You should see the installed versions with:

```bash
docker --version
docker-compose --version
#sudo systemctl status docker  # and check the service status
```

We just need this Dockerfile:

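```dockerfile
FROM python:3.11

# Install git (only needed if you clone the repository inside the image)
RUN apt-get update && apt-get install -y git

# Clone the repository
#RUN git clone https://github.com/JAlcocerT/openai-chatbot
#WORKDIR /openai-chatbot

# Copy the project into the image and set up the working directory
COPY . /app
WORKDIR /app

# Install Python requirements
RUN pip install --no-cache-dir -r requirements.txt

# Streamlit's default port
EXPOSE 8501

# Default to a bash shell; the run command or the compose file starts Streamlit
CMD ["/bin/bash"]
```

And now we build our image with: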

```bash
export DOCKER_BUILDKIT=1
docker build --no-cache -t openaichatbot .  #> build_log.txt 2>&1
```

Or, if you prefer, build it with Podman 👇

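You will need to install Podman, a Docker alternative:

```bash
podman build -t openaichatbot .
# The image's default CMD is bash, so pass the Streamlit command explicitly
#podman run -d -p 8501:8501 openaichatbot streamlit run streamlit_app.py
```

Once built, we can run the image from the CLI: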

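```bash
# The image's default CMD is bash, so pass the Streamlit command explicitly
#docker run --name openaichatbot -p 8501:8501 openaichatbot:latest streamlit run streamlit_app.py
#docker exec -it openaichatbot /bin/bash
#sudo docker run -it -p 8502:8501 openaichatbot:latest /bin/bash
```

Self-hosting the Streamlit ChatGPT clone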

Or, with Portainer as a stack:

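```yaml
version: '3'

services:
  streamlit-openaichatbot:
    image: openaichatbot
    container_name: openaichatbot
    volumes:
      # The named volume is populated from the image on first run;
      # later image rebuilds will not update its contents
      - ai_openaichatbot:/app
    working_dir: /app  # Set the working directory to /app
    command: /bin/sh -c "streamlit run streamlit_app.py"
    #command: tail -f /dev/null  #streamlit run appv2.py # tail -f /dev/null
    ports:
      - "8507:8501"

volumes:
  ai_openaichatbot:
```

Now we have our Streamlit UI at localhost:8507. The compose file maps host port 8507 to Streamlit's port 8501; with the `docker run -p 8501:8501` command, it is at localhost:8501 instead.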
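To check from a script (or a cron job) that the UI is actually up, you can poll Streamlit's health endpoint. This sketch assumes the `/_stcore/health` path that recent Streamlit releases expose, and that it answers with HTTP 200 when the server is running. Verify both against your Streamlit version. The function names are hypothetical.

```python
import urllib.request


def health_url(host: str = "localhost", port: int = 8507) -> str:
    """Build the health-check URL; 8507 is the host port from the compose file above."""
    return f"http://{host}:{port}/_stcore/health"


def is_up(url: str, timeout: float = 3.0) -> bool:
    """Return True if the URL answers with HTTP 200, False on any connection error."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:  # URLError, HTTPError and timeouts are all OSError subclasses
        return False


print(health_url(), "->", is_up(health_url()))
```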

For a production deployment, you can put NGINX or a Cloudflare Tunnel in front of the app to get HTTPS.

Conclusion

Now you have your own Streamlit ChatGPT clone to use GPT models and pay for what you use, instead of paying for a full month up front.
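To see whether pay-as-you-go beats a subscription for your usage, multiply your token counts by the per-token prices. API pricing is usually quoted per million tokens, with separate input and output rates. The prices in this sketch are placeholders, not OpenAI's actual rates, so replace them with the current numbers from OpenAI's pricing page. The usage pattern (20 requests a day, about 1,000 input and 500 output tokens each) is also just an example.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_m: float, output_price_per_m: float) -> float:
    """Cost in dollars of one request, given prices per million tokens."""
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000


def monthly_cost(requests_per_day: int, days: int, input_tokens: int, output_tokens: int,
                 input_price_per_m: float, output_price_per_m: float) -> float:
    """Estimated monthly spend for a steady usage pattern."""
    per_request = request_cost(input_tokens, output_tokens,
                               input_price_per_m, output_price_per_m)
    return requests_per_day * days * per_request


# Placeholder prices in USD per 1M tokens: NOT real rates, replace with current pricing
PLACEHOLDER_IN, PLACEHOLDER_OUT = 5.0, 15.0
estimate = monthly_cost(20, 30, 1_000, 500, PLACEHOLDER_IN, PLACEHOLDER_OUT)
print(f"Estimated monthly API cost: ${estimate:.2f}")
```

With these placeholder numbers, each request costs (1,000 × 5 + 500 × 15) / 1,000,000 = $0.0125. Over 600 requests a month, that comes to about $7.50, which you can compare with a monthly subscription fee.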

And if you want to give open LLMs a try, definitely check out:

| Service | Description |
|---|---|
| Ollama | A free and open-source tool to run LLMs locally. |
| Text Generation Web UI | A free and open-source web UI that uses local models to generate text. |
| GPT4All | A robust open-source project supporting interactions with LLMs on local machines, designed for both CPU and GPU hardware. |
| KoboldCPP Project | A project that lets you run GGML and GGUF models locally. |
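Ollama, for example, serves local models over a small REST API on port 11434, so the same messages-based chat pattern carries over to local models. This is a stdlib-only sketch. It assumes Ollama is installed and that a model named `llama3` has been pulled; both are assumptions, not part of the openai-chatbot project. The request is only built here; sending it requires a running Ollama server.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local endpoint


def build_ollama_request(model: str, messages: list) -> urllib.request.Request:
    """Prepare a non-streaming chat request for a local Ollama server."""
    body = json.dumps({"model": model, "messages": messages, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def ask_ollama(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send one user prompt and return the reply text (needs Ollama running)."""
    req = build_ollama_request(model, [{"role": "user", "content": prompt}])
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read())["message"]["content"]


req = build_ollama_request("llama3", [{"role": "user", "content": "Hello!"}])
print(req.get_method(), req.full_url)
# print(ask_ollama("llama3", "Hello!"))  # uncomment once Ollama is running with llama3
```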

Which LLM is giving you the best results with your Prompts?

Similar F/OSS AI Projects using APIs 👇

By using Streamlit, you can create similar projects to chat with your PDFs or get summaries of YouTube videos:

- Using Streamlit + OpenAI API to chat with your Docs

- Summarize YT Videos with Groq

- Through Groq’s API, you can access open models like Llama 3
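Groq's API is OpenAI-compatible, so a chat app like this can often switch providers by changing only the base URL, the key, and the model name. This sketch makes several assumptions that you should check against Groq's documentation: the base URL `https://api.groq.com/openai/v1`, a `GROQ_API_KEY` environment variable, and the model ID `llama3-8b-8192`. It only builds the request; nothing is sent.

```python
import json
import os
import urllib.request

# Assumed OpenAI-compatible base URLs; verify against each provider's documentation
PROVIDERS = {
    "openai": "https://api.openai.com/v1",
    "groq": "https://api.groq.com/openai/v1",
}


def build_chat_request(provider: str, api_key: str, model: str,
                       messages: list) -> urllib.request.Request:
    """Build a POST to {base_url}/chat/completions authenticated with a Bearer token."""
    url = PROVIDERS[provider] + "/chat/completions"
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    headers = {"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"}
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


req = build_chat_request(
    "groq",
    os.environ.get("GROQ_API_KEY", "missing-key"),
    "llama3-8b-8192",  # assumed model ID; check Groq's current model list
    [{"role": "user", "content": "Summarize this transcript in 3 bullet points."}],
)
print(req.full_url)
```

To actually send it, you would call `urllib.request.urlopen(req)` and read `["choices"][0]["message"]["content"]` from the JSON response, as with OpenAI's own endpoint.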

Non-Local Models for Projects ❎👇

You can build an F/OSS project that delegates model execution to a third party, for example:

Groq - An AI service that focuses on providing high-performance, computationally efficient systems for machine learning tasks.

Gemini (Google) - Google's family of multimodal LLMs, which you can use through an API.

Mistral - A company building LLMs, including open-weight models. Besides downloading its open models, you can use them through its API for a variety of applications.

Anthropic (Claude) - Claude is the family of LLMs developed by Anthropic, an AI safety-focused company that aims to build models aligned with human values. You can manage your settings and API keys in their console.

OpenAI - Known for its GPT models and APIs, OpenAI offers a range of AI and machine learning services.

Grok (xAI) - An LLM-based chatbot developed by xAI and integrated into X (formerly Twitter).

Vertex AI (Google) - Another AI service from Google, Vertex AI provides tools for data scientists and developers to build, deploy, and scale AI models.

AWS Bedrock - Amazon's managed service that gives access to foundation models from several providers (such as Anthropic and Mistral) through a single API, with tools to build and scale generative AI applications.

Where can I download the latest F/OSS LLMs? 👇

Ways to Evaluate LLMs 👇

- https://lmsys.org/

- LMSYS Chatbot Arena: Benchmarking LLMs in the Wild: https://chat.lmsys.org/

- https://github.com/ray-project/llmperf - a library for validating and benchmarking LLMs
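Tools like llmperf measure metrics such as latency and throughput against real endpoints. To get a feel for what is being measured, here is a tiny, hypothetical harness. It times any callable that returns text and reports rough latency and words-per-second figures, using words as a crude proxy for tokens. It is not llmperf's API, and `fake_model` is a stand-in for a real API call.

```python
import statistics
import time
from typing import Callable


def benchmark(generate: Callable[[str], str], prompts: list, runs: int = 3) -> dict:
    """Time `generate` over each prompt `runs` times and summarize latency."""
    latencies, words = [], 0
    for _ in range(runs):
        for prompt in prompts:
            start = time.perf_counter()
            reply = generate(prompt)
            latencies.append(time.perf_counter() - start)
            words += len(reply.split())  # crude proxy for generated tokens
    total = sum(latencies)
    return {
        "requests": len(latencies),
        "mean_latency_s": statistics.mean(latencies),
        "median_latency_s": statistics.median(latencies),
        "words_per_s": words / total if total > 0 else float("inf"),
    }


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call: sleeps briefly and echoes the prompt."""
    time.sleep(0.01)
    return "echo " + prompt


print(benchmark(fake_model, ["Hello", "What is Streamlit?"], runs=2))
```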

Other Interesting F/OSS Apps built on top of the OpenAI API

- MetaGPT is a promising framework that you can self-host if you want to explore the potential of LLMs for collaborative software development. It streamlines the process, allows for efficient LLM integration, and facilitates a multi-agent approach for tackling complex projects.

- It focuses on multi-agent collaboration between LLMs: it assigns different roles (product manager, engineer, etc.) to various LLM agents, enabling them to work together on a project.

- You can run MetaGPT fully locally with Ollama

Great for complex, LLM-driven software development.

- Agents Swarm with CrewAI and Docker

- Focuses on building multi-agent systems that leverage LLMs alongside other tools and functionalities. It allows developers to create custom workflows and utilize LLMs for specific tasks within the system.

- Offers a more user-friendly interface and might be easier to pick up for developers with a basic understanding of LLMs and APIs

More flexible, and can be used for a wider range of tasks, including data processing and the automation of repetitive tasks.
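The core idea behind MetaGPT and CrewAI is to give each agent a role and pass work between them. A few lines of plain Python can illustrate it. This is a conceptual sketch only: `Agent`, `run_pipeline` and `echo_llm` are hypothetical names, not either framework's API. The stub LLM just tags its input with the role, so the example runs offline and the hand-off stays visible. In practice you would swap in a real model call.

```python
from dataclasses import dataclass
from typing import Callable, List

LLM = Callable[[str, str], str]  # (system_prompt, user_input) -> reply


@dataclass
class Agent:
    role: str          # e.g. "product manager", "engineer"
    instructions: str  # role-specific system prompt

    def act(self, llm: LLM, task: str) -> str:
        system_prompt = f"You are a {self.role}. {self.instructions}"
        return llm(system_prompt, task)


def run_pipeline(agents: List[Agent], llm: LLM, task: str) -> List[str]:
    """Pass the task through each agent in turn; each output feeds the next agent."""
    outputs = []
    for agent in agents:
        task = agent.act(llm, task)
        outputs.append(task)
    return outputs


def echo_llm(system_prompt: str, user_input: str) -> str:
    """Stub model: prefixes the input with the agent's role."""
    role = system_prompt.split(".")[0].removeprefix("You are a ")
    return f"[{role}] {user_input}"


team = [
    Agent("product manager", "Turn the idea into requirements."),
    Agent("engineer", "Turn the requirements into a plan."),
]
for step in run_pipeline(team, echo_llm, "Build a Streamlit chat app"):
    print(step)
```

This sketch chains agents strictly in sequence. The real frameworks add task planning, tools, memory and shared context on top of this basic hand-off.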

Flowise AI - Drag & drop UI to build your customized LLM flows

- Low-code application building with LLMs. It offers a drag-and-drop interface that allows users to visually construct workflows and applications using LLMs without extensive coding.

- User-friendly interface, ideal for beginners or those with limited coding experience. Supports various LLMs and offers pre-built components for common tasks.

- Less flexibility and customization compared to code-based frameworks like CrewAI or MetaGPT.

DifyAI - an open-source project that functions as a development platform specifically designed for LLMs

- Provides a user-friendly graphical interface to design and manage complex LLM workflows. You can drag and drop components to visually construct the workflow, making it accessible to users even without extensive coding experience.

- RAG Pipeline Integration: Dify integrates well with Retrieval-Augmented Generation (RAG) pipelines. RAG pipelines let your LLM workflows retrieve information from external sources, such as your own documents or search engines, and use it when generating answers, enhancing the capabilities of your LLM applications.
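Here is a deliberately tiny RAG sketch that makes the retrieval step concrete. It scores documents by word overlap with the question, then places the best matches into the prompt that would be sent to the LLM. Real pipelines usually rely on embeddings and a vector store rather than word overlap. The documents and function names are hypothetical, and this is not how Dify implements RAG internally.

```python
import re
from typing import List


def tokenize(text: str) -> set:
    """Lowercase word set; a crude stand-in for embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: List[str], k: int = 2) -> List[str]:
    """Return the k documents sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, context: List[str]) -> str:
    """Augment the question with the retrieved context before calling the LLM."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


docs = [
    "Streamlit apps listen on port 8501 by default.",
    "Docker Compose maps host ports to container ports.",
    "Ollama serves local models on port 11434.",
]
question = "Which port does Streamlit use by default?"
print(build_prompt(question, retrieve(question, docs, k=1)))
```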

What else can I do with AI? ⏬

Some other interesting repositories to look at for ideas:

Remember, OpenAI models like GPT4 are closed source ❎

FAQ

F/OSS IDEs and extensions for AI Projects ⏬

- VSCodium - VS Code without MS branding/telemetry/licensing - MIT License ❤️

- You can support your development with Gen AI thanks to F/OSS extensions

- Continue Dev - Apache License ✅


Similar Free and Open Tools for Generative AI

If you are looking for a UI to interact with open models, you can try any of these:

Open Web UI - previously known as Ollama Web UI

The Text Generation Web UI project, a self-hostable Gradio app to interact with local LLMs