Architecture D&A like a Pro

TL;DR

Improving the d&a career docs.

+++ Smart vs Invest

Intro

You might encounter this kind of architecture in your data analytics journey:

What am I talking about?

Well, you might have heard of several big data tools, even BI tools.

Many names, many concepts.

In reality, what organizations want is the capability to process the enormous amounts of data that are generated today and land in their data systems:

graph LR

subgraph Landing & Bronze

direction LR

L1 --> B1

L2 --> B2

L3 --> B3

end

subgraph Silver

direction LR

B1 --> S1

B2 --> S2

B3 --> S2

end

subgraph Gold

direction LR

S1 --> G1

S2 --> G1

end

Make it compatible with their stack (bronze), apply some kind of enhancement (silver), and then have a layer to base their decisions on (gold).
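A minimal sketch of that bronze, silver, gold flow in plain Python. The order records, field names, and cleaning rules are hypothetical and only there for illustration; on a real platform each step would more likely be a Spark or SQL job.

```python
from collections import defaultdict

# Hypothetical raw records as they land from a source system (all strings, some dirty).
landing = [
    {"order_id": "1", "country": " es ", "amount": "10.50"},
    {"order_id": "2", "country": "PL", "amount": "n/a"},  # unparseable amount
    {"order_id": "3", "country": "es", "amount": "4.50"},
]

def to_bronze(records):
    """Bronze: keep the data as-is, only add ingestion metadata."""
    return [{**r, "_source": "orders_api"} for r in records]

def to_silver(bronze):
    """Silver: cast types, normalise values, skip rows that cannot be parsed."""
    silver = []
    for r in bronze:
        try:
            amount = float(r["amount"])
        except ValueError:
            continue  # a real pipeline would likely quarantine the row instead
        silver.append({
            "order_id": int(r["order_id"]),
            "country": r["country"].strip().upper(),
            "amount": amount,
        })
    return silver

def to_gold(silver):
    """Gold: aggregate into a decision-ready table (revenue per country)."""
    revenue = defaultdict(float)
    for r in silver:
        revenue[r["country"]] += r["amount"]
    return dict(revenue)

gold = to_gold(to_silver(to_bronze(landing)))
print(gold)
```

Keeping each layer as its own function mirrors the diagram: every arrow is a transformation you can test and rerun on its own.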

Yes, I love diagrams

If you are an architect, you should too. See: https://mermaid.js.org/syntax/architecture.html and https://iconify.design/

- An Operational Data Hub (ODH) is a central, integrated data store that serves operational systems and analytical applications with near real-time or real-time data.

It acts as a single source of truth for operational data, consolidating information from various source systems.

Key characteristics of an ODH 📌

- Real-time or near real-time data ingestion and delivery.

- Data integration and transformation.

- Support for operational analytics and decision-making.

- Lower latency compared to traditional data warehouses.

- Data LakeHouses:

Data Modelling

Design a Data Story

https://jalcocert.github.io/JAlcocerT/data-basics-for-data-analytics/#others

A conceptual data model is the highest level, and therefore the least detailed.

A logical data model involves more detailed thinking about the implementation without actually implementing anything.

Finally, the physical data model draws on the requirements from the logical data model to create a real database.
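To make the jump from logical to physical concrete, here is a small sketch. The Customer and Order entities are hypothetical, and SQLite is used only because it ships with Python; the same idea applies to any engine.

```python
import sqlite3
from dataclasses import dataclass

# Logical model: entities, attributes and relationships, no storage details yet.
@dataclass
class Customer:
    customer_id: int
    name: str

@dataclass
class Order:
    order_id: int
    customer_id: int  # each order belongs to one customer
    amount: float

# Physical model: the same design realised as tables in a concrete engine.
DDL = """
CREATE TABLE customer (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL
);
CREATE TABLE customer_order (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer(customer_id),
    amount      REAL NOT NULL
);
"""

conn = sqlite3.connect(":memory:")
conn.executescript(DDL)

c = Customer(1, "Maria")
o = Order(10, c.customer_id, 99.0)
conn.execute("INSERT INTO customer VALUES (?, ?)", (c.customer_id, c.name))
conn.execute(
    "INSERT INTO customer_order VALUES (?, ?, ?)",
    (o.order_id, o.customer_id, o.amount),
)

rows = conn.execute(
    "SELECT c.name, o.amount FROM customer c JOIN customer_order o USING (customer_id)"
).fetchall()
print(rows)
```

The conceptual model would sit one level above this: just "a customer places orders", with no attributes or types at all.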

The Data LifeCycle

Tech

Test_Data = [("James","Sales","NY",85000,34,10000),

("Maria","Finance","CA",90000,24,23000),

("Raman","Finance","CA",99000,40,24000),

("Kumar","Marketing","NY",91000,50,21000)

]

schema = ["employee_name","department","state","salary","age","bonus"]

Test_DF = spark.createDataFrame(data=Test_Data, schema = schema)

Test_DF.printSchema()

#Test_DF.show(5, truncate=False)

Test_DF.limit(5).toPandas().style.hide(axis="index")  # Styler.hide_index() was removed in pandas 2.0

from pyspark.sql import functions as f

(df

.withColumn("update_month_day", f.date_format(f.col("last_updated"), "MM-dd")) # Extract MM-dd

.groupBy("update_month_day")

.agg(f.count("*").alias("record_count"))

.orderBy("update_month_day")

.show())

To work with huge JSON files, consider these tools:

Some TECH/Tools for BIG Data Platforms 📌

- Diagrams: Tools like Mermaid and Excalidraw for visualizing workflows and processes.

- Apache NiFi: Automates and manages data flows between systems, supporting scalable data routing and transformation.

- Apache Iceberg: A table format for large analytic datasets, improving performance and schema handling in big data ecosystems.

- MinIO: Distributed, high-performance object storage, compatible with Amazon S3, commonly used for cloud-based and private storage solutions.

- Boto3: AWS SDK for Python, used for managing AWS services like S3.

- Great Expectations: A Python library for defining data quality checks and validating data integrity.

- Project Nessie: Version control for data lakes, enabling Git-like data management and collaboration.

- Hue: An open-source web interface for interacting with big data systems like Hadoop, simplifying SQL querying and data browsing.

- Argo Workflows: Kubernetes-native workflow engine for orchestrating complex jobs and data pipelines.

- Kubernetes, Helm, HULL: Kubernetes for container orchestration, Helm for packaging applications, and HULL (Helm Uniform Layer Library), a Helm library chart for defining Kubernetes objects through values files.

- Rancher: A platform that simplifies Kubernetes cluster management.

- RabbitMQ: A message broker that enables distributed communication between applications.

- Parquet: Columnar storage format optimized for querying large datasets.

- Avro: A data serialization system with schema evolution capabilities.

- Apache Livy: REST service for remote Spark job execution.

- Pydantic: Data validation and settings management via Python type annotations.

- Celery: Distributed task queue system for managing background processes.

- Trino: Distributed SQL query engine for large-scale data analysis.

- Open Data Hub: Metadata platform for data management and integration.

- CleverCSV: Python tool for working with messy CSV files.

- StringZilla: Library for high-performance string operations across multiple languages.

These tools span data flow automation, big data management, Kubernetes workflows, data validation, and distributed computing.

- Information to insight

- Stakeholder management

- Managing expectations

- Estimating tasks

- How to sell ideas

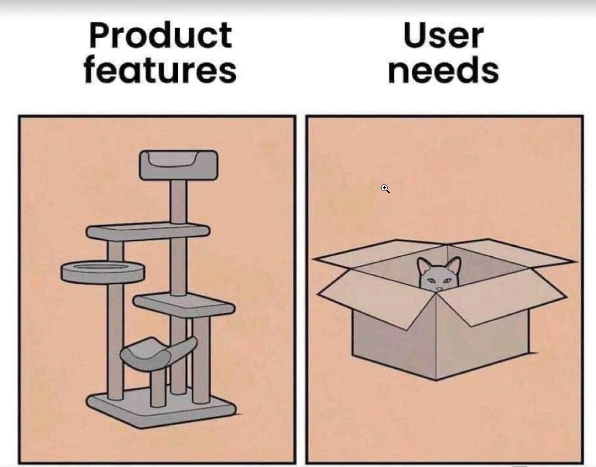

- What are we missing in the product? FOMO, loss >> gain

From the Experience

It’s not all about tech and hard skills.

Whatever industry you work in, make sure to set up and keep improving your workflow for effectiveness:

- What’s going on

- Meeting Scheduler Template

- RCA Template

- MTG Summary Template - What’s your takeaway after the time investment?

For ideas, you can check this weekly planning and then tweak it as per your daily/scrum ceremonies.

Knowing the customer journey, or what the product feels like to use, always helps! The less friction, the more beautiful the funnel you get.

PM Skills

Whenever you are involved in a project, make sure that there are clear:

You might hear about the Eisenhower Matrix as well - A time management tool that helps you prioritize tasks by dividing them into four categories based on urgency and importance.
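As a small illustration, the four quadrants can be written as a function of two flags. The backlog below is hypothetical, and the action labels (do now, schedule, delegate, drop) are the ones commonly attached to each quadrant.

```python
def eisenhower_quadrant(urgent: bool, important: bool) -> str:
    """Map a task to the usual action for its quadrant."""
    if urgent and important:
        return "do now"
    if important:
        return "schedule"
    if urgent:
        return "delegate"
    return "drop"

# Hypothetical backlog: (task, urgent, important)
tasks = [
    ("Fix broken nightly load", True, True),
    ("Design the gold layer model", False, True),
    ("Reply to a meeting poll", True, False),
    ("Reorganise old dashboards", False, False),
]

plan = {name: eisenhower_quadrant(urgent, important) for name, urgent, important in tasks}
for name, action in plan.items():
    print(f"{action:>9}: {name}")
```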

Get ready to organize effective meetings:

There is no good meeting without proper MoM (minutes of meeting) notes and clear action points.

As a D&A Architect, you will also prototype and create mockups.

And as obvious as it might seem, I still hear project managers asking developers for ETAs instead of task durations.
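The difference is easy to show in code: a duration is the effort a task needs, while an ETA is a calendar date that also depends on what is already queued ahead of it. A minimal sketch, assuming a Monday to Friday working week and hypothetical numbers:

```python
from datetime import date, timedelta

def add_working_days(start: date, days: int) -> date:
    """Move forward `days` working days from `start`, skipping Saturdays and Sundays."""
    current = start
    remaining = days
    while remaining > 0:
        current += timedelta(days=1)
        if current.weekday() < 5:  # Monday=0 ... Friday=4
            remaining -= 1
    return current

def eta(today: date, queue_days: int, task_days: int) -> date:
    """The ETA depends on the work already queued ahead of the task, not just its duration."""
    return add_working_days(today, queue_days + task_days)

today = date(2024, 1, 1)  # a Monday, chosen only for the example
task_days = 3             # the duration the developer can actually estimate

eta_if_started_now = eta(today, queue_days=0, task_days=task_days)
eta_behind_other_work = eta(today, queue_days=5, task_days=task_days)
print(eta_if_started_now, eta_behind_other_work)
```

Same task, same duration, two very different ETAs. The developer owns the duration; the priority queue, which sets the ETA, is usually the PM's call.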

If you are a PM, be aware of the Triple Constraint 📌

As a project manager, the variables you can typically “play with” (or, more formally, manage and optimize) are:

- Scope: What needs to be done; the features, functions, and deliverables of the project.

- Resources: The people, equipment, materials, and facilities needed to complete the project.

- Timeline (or Schedule): The duration of the project and the sequence of activities.

- Cost (or Money/Budget): The financial resources allocated to the project.

These variables are usually grouped under the “Project Management Triple Constraint”, with resources and cost treated as one (or sometimes the Quadruple Constraint, which adds quality, or even more if you consider risk, etc.).

The classic “triple constraint” usually focuses on scope, time, and cost, with quality being an outcome influenced by how you balance these three.

What’s your goal then? Knowing three, get the last one right?

In essence, yes, particularly when changes or trade-offs are necessary. Your primary goal as a project manager is to deliver the project successfully, meeting the defined objectives and stakeholder expectations.

This usually means:

- Delivering the agreed-on scope: Ensuring all required features and functionalities are present.

- Within the allocated budget: Not overspending.

- On schedule: Finishing by the agreed-upon deadline.

- To the required quality standards: The deliverables are fit for purpose and meet expectations.

The “knowing 3 get the last one right” concept comes into play during trade-offs. If one variable changes, it almost always impacts at least one of the others. For example:

- If scope increases (you want more features), you’ll likely need more time, more resources, and/or more money.

- If the timeline shortens (you need it faster), you’ll likely need more resources (e.g., more people working in parallel, possibly leading to higher costs) or you’ll have to reduce the scope.

- If the budget is cut, you might have to reduce scope, extend the timeline, or reduce the quality (e.g., using cheaper materials, less experienced staff).

A PM’s job is to manage these interdependencies, communicate the impacts of changes to stakeholders, and make informed decisions (or help stakeholders make them) to keep the project on track for success.

It’s about finding the optimal balance among these constraints to achieve the project’s goals.

Decision Making

SWOT analysis, satisficing, and cost-benefit analysis (CBA) are fundamental, widely used, and simple frameworks for decision-making.

They each offer a distinct approach and are valuable tools in various contexts.

SWOT vs Satisficing vs CBA 📌

SWOT Analysis:

- Strengths: Its simplicity and broad applicability are major strengths. It provides a structured way to think about both internal capabilities and external factors. It’s a great starting point for strategic planning and can be used for individual decisions as well as organizational ones. The visual representation (often a 2x2 matrix) makes it easy to understand and communicate.

- Weaknesses: SWOT analysis can be subjective and doesn’t inherently provide a way to weigh the different factors. It can also be static, offering a snapshot in time rather than a dynamic view. Without further analysis or prioritization, it might not lead to clear action steps.

- Overall: A valuable initial assessment tool. It helps frame the decision and identify key considerations. However, it often needs to be followed up with more detailed analysis and prioritization techniques.

Satisficing Model:

- Strengths: This model acknowledges the reality of bounded rationality – that decision-makers have limited time, information, and cognitive resources. It’s a practical approach when speed and efficiency are crucial, or when the cost of finding the absolute best solution outweighs the potential benefits. It can prevent “analysis paralysis.”

- Weaknesses: Satisficing might lead to suboptimal outcomes if the minimum criteria are set too low or if potentially much better options are overlooked. It relies heavily on the decision-maker’s judgment in defining “satisfactory.” In complex or high-stakes decisions, settling for the first acceptable option could have significant negative consequences.

- Overall: A realistic and often necessary approach in many situations. However, it’s important to be mindful of the potential trade-offs and to ensure that the “minimum criteria” are thoughtfully considered, especially for important decisions.
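The contrast between satisficing and optimizing can be sketched in a few lines. The vendor shortlist and the thresholds are hypothetical.

```python
def satisfice(options, is_good_enough):
    """Return the first option that meets the minimum criteria, or None."""
    for option in options:
        if is_good_enough(option):
            return option
    return None

def optimise(options, score):
    """Evaluate every option and return the best-scoring one."""
    return max(options, key=score)

# Hypothetical vendor shortlist, in the order the options were found.
vendors = [
    {"name": "A", "monthly_cost": 900, "meets_sla": False},
    {"name": "B", "monthly_cost": 700, "meets_sla": True},
    {"name": "C", "monthly_cost": 500, "meets_sla": True},
]

first_acceptable = satisfice(
    vendors, lambda v: v["meets_sla"] and v["monthly_cost"] <= 800
)
cheapest_valid = optimise(
    [v for v in vendors if v["meets_sla"]], lambda v: -v["monthly_cost"]
)
print(first_acceptable["name"], cheapest_valid["name"])
```

Satisficing stops at B and never looks at C. Whether that matters depends on how expensive it is to keep evaluating options, which is exactly the trade-off described above.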

Cost-Benefit Analysis (CBA):

- Strengths: CBA provides a quantitative and systematic way to evaluate options based on their economic impact. It helps to make decisions more objective and transparent. By assigning monetary values to both costs and benefits, it allows for a direct comparison of different alternatives. It’s particularly useful for evaluating investments, projects, and policy decisions.

- Weaknesses: Assigning accurate monetary values to all costs and benefits, especially intangible ones (like environmental impact or social well-being), can be challenging and subjective. The results of a CBA are highly dependent on the assumptions made and the discount rate used. It might also oversimplify complex issues by focusing solely on economic factors.

- Overall: A powerful tool for evaluating the economic implications of decisions. However, it’s crucial to acknowledge its limitations and to consider non-economic factors alongside the quantitative results. Transparency in the assumptions and calculations is essential for its credibility.
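To see how much a CBA depends on the discount rate, here is a small net present value (NPV) sketch. The cash flows and the two rates are made up for the example.

```python
def npv(rate: float, cash_flows: list[float]) -> float:
    """Net present value; cash_flows[0] is today (year 0), later entries are yearly."""
    return sum(cf / (1 + rate) ** year for year, cf in enumerate(cash_flows))

# Hypothetical project: pay 100 now, receive 40 per year for 3 years.
flows = [-100.0, 40.0, 40.0, 40.0]

npv_low_rate = npv(0.05, flows)
npv_high_rate = npv(0.15, flows)

print(f"NPV at  5%: {npv_low_rate:.2f}")
print(f"NPV at 15%: {npv_high_rate:.2f}")
```

The same project looks worth doing at a 5% discount rate and not worth doing at 15%, which is why the assumptions behind a CBA need to be stated openly.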

In Conclusion:

These three frameworks offer different lenses through which to approach decision-making.

They are not mutually exclusive and can even be used in conjunction.

For instance, a SWOT analysis might help identify potential areas for a cost-benefit analysis, or the concept of satisficing might be applied when considering various options identified through a SWOT.

Project Delivery

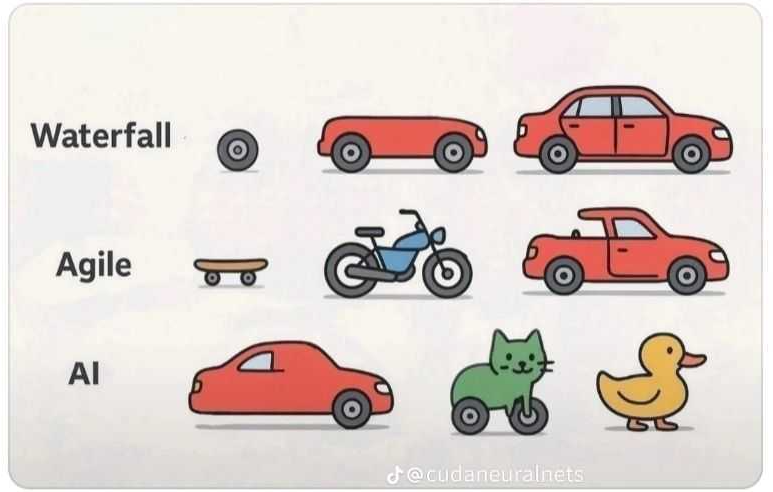

To increase the likelihood of a successful project delivery, having everyone involved on the same page and working on what’s actually valuable is key.

| Artifact | Question / Green Light / Purpose | Who (Primary Responsible) |
|---|---|---|
| Project Charter | “Go/No-Go”: Do we authorize this project and empower the PM? | Project Sponsor, Project Manager (drafts) |
| BRD | “Why are we doing this from a business perspective?” | Business Analyst, Business Stakeholders |
| PRD | “What product features will solve the user/business problem?” | Product Manager / Product Owner |
| FRD | “How will the system specifically behave to deliver these features?” | Business Analyst, Systems Analyst, Technical Lead |
| Project Estimation | “How much time and resources will this project require?” | Project Manager, Development Team, QA Team, Design Team |
| RACI Matrix | “Who is responsible for what specific task/decision?” | Project Manager (with team leads and key stakeholders) |
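A RACI matrix is simple enough to keep as data and validate automatically. The sketch below uses hypothetical tasks and roles and checks the usual rule of thumb: exactly one Accountable and at least one Responsible per task.

```python
# Hypothetical RACI matrix: task -> {person: role}, with roles R, A, C or I.
raci = {
    "Define KPIs": {"BA": "R", "Product Owner": "A", "Data Engineer": "C"},
    "Build silver layer": {"Data Engineer": "R", "Architect": "A", "BA": "I"},
    "Sign off dashboard": {"BA": "R", "Data Engineer": "C"},  # nobody accountable
}

def raci_problems(matrix):
    """Return the tasks that break the rule, with a short reason for each."""
    problems = {}
    for task, roles in matrix.items():
        assigned = list(roles.values())
        issues = []
        if assigned.count("A") != 1:
            issues.append("needs exactly one A")
        if assigned.count("R") < 1:
            issues.append("needs at least one R")
        if issues:
            problems[task] = issues
    return problems

problems = raci_problems(raci)
print(problems)
```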

PPTs

After a successful project, people will want to promote it.

Be ready to create cool presentations.

Remember that nobody is stopping you from using AI to create ppts!

I covered SliDev here, which I got to know here and forked here

To get comfortable with SliDev, you can try:

With Streamlit we can instruct the LLM to build those markdowns for Slidev. Now, even by vibe coding.
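Whatever produces the content (you or an LLM), the target is just a markdown file where slides are separated by `---`. A minimal sketch of the assembly step, with the LLM call left out and hypothetical slide content; the function name and the `title` headmatter field are my own choices for the example.

```python
def build_slidev_deck(title: str, slides: list[tuple[str, list[str]]]) -> str:
    """Build a Slidev-style markdown deck: a headmatter block, then slides separated by '---'."""
    parts = [f"---\ntitle: {title}\n---\n\n# {title}"]
    for heading, bullets in slides:
        body = "\n".join(f"- {bullet}" for bullet in bullets)
        parts.append(f"# {heading}\n\n{body}")
    return "\n\n---\n\n".join(parts) + "\n"

# Hypothetical content; in the workflow described above an LLM would draft it.
deck = build_slidev_deck(
    "Project recap",
    [
        ("Architecture", ["Bronze", "Silver", "Gold"]),
        ("Results", ["Faster reporting", "Single source of truth"]),
    ],
)
print(deck)
```

Writing `deck` to a `slides.md` file is then all that is needed before opening it with Slidev.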

BA & Elicitation

To tell better stories with data, you have to ask the right questions:

- What are the kinds of changes we are doing?

- What are the needs we are trying to satisfy?

- Who are the stakeholders involved?

- What do stakeholders consider to be of value?

- The BRD vs FRD in the SDLC

Remember the power of 80/20

Be ready to sharpen these questions and go from the nice-to-have to the MUST-have features.
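One way to apply 80/20 to a feature list is to rank features by value and keep adding them until you cover 80% of the total. A sketch with hypothetical value scores (for example, collected from stakeholders):

```python
def pareto_cut(values: dict[str, float], share: float = 0.8) -> list[str]:
    """Return the top-valued items whose combined value first reaches `share` of the total."""
    total = sum(values.values())
    selected, running = [], 0.0
    for name, value in sorted(values.items(), key=lambda kv: kv[1], reverse=True):
        if running >= share * total:
            break
        selected.append(name)
        running += value
    return selected

# Hypothetical value scores given by stakeholders to requested features.
feature_value = {
    "sales KPI tile": 50,
    "export to CSV": 35,
    "dark mode": 8,
    "custom fonts": 4,
    "animated logo": 3,
}

must_haves = pareto_cut(feature_value)
print(must_haves)
```

Here two of the five features carry 85% of the value; the rest are the nice-to-haves.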

My Favourite Questions for Requirement Gathering 📌

Remember both functional and non-functional BRs (business requirements):

- What are the key objectives (OKR) and goals of this data product or project?

- Who are the primary end-users or target audience for this data product?

- What are the most important KPIs or metrics that the data product should track and display?

- What level of interactivity and customization is expected in the data product (e.g., dashboards, reports)?

- How will the data product’s model performance be evaluated and validated?

See more questions here

BPMN AND UML

In practice, a Business Analyst might use both UML and BPMN:

- Use BPMN to model end-to-end business processes, identify areas for improvement, and communicate process flows to business stakeholders.

- You will hear about: Microsoft Visio, LucidCharts, Miro, DrawIO,…

- Use UML (especially use case, activity, and sequence diagrams) to delve deeper into the functional requirements of a system that supports those business processes and to communicate system behavior to the development team.

- Lucidchart, Visio, DrawIO, UMLet,…

While BPMN focuses on process flow, UML can complement this by providing different perspectives:

- Activity Diagrams: Can also be used to model process flows, especially when integrating system interactions.

- Use Case Diagrams: Help define the interactions between users and the RPA bots or the systems they interact with. This clarifies the scope and goals of the automation.

- Sequence Diagrams: Can illustrate the interactions between the RPA bot and various systems over time, showing the sequence of actions and data exchange.

SMART vs INVEST

It’s helpful to understand that INVEST and SMART serve different purposes, though they can be related.

INVEST is a set of guidelines to help you create well-formed user stories.

SMART is a set of criteria to help you define objectives or goals.

Here’s a breakdown of when each is most appropriate:

INVEST: For User Stories

INVEST helps ensure that your user stories are functional, valuable, and manageable within an Agile development process.

- Independent: Can be worked on without dependencies.

- Negotiable: Flexible and open to discussion.

- Valuable: Delivers value to the user.

- Estimable: Can be estimated in terms of effort.

- Small: Can be completed within a sprint.

- Testable: Can be verified through testing.

When to Use INVEST:

- When writing and evaluating user stories for Agile development.

- To ensure stories are actionable, manageable, and deliver value.

A successful user story should be a concise and clear description of a feature or functionality from the end-user’s perspective.

It helps the development team understand who will use the feature, what they want to achieve, and why it’s important to them.

More about INVEST 📌

The key components that a successful user story typically contains, often remembered by the acronym INVEST:

I - Independent:

- The story should be self-contained and not heavily dependent on other stories. This allows the team to prioritize and work on stories in a flexible order. Dependencies can lead to delays and complexities in planning and execution.

N - Negotiable:

- The story is a starting point for a conversation, not a contract. The details of the story can be discussed and refined collaboratively between the product owner, development team, and stakeholders during backlog refinement or sprint planning. It leaves room for technical implementation details and alternative approaches.

V - Valuable:

- The story must deliver value to the end-user or the business. It should clearly articulate the benefit or outcome the user will experience by having this feature. This ensures the team is working on things that matter.

E - Estimable:

- The story should be small enough that the development team can estimate the effort required to implement it. This helps with sprint planning and forecasting. If a story is too large or vague, it becomes difficult to estimate accurately.

S - Small:

- The story should be sized appropriately to be completed within a single sprint. Smaller stories allow for faster feedback loops, easier tracking of progress, and reduced risk. Large epics should be broken down into smaller, manageable stories.

T - Testable:

- The story should be written in a way that makes it clear how to verify if it has been implemented correctly. This often involves defining clear acceptance criteria that can be used to create test cases.

Beyond the INVEST criteria, a well-formed user story often follows a simple template:

As a [type of user], I want [some goal] so that [some reason/benefit].

Example:

- As a registered customer, I want to be able to reset my password so that I can regain access to my account if I forget it.

SMART: For Objectives and Goals

SMART helps you define clear, measurable, and achievable objectives, whether for a project, a sprint, or even a user story’s acceptance criteria.

- Specific: Clearly defined.

- Measurable: Progress can be tracked.

- Achievable: Realistic and attainable.

- Relevant: Aligned with overall goals.

- Time-bound: Has a defined timeframe.

When to Use SMART:

- When defining project goals, sprint goals, or any objectives you want to track.

- When writing acceptance criteria for a user story. (This is a very common and effective use of SMART in Agile.)

- When setting performance targets or any other kind of goal.

How They Relate | While INVEST and SMART are distinct, they can work together 📌

- You use INVEST to write a good user story.

- You use SMART to write the acceptance criteria for that user story. The acceptance criteria are the specific, measurable conditions that must be met to say the user story is complete, and those should be SMART.

In the context of user stories:

- You wouldn’t say “a user story should be SMART” in place of “a user story should be INVEST.”

- Instead, you’d say, “The acceptance criteria for a user story should be SMART.”

In addition to the core structure and INVEST principles, a successful user story often benefits from:

Clear Acceptance Criteria: These are specific, measurable, achievable, relevant, and time-bound (SMART) conditions that define when the story is considered complete and working correctly. They provide clarity for the developers and testers.

- Example Acceptance Criteria for the password reset story:

- Given I am on the “Forgot Password” page.

- When I enter my registered email address and click “Submit”.

- Then I should receive an email with a link to reset my password.

- And the reset password link should be valid for 24 hours.

- And if I enter an invalid email address, I should see an error message.
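Because the criteria are written as Given/When/Then, they translate almost line by line into an executable check. A minimal sketch, where `request_reset` and `link_is_valid` are hypothetical stand-ins for the real application code:

```python
from datetime import datetime, timedelta

RESET_LINK_TTL = timedelta(hours=24)  # taken from the acceptance criteria

def request_reset(email: str, registered: set[str], now: datetime) -> dict:
    """Hypothetical handler: error for unknown emails, otherwise a link with an expiry."""
    if email not in registered:
        return {"ok": False, "error": "Invalid email address"}
    return {"ok": True, "expires_at": now + RESET_LINK_TTL}

def link_is_valid(response: dict, at: datetime) -> bool:
    """A link is valid only if it was issued and has not expired yet."""
    return response["ok"] and at < response["expires_at"]

# Given a registered user on the "Forgot Password" page
registered = {"maria@example.com"}
now = datetime(2024, 1, 1, 9, 0)

# When they submit their registered email address
response = request_reset("maria@example.com", registered, now)

# Then a reset link is issued and stays valid for 24 hours
assert response["ok"]
assert link_is_valid(response, now + timedelta(hours=23, minutes=59))
assert not link_is_valid(response, now + timedelta(hours=24))

# And an invalid email address produces an error message
invalid = request_reset("nobody@example.com", registered, now)
assert invalid["error"] == "Invalid email address"
```

If a criterion cannot be turned into a check like this, it is usually not specific or measurable enough yet.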


Context and Background: Briefly providing context or background information can help the team understand the user’s needs and the overall purpose of the feature.

Prioritization: While not part of the story itself, clearly prioritizing user stories helps the team understand which ones to work on first based on business value and urgency.

By including these elements, a user story becomes a powerful tool for communication, collaboration, and ensuring that the development team is building the right product for the right users.

These are very helpful on end-to-end projects, where we go from raw data modelling to BI solutions:

You can also keep a list of QQ handy to enable others to perform their job.

JIRA Creation

What’s the best way to create tasks for Jira?

Make sure to provide:

- Context

- Action/Task

- Success Criteria / Definition of Done

Conclusions

The Information Workflow

Have you ever felt that nobody has doubts during syncs, but then everyone leaves with a very different understanding of the same topic?

That’s why information is so crucial.

It matters everywhere: from how you handle meetings, to how you write designs, to how you ask things of others.

Effective Meetings

WorkFlow for Effectiveness

FAQ

D&A Use Case

Avro vs Parquet vs Delta Lake | Data Storage for Big data 📌

Apache Avro, Apache Parquet, and Delta Lake are all data storage formats used in big data ecosystems, but they serve different purposes and have different capabilities.

Delta Lake is built on top of Parquet, adding a transaction layer that provides significant advantages.

Avro vs. Parquet

Avro and Parquet are file formats, and the key difference lies in their data organization.

Avro is a row-oriented format, meaning it stores data record by record. It’s excellent for data serialization and is often used for data exchange between different systems, like in streaming pipelines. Avro has strong support for schema evolution, allowing you to add or remove fields without rewriting the entire dataset, which is a major advantage for evolving data pipelines.

Parquet is a column-oriented format. Instead of storing data row-by-row, it groups data by column. This is highly efficient for analytical and OLAP (Online Analytical Processing) workloads, as it allows query engines to read only the specific columns needed for a query, drastically reducing I/O operations and improving query performance. Parquet also achieves better compression due to the similarity of data within each column.
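The difference in layout can be imitated with plain Python structures. This is only a stand-in for the idea, not the real Avro or Parquet file formats; the records reuse a few rows from the test data earlier in this post.

```python
# The same three records in two layouts (plain Python stand-ins, not real file formats).
rows = [
    {"employee_name": "James", "department": "Sales", "salary": 85000},
    {"employee_name": "Maria", "department": "Finance", "salary": 90000},
    {"employee_name": "Raman", "department": "Finance", "salary": 99000},
]

# Row-oriented (Avro-like): each record is stored together, so appending one is cheap.
row_store = [tuple(r.values()) for r in rows]

# Column-oriented (Parquet-like): each column is stored together.
column_store = {key: [r[key] for r in rows] for key in rows[0]}

# An analytical query such as AVG(salary) touches a single column in the columnar layout...
avg_salary_columnar = sum(column_store["salary"]) / len(column_store["salary"])

# ...but has to walk every full record in the row layout.
avg_salary_rows = sum(record[2] for record in row_store) / len(row_store)

# Values within one column look alike, which is what makes column compression effective.
print(column_store["department"])
print(avg_salary_columnar, avg_salary_rows)
```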

Avro/Parquet vs. Delta Lake

Delta Lake is not just a file format; it’s an open-source storage layer that sits on top of Parquet files. It combines the benefits of Parquet’s columnar storage with an ACID (Atomicity, Consistency, Isolation, Durability) transaction log.

- ACID Transactions: Delta Lake provides ACID compliance, which means you can perform reliable data modifications like UPDATE, DELETE, and MERGE operations on your data lake. These are difficult or impossible to do efficiently with raw Parquet or Avro files.

- Time Travel: Because Delta Lake maintains a transaction log of all changes, you can query older versions of a table by a specific timestamp or version number. This is invaluable for auditing, reproducing experiments, or recovering from data errors.

- Schema Evolution and Enforcement: While Avro has good schema evolution, Delta Lake offers more advanced features. It can prevent writes that don’t match the schema, helping to maintain data quality.

- Performance Optimization: Delta Lake’s transaction log contains metadata about the underlying Parquet files, allowing it to perform optimizations like file skipping and z-ordering (co-locating related data in the same set of files), which further boosts query performance.
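To get an intuition for how a transaction log enables time travel, here is a toy model in plain Python. It is not the Delta Lake API; the class, method, and file names are hypothetical and only mimic the idea of add/remove actions recorded per version.

```python
class VersionedTable:
    """Toy append-only log: every commit records which data files were added or removed."""

    def __init__(self):
        self.log = []  # one entry per committed version

    def commit(self, add=(), remove=()):
        """Append a new version to the log and return its version number."""
        self.log.append({"add": set(add), "remove": set(remove)})
        return len(self.log) - 1

    def files_at(self, version=None):
        """Replay the log up to `version` (latest if None) to find the live files."""
        if version is None:
            version = len(self.log) - 1
        live = set()
        for entry in self.log[: version + 1]:
            live -= entry["remove"]
            live |= entry["add"]
        return live

table = VersionedTable()
v0 = table.commit(add=["part-000.parquet"])
v1 = table.commit(add=["part-001.parquet"])
# An UPDATE rewrites a file: the old one is logically removed and a new one is added.
v2 = table.commit(add=["part-002.parquet"], remove=["part-000.parquet"])

latest = table.files_at()
as_of_v1 = table.files_at(v1)
print(sorted(latest), sorted(as_of_v1))
```

Old files are only removed logically, so reading the table "as of" an earlier version is just a matter of replaying fewer log entries.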

| Feature | Avro | Parquet | Delta Lake |
|---|---|---|---|
| Data Organization | Row-oriented | Column-oriented | Columnar (via Parquet) |
| Primary Use Case | Streaming, data exchange | Analytical queries (OLAP) | Data lakes, ACID transactions, data warehousing |
| ACID Transactions | No | No | Yes |
| Time Travel | No | No | Yes |
| Schema Evolution | Good | Limited | Excellent (with enforcement) |
| Performance | Fast writes, slower reads for analytics | Fast reads for analytics, slower writes | Very fast reads with optimizations, reliable writes |

Storage Location

You can store files in all three formats on HDFS (Hadoop Distributed File System) or other storage systems.

Avro and Parquet are fundamental file formats in the Hadoop ecosystem and are natively supported by HDFS. They are often used as the primary storage format for data lakes.

Delta Lake tables are essentially a collection of Parquet files and a transaction log (JSON files). This structure is designed to be compatible with distributed file systems like HDFS and cloud-based object stores such as Amazon S3, Google Cloud Storage, and Azure Data Lake Storage. The distributed nature of these storage systems is what allows Delta Lake to scale effectively for large datasets.

More T-Shaped Skills

There are two kinds of knowledge you can have:

- Declarative - To know that something is possible

- Procedural - To know how to do something

A T-shaped individual has 📌

- A deep specialization in one area (the vertical bar of the “T”).

- A broad understanding across multiple related disciplines (the horizontal bar of the “T”).

Here’s how declarative and procedural knowledge fit into this framework:

The Vertical Bar (Deep Specialization)

The vertical bar of the T is where a person’s deep expertise lies. This depth requires a strong blend of both declarative and procedural knowledge, often with a heavy emphasis on the latter.

- Deep Declarative Knowledge: Within their specialization, a T-shaped individual possesses a vast amount of declarative knowledge. They know the foundational theories, the history, the best practices, the common pitfalls, and the nuances of their field. For a software engineer, this would be a deep understanding of data structures, algorithms, system architecture patterns, and the specific APIs of their chosen framework.

- Deep Procedural Knowledge: This is where the “mastery” comes in. They have spent countless hours applying their declarative knowledge through practice. They can do the work efficiently, effectively, and with high quality. They know the shortcuts, the debugging techniques, the refactoring patterns, and how to optimize for performance. For a software engineer, this means they can write clean, efficient code, design robust systems, and troubleshoot complex issues quickly. Their procedural knowledge becomes almost intuitive.

In essence, the vertical bar is where deep declarative knowledge is applied and transformed into highly effective procedural knowledge.

The Horizontal Bar (Broad Understanding)

The horizontal bar represents the breadth of knowledge across various related fields. This broad understanding is primarily driven by declarative knowledge, but it also involves a foundational level of procedural awareness.

- Broad Declarative Knowledge: This is about “knowing what other disciplines do” and “knowing why they do it.”

- For a software engineer, this might mean understanding the basics of UX design principles (knowing what good design looks like and why it’s important for usability), marketing strategies (knowing what marketing does and why it matters for product adoption), or business operations (knowing what the company’s business model is and why certain features are prioritized). They don’t need to be able to do UX design, marketing, or business operations at an expert level, but they understand the concepts and how these areas interact with their own.

- Foundational Procedural Awareness: While not deep, the horizontal bar also implies an appreciation for the how in other areas. The T-shaped individual might not do the work, but they understand the general processes, challenges, and tools involved. This helps them communicate effectively and empathize with colleagues in different roles. For example, a developer might not be a UX designer, but they understand the basic steps of user research and wireframing, which helps them collaborate better with the design team.

The horizontal bar facilitates communication, collaboration, and problem-solving across disciplines, largely by providing a shared declarative vocabulary and an appreciation for different procedural approaches.

The Relationship Summarized:

- Declarative knowledge forms the foundation for both the vertical (deep specialization) and horizontal (broad understanding) bars. You can’t truly do something well without first understanding the underlying what and why.

- Procedural knowledge represents the application and mastery of that declarative knowledge. It’s what allows a T-shaped individual to be highly effective within their specialization (vertical bar) and to understand the practicalities and challenges of related fields (horizontal bar).

- The “T” shape itself highlights the interplay: A strong vertical bar (deep procedural skill rooted in deep declarative knowledge) makes you an expert. A broad horizontal bar (broad declarative knowledge and procedural awareness) makes you a great collaborator, innovator, and adaptable problem-solver.

Essentially, T-shaped individuals leverage their deep procedural knowledge to excel in their primary role, while using their broad declarative knowledge to connect with, understand, and contribute effectively to other parts of the project or organization.

Diagrams and Retros

- In addition to my unconditional love for mermaidJS and diagrams in general…

I recommend having a look at:

You can also render mermaidJS and plotly graphs with your aissistant, via a Streamlit web app.

- I was recently impressed by ChartJS / ApexCharts, which can be rendered from Python as well!

Virtual whiteboard for sketching hand-drawn like diagrams

- Looking back and assessing what worked and what did not can be insightful for deciding where to go next.