DevOps Tools

TL;DR

You might come to this post because of self-hosting and containers, or because you are a systems engineer.

And you might stay once you realize how much you can do with a proper DevOps setup in your homelab.

Intro

Why DevOps?

Because we want to move fast from idea to development, and then ship to production for our users.

Containers are one of the tools that you can use within DevOps (Development and Operations).

ARM with GitHub Actions?

Yes: https://github.blog/2024-06-03-arm64-on-github-actions-powering-faster-more-efficient-build-systems/

- Create a manifest list that references the per-architecture images:

sudo docker manifest create your_username/trip_planner:latest \
  your_username/trip_planner:latest-arm32 your_username/trip_planner:latest-arm64

- Annotate the manifest list to specify the platform for each image:

sudo docker manifest annotate your_username/trip_planner:latest your_username/trip_planner:latest-arm32 --os linux --arch arm --variant v7

sudo docker manifest annotate your_username/trip_planner:latest your_username/trip_planner:latest-arm64 --os linux --arch arm64 --variant v8

Containers

As you know, they are an amazing technology to pack apps so that they work reliably in any computer.

Some people think that Docker = containers, when in fact containers are the broader category: Docker, Podman…
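Because Docker and Podman expose largely compatible command lines, a script does not have to hard-code either one. A minimal sketch, assuming a POSIX shell; the function name `detect_engine` is made up for illustration:

```sh
#!/bin/sh
# Print the first container engine found on PATH, or "none".
detect_engine() {
  for engine in docker podman; do
    if command -v "$engine" >/dev/null 2>&1; then
      printf '%s\n' "$engine"
      return 0
    fi
  done
  printf 'none\n'
  return 1
}

# Fall back to a warning instead of aborting when nothing is installed.
ENGINE=$(detect_engine) || echo "No container engine found" >&2
echo "Container engine: $ENGINE"
```

With an engine present, the rest of a script can call `"$ENGINE" run --rm hello-world` and work with either tool.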

Cruise (MIT licensed) is a TUI (Terminal User Interface) for interacting with Docker. Built with Go and Bubbletea, it offers a keyboard-first way to manage containers, images, volumes, networks, logs and more, all from your terminal.

CRON jobs via UI: https://docs.linuxserver.io/images/docker-healthchecks/

Crontab for Docker - https://github.com/mcuadros/ofelia

I love to use containers for self-hosting.

They allow us to package complete applications, which makes deploying to other servers almost a copy-and-paste process.

How to Setup Docker? 📌

echo "Updating system and installing required packages..." && \

sudo apt-get update && \

sudo apt-get upgrade -y && \

echo "Downloading Docker installation script..." && \

sudo curl -fsSL https://get.docker.com -o get-docker.sh && \

sudo sh get-docker.sh && \

echo "Docker installed successfully. Checking Docker version..." && \

sudo docker version && \

echo "Testing Docker installation with 'hello-world' image..." && \

sudo docker run hello-world && \

echo "Installing Docker Compose..." && \

sudo apt install docker-compose -y && \

echo "Docker Compose installed successfully. Checking version..." && \

sudo docker-compose --version && \

echo "Checking status of Docker service..." && \

sudo systemctl status docker | grep "Active" && \

sudo docker run -d -p 8000:8000 -p 9000:9000 --name=portainer --restart=always -v /var/run/docker.sock:/var/run/docker.sock -v portainer_data:/data portainer/portainer-ce

How to Setup Podman? 📌

echo "Updating system and installing required packages..." && \

sudo apt-get update && \

sudo apt-get upgrade -y && \

echo "Installing Podman..." && \

sudo apt-get -y install podman && \

echo "Podman installed successfully. Checking Podman version..." && \

podman --version && \

echo "Testing Podman installation with 'hello-world' image..." && \

sudo podman run hello-world && \

echo "Installing Podman Compose..." && \

sudo apt-get -y install podman-compose && \

echo "Podman Compose installed successfully. Checking version..." && \

podman-compose --version && \

echo "Enabling the Podman API socket (Podman has no daemon to check)..." && \

sudo systemctl enable --now podman.socket && \

sudo podman run -d -p 8000:8000 -p 9000:9000 --name=portainer --restart=always --privileged -v /run/podman/podman.sock:/var/run/docker.sock -v portainer_data:/data docker.io/portainer/portainer-ce

CI/CD Tools

There are many tools to do CI/CD, like Jenkins or GitHub Actions.

GitHub Actions CI/CD examples, for Python projects but not only:

- https://github.com/JAlcocerT/Py_Trip_Planner

- https://github.com/JAlcocerT/Slider-Crank

- Also working with HUGO SSG to build this website: https://github.com/JAlcocerT/JAlcocerT/actions

GitHub CI/CD

GitHub Actions, a CI/CD framework provided by GitHub, allows you to automate the build, test, and deployment processes for your software projects.

You can do cool things, like:

graph LR

A[Code Commit] --> B{GitHub Actions Workflow};

B --> C{Build/Test/Deploy};

C --> D(GitHub Pages Deployment);

C --> E(Push Container to GHCR);

C --> F(Push Container to Docker Hub);

C --> G(Many things, like test that there are no broken links)

How to use GitHub Actions CI/CD?

With GitHub Actions, you can define workflows to automate tasks such as compiling code, running tests, performing code analysis, and generating build artifacts.

That’s exactly what we are doing when pushing static web pages or built containers.

About GH Actions 📌

It supports various programming languages and offers great flexibility in customizing your CI pipeline.

In addition to Continuous Integration (CI), GitHub Actions also supports Continuous Deployment (CD) by integrating with different deployment strategies and environments.

This allows you to automate the deployment of your application to various platforms and hosting services, such as cloud providers or dedicated servers, ensuring a seamless release process.

This is how I’ve used GH Actions in my projects:

- Streamlit MultiChat: Chat across LLM Providers with Python and Streamlit

- Trip Planner: Tool to simulate these projects in the browser

- Even the Web3 Astro Website: Use Astro with GitHub Actions to build Websites like a Pro

GitHub workflows can build Docker images automatically when a condition is met (such as a new push).

To start, go to your repository, click on Actions, and then New workflow.

You can select a workflow template that suits your project or follow the steps below to create your own .yml file for CI/CD.

The CI/CD workflow configuration is stored in .github/workflows/ci_cd.yml:

name: CI/CD Pipeline

on:
  push:
    branches:
      - main

jobs:
  build-and-push:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout repository
        uses: actions/checkout@v4

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3

This setup checks out the code and prepares the runner to build your Docker image.

Next, you can push the image to a container registry, such as GitHub Container Registry or DockerHub.

Pushing Containers to GitHub Container Registry

To push the created container to GitHub Container Registry, add the following to your workflow:

      - name: Login to GitHub Container Registry
        uses: docker/login-action@v3
        with:
          registry: ghcr.io
          username: ${{ github.actor }}
          password: ${{ secrets.CICD_TOKEN_For_This_WF }}

      - name: Build and push Docker image
        uses: docker/build-push-action@v5
        with:
          context: .
          push: true
          tags: ghcr.io/your_github_username/your_repo_name:v1.0

You’ll need a CICD_TOKEN_For_This_WF secret so that the workflow can authenticate against the registry.

Obtain this token from GitHub Settings under Developer Settings -> Personal Access Tokens, and give it the write:packages scope.

Next, add this token as a repository secret under Repo Settings -> Secrets & variables -> Actions -> New repository secret.

To make your Docker image publicly accessible, open https://github.com/yourGHuser?tab=packages, select the package, and change its visibility to public in the package settings.

Now, anyone can pull your Docker image using:

docker pull ghcr.io/your_github_username/your_repo_name:v1.0

How to Self-Host Jenkins?

We have all been new to CI/CD at some point.

If you want to tinker with Jenkins, a great way is to Self-Host Jenkins with Docker.

Ansible

It’s all about the playbook!

Ansible and Docker: Working Together

Ansible is an open-source software provisioning, configuration management, and application-deployment tool. It automates repetitive tasks such as:

- Configuration management

- Application deployment

- Intra-service orchestration

Ansible uses a simple syntax written in YAML called playbooks, which allows you to describe automation jobs in a way that approaches plain English.

Docker, on the other hand, is an open-source platform that automates the deployment, scaling, and management of applications. It does this through containerization, which is a lightweight form of virtualization.

Docker allows developers to package an application with all of its dependencies into a standardized unit for software development.

This ensures that the application will run the same, regardless of the environment it is running in.

Ansible and Docker are both powerful technologies that are widely used in the field of software development and operations (DevOps). They serve different but complementary purposes.

These two tools can work together in a few different ways:

- Ansible can manage Docker containers: Ansible can be used to automate the process of managing Docker containers. Ansible has modules like docker_image and docker_container which can be used to download Docker images and to start, stop, and manage the life cycle of Docker containers.

- Ansible can be used to install Docker: You can write an Ansible playbook to automate the process of installing Docker on a server.

- Docker can run Ansible: You can create Docker containers that have Ansible installed. This might be useful if you want to run Ansible commands or playbooks inside a Docker container for testing or isolation.

In a typical DevOps pipeline, Docker can be used for creating a reproducible build and runtime environment for an application, while Ansible can be used to automate the process of deploying that application across various environments.

This combination can help teams create efficient, reliable, and reproducible application deployment pipelines.
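As a sketch of the first pattern (Ansible managing containers), the script below writes a one-task playbook to a temporary directory. It assumes Ansible, the `community.docker` collection, and Docker are available on the machine; the container name `web`, the `nginx:alpine` image, and the port mapping are placeholders, not taken from any of the projects above.

```sh
#!/bin/sh
# Write a minimal playbook that starts one container on the local Docker host.
PLAYBOOK_DIR=$(mktemp -d)
PLAYBOOK="$PLAYBOOK_DIR/run_web.yml"

cat > "$PLAYBOOK" <<'EOF'
- name: Run a demo container
  hosts: localhost
  connection: local
  tasks:
    - name: Start nginx (placeholder image and name)
      community.docker.docker_container:
        name: web
        image: nginx:alpine
        state: started
        published_ports:
          - "8080:80"
EOF

echo "Playbook written to $PLAYBOOK"
# To apply it (requires Ansible, the community.docker collection and Docker):
#   ansible-playbook "$PLAYBOOK"
```

Running the playbook a second time should report no changes, since the task describes a desired state rather than a command to execute.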

apt install ansible

ansible --version

Kubernetes

Kubernetes is often abbreviated as K8s; K3s is a lightweight Kubernetes distribution.

Kubernetes is a tool to deploy, scale, and manage containerized workloads.

It orchestrates the infrastructure to accommodate changes in workload.

The developer just needs to define a YAML file with the desired state of the K8s cluster.

Rancher: k3s

Rancher is an open source container management platform built for organizations that deploy containers in production.

Rancher makes it easy to run Kubernetes everywhere, meet IT requirements, and empower DevOps teams.

Setting up a High-availability K3s Kubernetes Cluster for Rancher.

We just need to have Docker installed and thanks to Rancher we can run our own Kubernetes Cluster.

- https://hub.docker.com/r/rancher/k3s/tags

- https://github.com/rancher/rancher

- https://www.rancher.com/community

Master Node

services:
  k3s:
    image: rancher/k3s
    container_name: k3s
    command: server  # the image defaults to agent mode; run the server (control plane) instead
    privileged: true
    volumes:
      - k3s-server:/var/lib/rancher/k3s
    ports:
      - "6443:6443"
    restart: unless-stopped

volumes:
  k3s-server:

#docker run -d --name k3s --privileged rancher/k3s server

Using kubectl

kubectl is a command-line tool that allows you to run commands against Kubernetes clusters.

It is the primary tool for interacting with and managing Kubernetes clusters, providing a versatile way to handle all aspects of cluster operations.

Common kubectl Commands:

kubectl get pods: Lists all pods in the current namespace.

kubectl create -f <filename>: Creates a resource specified in a YAML or JSON file.

kubectl apply -f <filename>: Applies changes to a resource from a file.

kubectl delete -f <filename>: Deletes a resource specified in a file.

kubectl describe <resource> <name>: Shows detailed information about a specific resource.

kubectl logs <pod_name>: Retrieves logs from a specific pod.

kubectl exec -it <pod_name> -- /bin/bash: Executes a command, like opening a bash shell, in a specific container of a pod.

You will also need to understand the roles of the K8s master (control plane) and worker nodes.
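The kubectl commands listed above are usually chained around a manifest file. A minimal sketch, assuming `kubectl` already points at a working cluster; the pod name `hello` and the `nginx:alpine` image are placeholders:

```sh
#!/bin/sh
# Write a one-container Pod manifest to a temporary file.
MANIFEST=$(mktemp)

cat > "$MANIFEST" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: hello
spec:
  containers:
    - name: web
      image: nginx:alpine
EOF

echo "Manifest written to $MANIFEST"
# With a cluster available, a typical round trip looks like:
#   kubectl apply -f "$MANIFEST"     # create or update the pod
#   kubectl get pods                 # check that it is Running
#   kubectl describe pod hello       # inspect events if it is not
#   kubectl logs hello               # read the container output
#   kubectl delete -f "$MANIFEST"    # clean up
```

The kubectl calls are left as comments so that the script itself only writes a file and can be run safely on a machine without a cluster.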

Monitoring

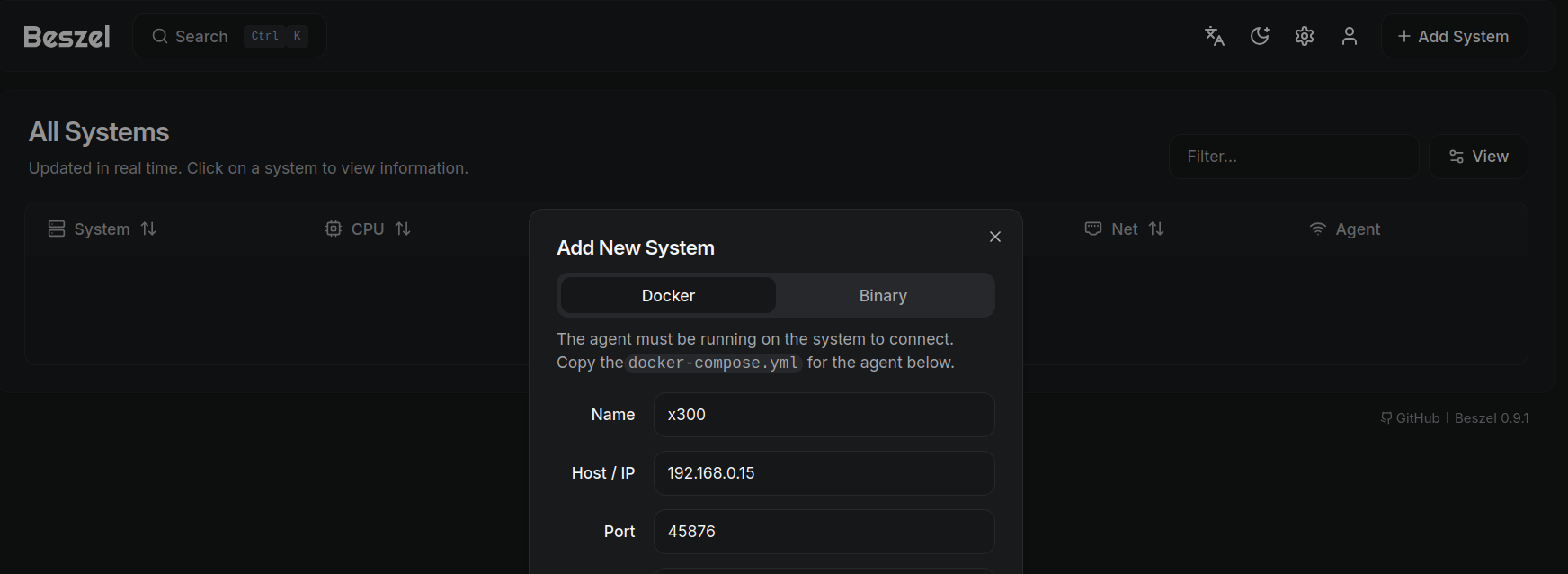

You can try with a custom Grafana dashboard or with Beszel:

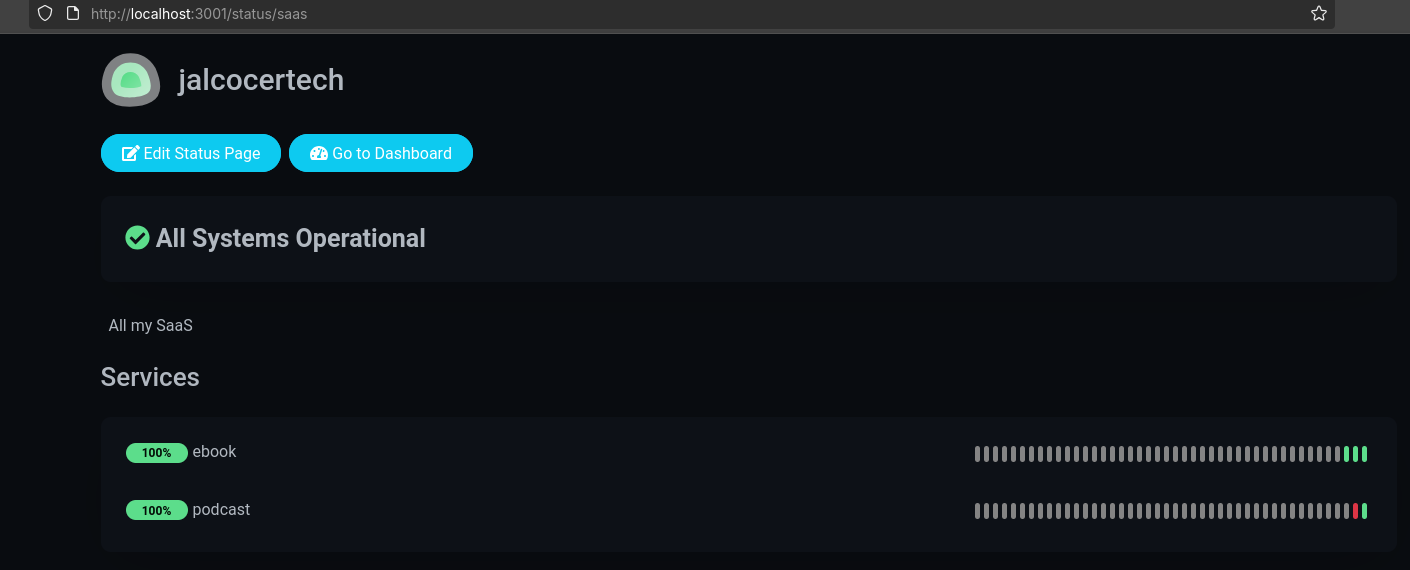

Or with Uptime Kuma:

Be aware of the HTTP status codes: https://it-tools.tech/http-status-codes

With Uptime Kuma, you can quickly get uptime pages for your services:

Uptime Kuma status page example: https://status.tromsite.com/status

People build businesses around this kind of thing: https://status.perplexity.com/, see https://instatus.com/pricing

If you want to go deeper with Uptime Kuma, see the API: https://uptime-kuma-api.readthedocs.io/en/latest/
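The HTTP status codes mentioned above fall into five classes, decided by the first digit. A small shell sketch of that mapping; the function name `classify_status` is made up for illustration:

```sh
#!/bin/sh
# Map an HTTP status code to its class, based on the first digit.
classify_status() {
  case "$1" in
    1[0-9][0-9]) echo "informational" ;;
    2[0-9][0-9]) echo "success" ;;
    3[0-9][0-9]) echo "redirection" ;;
    4[0-9][0-9]) echo "client error" ;;
    5[0-9][0-9]) echo "server error" ;;
    *)           echo "unknown" ;;
  esac
}

classify_status 200   # prints: success
classify_status 404   # prints: client error
classify_status 503   # prints: server error
```

To check a live service, the code can come from curl, for example `classify_status "$(curl -s -o /dev/null -w '%{http_code}' https://example.com)"`, which is roughly the kind of check an uptime monitor repeats on a schedule.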

Server-less Functions - FaaS

FaaS platforms are designed to run functions on demand: you upload your code as small, stateless functions, which are triggered by specific events or API calls, produce output, and then terminate until called again.

This model eliminates the need to manage underlying servers, handling scaling and resource allocation automatically.

How Serverless Functions Operate

- Deployment: Developer provides a function (code) and configuration, which is stored and managed by the platform.

- Invocation: Functions are executed in response to specific triggers, such as HTTP requests, event streams, file uploads, or database changes.

- Ephemeral Execution: The function runs only as long as needed to produce a result, after which the runtime environment is deallocated or paused.

- Auto-scaling: The platform can spawn multiple instances as needed to handle concurrent events, scaling from zero up to thousands based on demand.

- Statelessness: Each function execution is typically stateless, with state either externalized (e.g., databases, caches) or passed explicitly between invocations.
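The statelessness point can be illustrated without any platform at all. A toy sketch in plain shell, not tied to any FaaS product: the hypothetical `handle_event` function receives everything it needs as an argument, prints a response, and keeps nothing between calls (its body runs in a subshell, so even its variables disappear afterwards).

```sh
#!/bin/sh
# A stateless "function": the output depends only on the event passed in.
# The ( ... ) body runs in a subshell, so no variable survives the call.
handle_event() (
  name=${1:-world}
  printf '{"message": "hello, %s"}\n' "$name"
)

# Each invocation is independent, as if triggered by a separate event.
handle_event "Ada"
handle_event
```

Anything that must survive between invocations, such as a counter or a session, would have to live in an external store, which is the externalized state the list above refers to.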

OpenFunction is conceptually similar to offerings like Cloudflare Workers, AWS Lambda, Google Cloud Functions, and Azure Functions, as all these platforms provide serverless Function-as-a-Service (FaaS) environments where developers focus on deploying code as functions without managing the underlying infrastructure.

- Cloudflare Workers: Primarily focused on edge computing and low-latency execution at global edge locations, whereas OpenFunction is designed for Kubernetes clusters, enabling local or hybrid-cloud deployment flexibility.

- AWS Lambda / Google Cloud Functions / Azure Functions: These mainstream FaaS platforms are tightly integrated with their respective cloud ecosystems (AWS, Google Cloud, Azure), providing seamless access to proprietary cloud services and autoscaling.

- OpenFunction: offers a more cloud-agnostic approach and integrates with multiple runtimes, making it suitable for Kubernetes users who want to avoid vendor lock-in or run their workloads on-premises, in hybrid clouds, or on any public cloud.

| Platform | Deployment Environment | Cloud Vendor Lock-in | Scaling & Events | Integration Focus |
|---|---|---|---|---|
| AWS Lambda | AWS cloud | High | Native | AWS ecosystem |
| Google Cloud Functions | Google Cloud | High | Native | Google services |
| Azure Functions | Azure cloud | High | Native | Azure ecosystem |
| Cloudflare Workers | Cloudflare edge network | Medium | Native | Edge compute/networking |
| OpenFunction | Kubernetes (cloud/on-prem) | Low | Kubernetes (advanced) | Cloud-agnostic, Dapr, K8s APIs |

OpenFunction is a cloud-native open source Function-as-a-Service (FaaS) platform designed to help developers run serverless workloads efficiently on Kubernetes without managing underlying runtime environments or infrastructure.

The platform allows you to focus solely on business logic by submitting your code as functions, and it can handle both synchronous and asynchronous workloads.

- Cloud-agnostic: Works across multiple cloud providers and decouples from proprietary BaaS ecosystems.

- Pluggable architecture: Supports multiple function runtimes, making it flexible to different backend needs.

- Sync and async support: Handles both types of functions and uniquely allows async functions to consume events directly from sources.

- Container image generation: Can build OCI-compliant container images directly from function code.

- Flexible autoscaling: Includes advanced autoscaling, scaling from zero (no usage) to any number of replicas as needed, with specific metrics-driven async autoscaling.

- Dapr integration: Simplifies Backend-as-a-Service (BaaS) integration for both sync and async functions via Dapr.

- Ingress and events management: Uses Kubernetes Gateway API for managing traffic, and provides its own flexible events management framework.

OpenFunction relies on Kubernetes Custom Resource Definitions (CRDs) to manage function lifecycles, making it a native fit for Kubernetes-based environments.

It is also part of the CNCF (Cloud Native Computing Foundation) Cloud Native Landscape as a sandbox project.

The codebase is predominantly written in Go (94.9%), with some Shell, Makefile, and Dockerfile.

OpenFunction is intended for teams or organizations looking to:

- Deploy scalable serverless workloads on Kubernetes.

- Integrate cloud-native function workflows with flexible event-driven patterns.

- Avoid locking into a single cloud BaaS provider.

Cloud Native Function-as-a-Service Platform (CNCF Sandbox Project)

Thanks to DevOps Toolkit for showing this.

FAQ

How to monitor the Status of my Services?

You can get help from Uptime Kuma with Docker.

Transferring Files - What is FTP?

FTP stands for File Transfer Protocol, and an FTP server is a computer or software application that runs the FTP protocol.

Its primary purpose is to facilitate the transfer of files between different computers over a network, such as the internet or a local area network (LAN).

Why FTP?

In essence, FileZilla acts as a bridge between your local computer and remote servers, making it easy to work with files stored on those servers as if they were part of your own file system.

It’s a popular choice for developers, system administrators, and anyone who needs to transfer files to or from remote servers securely and efficiently.

- Sharing Large Files: FTP servers allow you to share really big files that are too large for email or messaging apps.

- Fast and Efficient: They make it easy for multiple people to download the same file at the same time, so you don’t have to send it to each person individually.

- Access Anywhere: Your friends or family can access the files from anywhere with an internet connection. They don’t have to be at your house.

- Backup and Storage: You can use an FTP server to back up important files and keep them safe in case something happens to your computer.

How to use FTP?

- FileZilla is open-source software (GPL), and it has desktop applications available for both Windows and Linux.

FileZilla is similar to a remote folder connection. It allows you to connect to and manage files on remote servers over the internet or a network. Here’s how it works:

Connecting to Remote Servers: FileZilla allows you to connect to various types of remote servers, including FTP (File Transfer Protocol), SFTP (SSH File Transfer Protocol), FTPS (FTP Secure), and more. You provide the server’s address (like a website URL or IP address), your username, and password (if required) to establish a connection.

- http://192.168.3.200:3090/

- user & pass

Remote File Management: Once connected, FileZilla displays the files and directories on the remote server in one pane and your local computer’s files and directories in another pane.

It lets you navigate through the remote server’s file system just like you would on your own computer.

apt update

apt install filezilla

- FTP Servers - Delfer / vsFTP

Other ways - WebDAV and SMB

- Have a look at Nextcloud with Docker

- Samba Server with Docker

- Samba is the de facto open-source implementation of the SMB/CIFS protocol for Unix-like systems, including Linux. You can install and configure Samba on your Linux server to share files and directories with Windows and other SMB clients.

What about Proxmox?

What about OMV?

Open Media Vault - Debian Based NAS

What is LXC?

LXC (LinuX Containers) is an OS-level virtualization technology that allows the creation and running of multiple isolated Linux virtual environments (VE) on a single control host.

How to run Rust w/o OS?

How to develop inside a Docker Container

FAQ

What are K8s PODs?

Master and Nodes with Different CPU archs?

Rancher Alternatives?

What is Kubeflow?

- Kubeflow is the machine learning toolkit for Kubernetes:

Kubeflow is an open-source platform for machine learning and MLOps on Kubernetes.

It was introduced by Google in 2017 and has since grown to include many other contributors and projects.

Kubeflow aims to make deployments of machine learning workflows on Kubernetes simple, portable and scalable.

Kubeflow offers services for creating and managing Jupyter notebooks, TensorFlow training, model serving, and pipelines across different frameworks and infrastructures.

Purpose: Kubeflow is an open-source project designed to make deployments of machine learning (ML) workflows on Kubernetes easier, scalable, and more flexible.

Scope: It encompasses a broader range of ML lifecycle stages, including preparing data, training models, serving models, and managing workflows.

Kubernetes-Based: It’s specifically built for Kubernetes, leveraging its capabilities for managing complex, distributed systems.

Components: Kubeflow includes various components like Pipelines, Katib for hyperparameter tuning, KFServing (since renamed KServe) for model serving, and integration with Jupyter notebooks.

Target Users: It’s more suitable for organizations and teams looking to deploy and manage ML workloads at scale in a Kubernetes environment.

What is MLflow?

Purpose: MLflow is an open-source platform primarily for managing the end-to-end machine learning lifecycle, focusing on tracking experiments, packaging code into reproducible runs, and sharing and deploying models.

Scope: It’s more focused on the experiment tracking, model versioning, and serving aspects of the ML lifecycle.

Platform-Agnostic: MLflow is designed to work across various environments and platforms. It’s not tied to Kubernetes and can run on any system where Python is supported.

Components: Key components of MLflow include MLflow Tracking, MLflow Projects, MLflow Models, and the MLflow Model Registry.

Target Users: It’s suitable for both individual practitioners and teams, facilitating the tracking and sharing of experiments, models, and workflows.

While they serve different purposes, Kubeflow and MLflow can be used together in a larger ML system.

For instance, you might use MLflow to track experiments and manage model versions, and then deploy these models at scale using Kubeflow on a Kubernetes cluster.

Such integration would leverage the strengths of both platforms: MLflow for experiment tracking and Kubeflow for scalable, Kubernetes-based deployment and management of ML workflows.

In summary, while Kubeflow and MLflow are not directly related and serve different aspects of the ML workflow, they can be complementary in a comprehensive ML operations (MLOps) strategy.

Kustomize

- What It Is: Kustomize is a standalone tool to customize Kubernetes objects through a declarative configuration file. It’s also part of kubectl since v1.14.

- Usage in DevOps/MLOps:

- Configuration Management: Manage Kubernetes resource configurations without templating.

- Environment-Specific Adjustments: Customize applications for different environments without altering the base resource definitions.

- Overlay Approach: Overlay different configurations (e.g., patches) over a base configuration, allowing for reusability and simplicity.
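The overlay approach can be sketched as a directory layout. The script below writes a base and a `prod` overlay into a temporary directory; the Deployment name `web`, the `nginx:alpine` image, the `prod` namespace, and the replica count are all placeholders chosen for illustration.

```sh
#!/bin/sh
# Lay out a base plus a "prod" overlay in a temporary directory.
ROOT=$(mktemp -d)
mkdir -p "$ROOT/base" "$ROOT/overlays/prod"

# Base: a plain Deployment with no environment-specific values.
cat > "$ROOT/base/deployment.yaml" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:alpine
EOF

cat > "$ROOT/base/kustomization.yaml" <<'EOF'
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - deployment.yaml
EOF

# Overlay: reuse the base untouched, then adjust namespace and replica count.
cat > "$ROOT/overlays/prod/kustomization.yaml" <<'EOF'
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - ../../base
namespace: prod
replicas:
  - name: web
    count: 3
EOF

echo "Kustomize layout written to $ROOT"
# Render the overlay without applying it:
#   kubectl kustomize "$ROOT/overlays/prod"
# Apply it to the current cluster:
#   kubectl apply -k "$ROOT/overlays/prod"
```

A `dev` or `staging` overlay would be another small directory pointing at the same base, which is where the reusability comes from.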