Flowise AI Embedded and Gitea

TL;DR

I don't quite like that some use cases in n8n require the enterprise version.

So I am putting together a Flowise x Gitea stack that is F/OSS (Apache v2 and MIT).

Intro

Last year I made this video about Flowise:

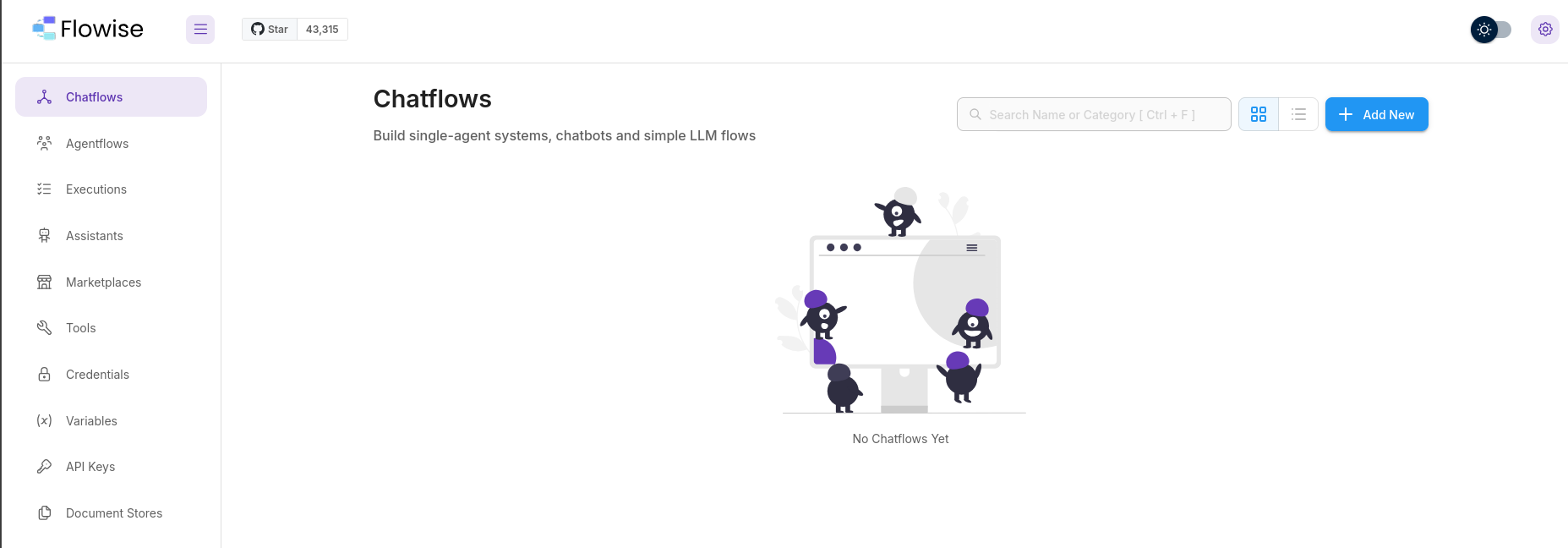

Flowise AI

Most importantly, among similar low-code/no-code tools, FlowiseAI is open source, licensed under Apache v2.

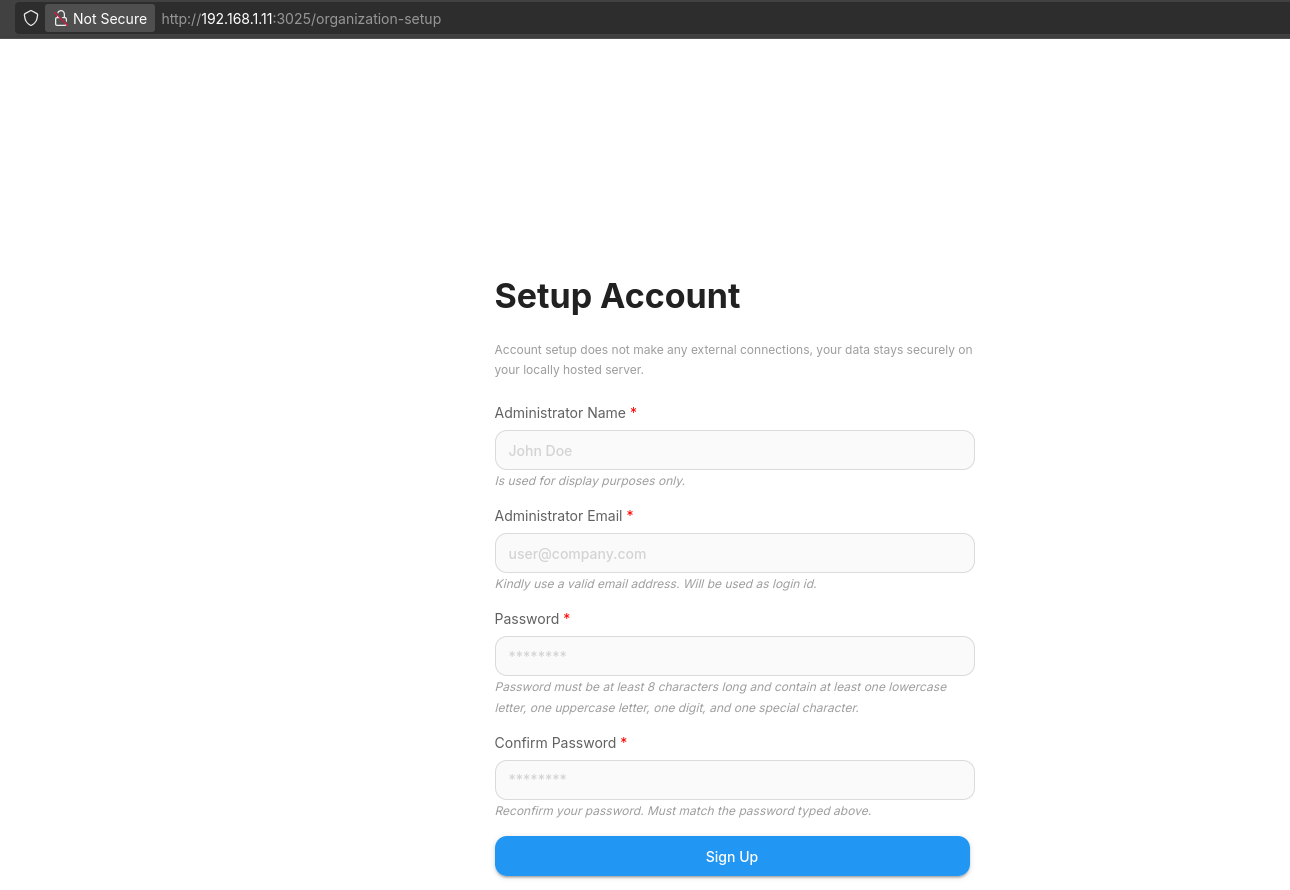

git clone https://github.com/FlowiseAI/Flowise

cd ./Flowise/docker

cp .env.example .env

cat <<EOL >> .env

FLOWISE_USERNAME=teco

FLOWISE_PASSWORD=paco

EOL

sudo docker compose up -d

Flowise API SDK Embed

Extend Flowise and integrate it into your applications using the APIs, SDKs, and embedded chat:

- APIs: https://docs.flowiseai.com/api-reference

- Embedded Widget: https://docs.flowiseai.com/using-flowise/embed

- TypeScript & Python SDKs
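
As a minimal sketch of the API route, the snippet below posts a question to a chatflow's prediction endpoint (`/api/v1/prediction/<chatflow-id>`) using only the Python standard library. The helper names, the base URL, and the chatflow ID are placeholders I made up for illustration; check the API reference above for the exact request and response fields of your Flowise version.

```python
import json
import urllib.request


def build_prediction_request(base_url: str, chatflow_id: str, question: str) -> urllib.request.Request:
    """Build a POST request for the Flowise prediction endpoint."""
    url = f"{base_url.rstrip('/')}/api/v1/prediction/{chatflow_id}"
    body = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(base_url: str, chatflow_id: str, question: str, timeout: float = 60.0) -> dict:
    """Send the question and return the decoded JSON response."""
    request = build_prediction_request(base_url, chatflow_id, question)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Example call (placeholder values, needs a running Flowise instance):
# print(ask("http://localhost:3000", "your-chatflow-id", "Hello!"))
```

If your chatflow is protected with an API key, you would likely need to add an `Authorization` header to the request as well.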

Connect to Flowise

If you want, connect your Ollama instance to Flowise:

cd gitea

sudo docker-compose up -d

#sudo docker ps | grep ollama

docker network connect cloudflared_tunnel gitea #network -> container name

#docker inspect gitea --format '{{json .NetworkSettings.Networks}}' | jq

Or just use third-party LLMs:

- Groq: https://console.groq.com/keys

- Gemini (Google): https://ai.google.dev/gemini-api/docs

- Mistral: https://docs.mistral.ai/api/

- Anthropic (Claude): https://www.anthropic.com/api

- OpenAI: https://platform.openai.com/api-keys

- Grok (xAI): https://x.ai/api

cd gitea

sudo docker-compose up -d

#sudo docker ps | grep flowise

docker network connect cloudflared_tunnel gitea #network -> container name

#docker inspect gitea --format '{{json .NetworkSettings.Networks}}' | jq

What to do with Flowise?

How about adding chatbots to websites using the Embed API?
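
To make that concrete, here is a small Python sketch that renders the `<script>` tag used by the Flowise embed widget, so you can paste the result into a page template. The function name is my own, and the chatflow ID and host are placeholders; the embed docs linked earlier have the full list of `Chatbot.init` options.

```python
import json


def render_embed_snippet(chatflow_id: str, api_host: str) -> str:
    """Return an HTML <script> tag that loads the Flowise chat bubble."""
    # json.dumps quotes the values as JavaScript string literals.
    return (
        '<script type="module">\n'
        '  import Chatbot from "https://cdn.jsdelivr.net/npm/flowise-embed/dist/web.js";\n'
        "  Chatbot.init({\n"
        f"    chatflowid: {json.dumps(chatflow_id)},\n"
        f"    apiHost: {json.dumps(api_host)},\n"
        "  });\n"
        "</script>"
    )


# Example with placeholder values:
# print(render_embed_snippet("your-chatflow-id", "http://localhost:3000"))
```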

FAQ

Flowise x Python SDK

The Flowise SDK for Python provides an easy way to interact with the Flowise API for creating predictions, supporting both streaming and non-streaming responses.

This SDK allows users to create predictions with customizable options, including history, file uploads, and more.
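
A minimal, non-streaming sketch with the SDK could look like the one below. It assumes the `flowise` package is installed (for example with `uv add flowise`); the two helper functions and their names are mine, not part of the SDK, and the base URL and chatflow ID are whatever your own instance uses.

```python
def build_prediction_args(chatflow_id: str, question: str, streaming: bool = False) -> dict:
    """Collect the keyword arguments passed to PredictionData."""
    return {"chatflowId": chatflow_id, "question": question, "streaming": streaming}


def ask_flowise(base_url: str, chatflow_id: str, question: str) -> list:
    """Run a non-streaming prediction and return the responses as a list."""
    # Imported lazily so the helper above works without the SDK installed.
    from flowise import Flowise, PredictionData

    client = Flowise(base_url=base_url)
    completion = client.create_prediction(
        PredictionData(**build_prediction_args(chatflow_id, question))
    )
    # The SDK README iterates over the result, so collect it into a list.
    return list(completion)


# Example call (placeholder values, needs a running Flowise instance):
# print(ask_flowise("http://localhost:3000", "your-chatflow-id", "Hello!"))
```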

git clone https://github.com/HenryHengZJ/flowise-streamlit

cd flowise-streamlit

uv init

uv add -r requirements.txt

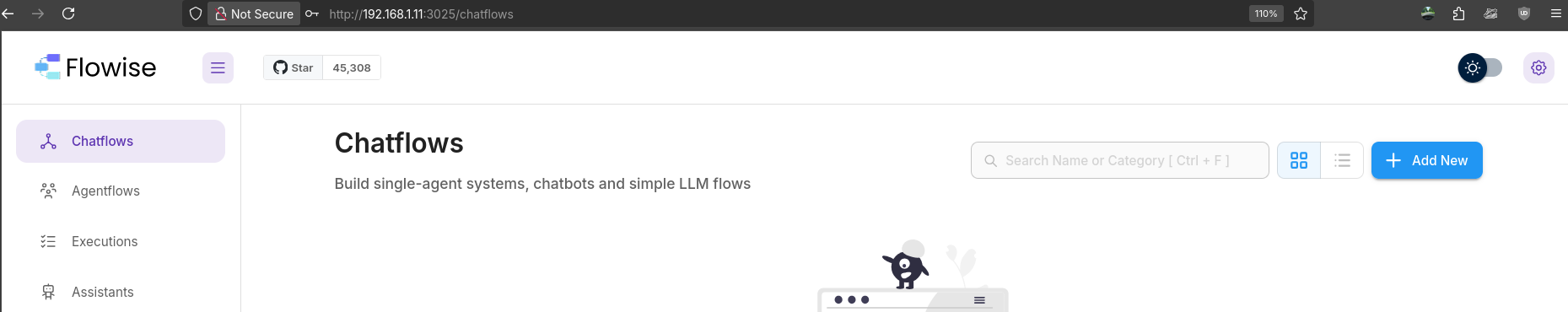

uv sync

You will need a Chatflow:

#cp -n .streamlit/secrets_example.toml .streamlit/secrets.toml && ls -l .streamlit/secrets.toml

cat <<'EOL' >> .streamlit/secrets.toml

APP_URL = "http://192.168.1.11:3025"

FLOW_ID = "123456789"

EOL

uv run streamlit run streamlit_app.py