[AI] LangChain Explained - Providing context to LLMs

Intro

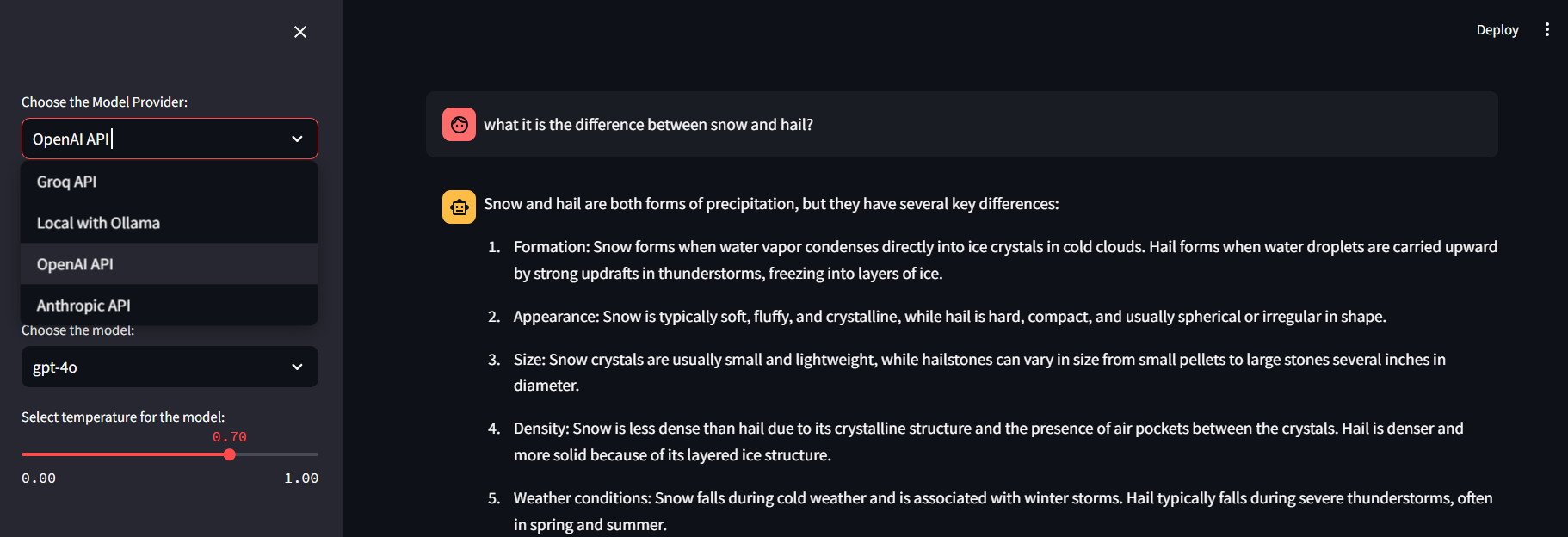

How to create a Streamlit App that uses LangChain to chat with your data.

As commented earlier this year, I was inspired by Alejandro AO and its Python AI projects.

I commented the PDF one here →

But today, it is the time for CSV’s and with Databases!

You guessed it.

We will be using LangChain as RAG framework to provide the CSV context to the LLM.

LangChain is an alternative to PandasAI.

Chat with CSV with LangChain

You will need to have:

- Python installed and a virtual environment ready.

- The OpenAI API keys - https://platform.openai.com/api-keys

In a nutshell, these are the python libraries you will need:

#pip install langchain python-dotenv streamlit openai

#but lets install them relialy with the requirements.txt fileThats why the original Alejandro AO Project has the requirements.txt file specifying the versions.

Its a great practice to install python dependencies with:

pip install -r requirements.txt

#which it is equivalent to this way below

#pip install langchain==0.0.208 python-dotenv==1.0.0 streamlit==1.25.0 openai==0.27.0 transformers==4.28.1 sentence_transformers==2.2.2 torch==2.0.1 tensorflow==2.12.0I just removed most of them as the original file seemed to have many more than required.

Remember to place your OpenAI API key in the .env file.

And run the Streamlit App:

streamlit run main.pyA browser will open with: localhost:8501

Understanding CSV with LangChain App

This is the general workflow of the Python app:

graph TD

A[Load environment variables with dotenv] --> B{Check if OPENAI_API_KEY is set?}

B -- No --> C[Exit program]

B -- Yes --> D[Set Streamlit page config and header]

D --> E[User uploads CSV file]

E --> F{CSV file uploaded?}

F -- No --> G[Wait for CSV upload]

F -- Yes --> H[Create CSV agent using Langchain and OpenAI]

H --> I[User inputs question]

I --> J{Is a question provided?}

J -- No --> K[Wait for question]

J -- Yes --> L[Process the question with agent]

L --> M[Display result using Streamlit]Chat with a DB with LangChain

It’s not that SQL is impossible to learn.

But why not using AI as leverage to help us with D&A tasks?

And it is possible thanks to LangChain with Python and the great work of AlejandroAO

Database Setup

How to Setup MySQL? 📌

The original post from Alejandro allow to select sqlite or Mysql.

I went with mysql as per:

sudo apt update

sudo apt install mysql-server -y

sudo systemctl start mysql

sudo systemctl status mysql

sudo systemctl enable mysql #start at boot

sudo mysql -u root -p#mysql --version #mysql Ver 8.0.40-0ubuntu0.24.04.1 for Linux on x86_64 ((Ubuntu))Now that MYSQL is installed, we can load the sample DB.

Loading the Sample DB - chinook-database 📌

We can get the Chinook DB as example from their releases on Github

CREATE DATABASE chinook;

USE chinook;

SOURCE chinook.sql; --or the name of your SQL file to load the database

SOURCE Chinook_MySql.sql; --or the name of your SQL file to load the database

SHOW TABLES;

SELECT * FROM Album LIMIT 10;

DESCRIBE Artist;

SHOW FULL TABLES;You can see that the DB is loaded and we can see its Schema.

SELECT

TABLE_NAME AS `Table`,

COLUMN_NAME AS `Column`,

DATA_TYPE AS `Data Type`,

CHARACTER_MAXIMUM_LENGTH AS `Max Length`,

IS_NULLABLE AS `Nullable`,

COLUMN_KEY AS `Key`,

COLUMN_DEFAULT AS `Default Value`

FROM

INFORMATION_SCHEMA.COLUMNS

WHERE

TABLE_SCHEMA = 'Chinook'

ORDER BY

TABLE_NAME, ORDINAL_POSITION;Actually, we can make already a MermaidJS ER diagram to represent it:

erDiagram

Album {

int AlbumId PK

varchar Title

int ArtistId FK

}

Artist {

int ArtistId PK

varchar Name

}

Customer {

int CustomerId PK

varchar FirstName

varchar LastName

varchar Company

varchar Address

varchar City

varchar State

varchar Country

varchar PostalCode

varchar Phone

varchar Fax

varchar Email

int SupportRepId FK

}

Employee {

int EmployeeId PK

varchar LastName

varchar FirstName

varchar Title

int ReportsTo FK

datetime BirthDate

datetime HireDate

varchar Address

varchar City

varchar State

varchar Country

varchar PostalCode

varchar Phone

varchar Fax

varchar Email

}

Genre {

int GenreId PK

varchar Name

}

Invoice {

int InvoiceId PK

int CustomerId FK

datetime InvoiceDate

varchar BillingAddress

varchar BillingCity

varchar BillingState

varchar BillingCountry

varchar BillingPostalCode

decimal Total

}

InvoiceLine {

int InvoiceLineId PK

int InvoiceId FK

int TrackId FK

decimal UnitPrice

int Quantity

}

MediaType {

int MediaTypeId PK

varchar Name

}

Playlist {

int PlaylistId PK

varchar Name

}

PlaylistTrack {

int PlaylistId PK

int TrackId PK

}

Track {

int TrackId PK

varchar Name

int AlbumId FK

int MediaTypeId FK

int GenreId FK

varchar Composer

int Milliseconds

int Bytes

decimal UnitPrice

}

%% Relationships

Album ||--o{ Artist : "belongs to"

Track ||--o{ Album : "belongs to"

Track ||--o{ MediaType : "uses"

Track ||--o{ Genre : "categorized as"

PlaylistTrack ||--o{ Playlist : "contains"

PlaylistTrack ||--o{ Track : "contains"

Invoice ||--o{ Customer : "issued to"

InvoiceLine ||--o{ Invoice : "part of"

InvoiceLine ||--o{ Track : "tracks"

Customer ||--o{ Employee : "assisted by"

Employee ||--o{ Employee : "reports to"LangChain Setup to Chat with DB

Make a venv, install the requirements and load the API keys:

python3 -m venv datachat_venv #create the venv Linux

#python -m venv datachat_venv #create the venv W

#datachat_venv\Scripts\activate #activate venv (windows)

source datachat_venv/bin/activate #(linux)

pip install -r requirements.txtLoad your OpenAI API Key 📌

#source .env

#export OPENAI_API_KEY="your-api-key-here"

#set OPENAI_API_KEY=your-api-key-here

#$env:OPENAI_API_KEY="your-api-key-here"

#echo $OPENAI_API_KEYWrapping Up LangChain with DB

I could replicate the working code from AlejandroAO by using:

- The v1.4.5 sample DB version.

- Python 3.12.3 for x86 and venvs

- Im using MySQL 8.0

We can put all of this into containers with this docker-compose:

###docker exec -it mysql_db bash

###docker exec -it mysql_db mysql -u myuser -p chinookConclusions

We have seen how to use LangChain to:

- RAG with CSV

- Query a DB and provide context to the LLM

Chat with PDF

Chat with PDFs Post

Chat with PDFs PostFAQ

More Useful Tools for DBs 📌

ChartDB - Database diagrams editor that allows you to visualize and design your DB with a single query.

Other Tools to Chat over custom data (and locally~) is PrivateGPT

You can also try:

- PandasAI which I covered

- LlamaIndex

What it is LangGraph?

Build resilient language agents as graphs - MIT!

LangGraph is a framework designed for building multi-agent systems, particularly when using large language models (LLMs).