How to use Grok (X) API

May 8, 2025

About Grok

ℹ️ Paywalled: you need to top up your account with credits before you can use the API.

All the learnings are collected:

Flask Intro

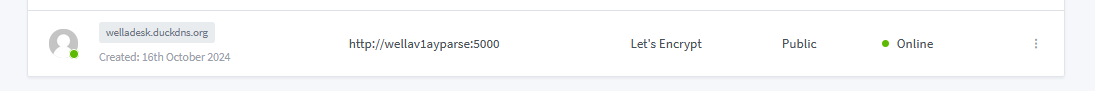

Flask IntroDeployed a Flask WebApp with https and NGINX to Hertzner

Source Code on Github

Using Grok API

After adding credits ($) to the account, first check that the Grok API works:

```sh
curl https://api.x.ai/v1/chat/completions -H "Content-Type: application/json" -H "Authorization: Bearer xai-somecoolapikey" -d '{
  "messages": [
    {
      "role": "system",
      "content": "You are a test assistant."
    },
    {
      "role": "user",
      "content": "Testing. Just say hi and hello world and nothing else."
    }
  ],
  "model": "grok-2-latest",
  "stream": false,
  "temperature": 0
}'
```

A slightly cleaner approach is to keep the key in an environment variable:

```sh
# source .env
export XAI_API_KEY="xai-somecoolapikey"
```

Then execute the query to Grok via a web request:

```sh
curl https://api.x.ai/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $XAI_API_KEY" \
  -d '{
    "messages": [
      {
        "role": "system",
        "content": "You are a test assistant."
      },
      {
        "role": "user",
        "content": "Testing. Which model are you?"
      }
    ],
    "model": "grok-2-latest",
    "stream": false,
    "temperature": 0
  }'
```

Ways to Call Grok

- Via the curl CLI, as done previously.

- With Python, as shown in the sections below.
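For a Python call with no extra packages, the curl request can be mirrored with the standard library. This is a minimal sketch, not an official client: the helper names `build_request`, `extract_reply` and `ask_grok` are hypothetical, and the response shape (`choices[0].message.content`) is assumed to follow the OpenAI-style chat completion format.

```python
import json
import os
import urllib.request

# Same endpoint as the curl example
API_URL = "https://api.x.ai/v1/chat/completions"


def build_request(prompt, api_key, model="grok-2-latest"):
    """Build (but do not send) the same POST request the curl example makes."""
    payload = {
        "messages": [
            {"role": "system", "content": "You are a test assistant."},
            {"role": "user", "content": prompt},
        ],
        "model": model,
        "stream": False,
        "temperature": 0,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def extract_reply(response_json):
    """Return the assistant text, assuming an OpenAI-style response body."""
    return response_json["choices"][0]["message"]["content"]


def ask_grok(prompt):
    """Send the prompt and return the reply (needs network access and credits)."""
    request = build_request(prompt, os.environ["XAI_API_KEY"])
    with urllib.request.urlopen(request, timeout=30) as response:
        return extract_reply(json.load(response))
```

With `XAI_API_KEY` exported as before, `print(ask_grok("Testing. Which model are you?"))` would send the same query as the curl command. Keeping `build_request` separate from the network call makes the payload easy to inspect without spending credits.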

ℹ️ From Europe, I could not use the image generation APIs, although image generation seems to be available via https://x.com/i/grok

Grok via OpenAI API

```python
import os

from openai import OpenAI

# Read the key exported earlier instead of hard-coding it
XAI_API_KEY = os.environ["XAI_API_KEY"]

# Point the OpenAI client at the xAI endpoint
client = OpenAI(
    api_key=XAI_API_KEY,
    base_url="https://api.x.ai/v1",
)

# ...

completion = client.chat.completions.create(
    model="grok-2-latest",
    messages=[
        {
            "role": "system",
            "content": "You are an expert assistant",
        },
        {"role": "user", "content": "What have been the top 5 news in 2025?"},
    ],
    temperature=0.7,
)

# ...
```

Grok via Anthropic

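```python
import os

from anthropic import Anthropic

# Read the key exported earlier instead of hard-coding it
XAI_API_KEY = os.environ["XAI_API_KEY"]

# Point the Anthropic client at the xAI endpoint
client = Anthropic(
    api_key=XAI_API_KEY,
    base_url="https://api.x.ai",
)

# ...

message = client.messages.create(
    model="grok-2-latest",
    max_tokens=689,
    temperature=0,
    messages=[
        {"role": "user", "content": "What are the latest trends in tech?"},
    ],
)
```

Grok via LiteLLM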
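A minimal sketch of the LiteLLM route, under a few assumptions: the `litellm` package is installed, it routes models prefixed with `xai/` to the xAI API, and it picks up the key from the `XAI_API_KEY` environment variable. The helper names `grok_litellm_kwargs` and `ask_grok_litellm` are hypothetical, chosen only for illustration.

```python
def grok_litellm_kwargs(prompt, model="grok-2-latest"):
    """Build the keyword arguments for litellm.completion (hypothetical helper)."""
    return {
        # Assumption: the "xai/" prefix tells LiteLLM to use the xAI provider
        "model": f"xai/{model}",
        "messages": [
            {"role": "system", "content": "You are an expert assistant"},
            {"role": "user", "content": prompt},
        ],
        "temperature": 0,
    }


def ask_grok_litellm(prompt):
    """Send the prompt through LiteLLM (needs network access, credits and XAI_API_KEY)."""
    # Imported lazily so the helper above works without litellm installed
    from litellm import completion  # third-party: pip install litellm

    response = completion(**grok_litellm_kwargs(prompt))
    # LiteLLM returns an OpenAI-style response object
    return response.choices[0].message.content
```

Calling `ask_grok_litellm("What are the latest trends in tech?")` would then behave much like the OpenAI-client example, with LiteLLM handling the provider routing.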

Conclusions

That's it.

Now you have one more LLM to play with via API queries!
