Audio with AI. TTS and Voice Cloning

Intro

We have couple of free to use places to get TTS working out of the box:

- https://platform.openai.com/playground/tts

- https://aistudio.google.com/generate-speech and you could aso via Gemini API Key

- Google AI Studio https://aistudio.google.com/welcome

TTS

Lets see some Text to Speech AI tools!

Including Google and OpenAI solutions

F5-TTS

- Try it live without installing anything via HF and this gradio web-app: https://huggingface.co/spaces/mrfakename/E2-F5-TTS

- https://github.com/SWivid/F5-TTS

Ive tried cloning my own voice:

ffmpeg -i my_wa_audio.ogg my_wa_audio.mp3Once again, thanks to DotCSV I could get to know about this project:

And we can run our voice cloning tool, locally by using PinokioAI:

MIT | AI Browser

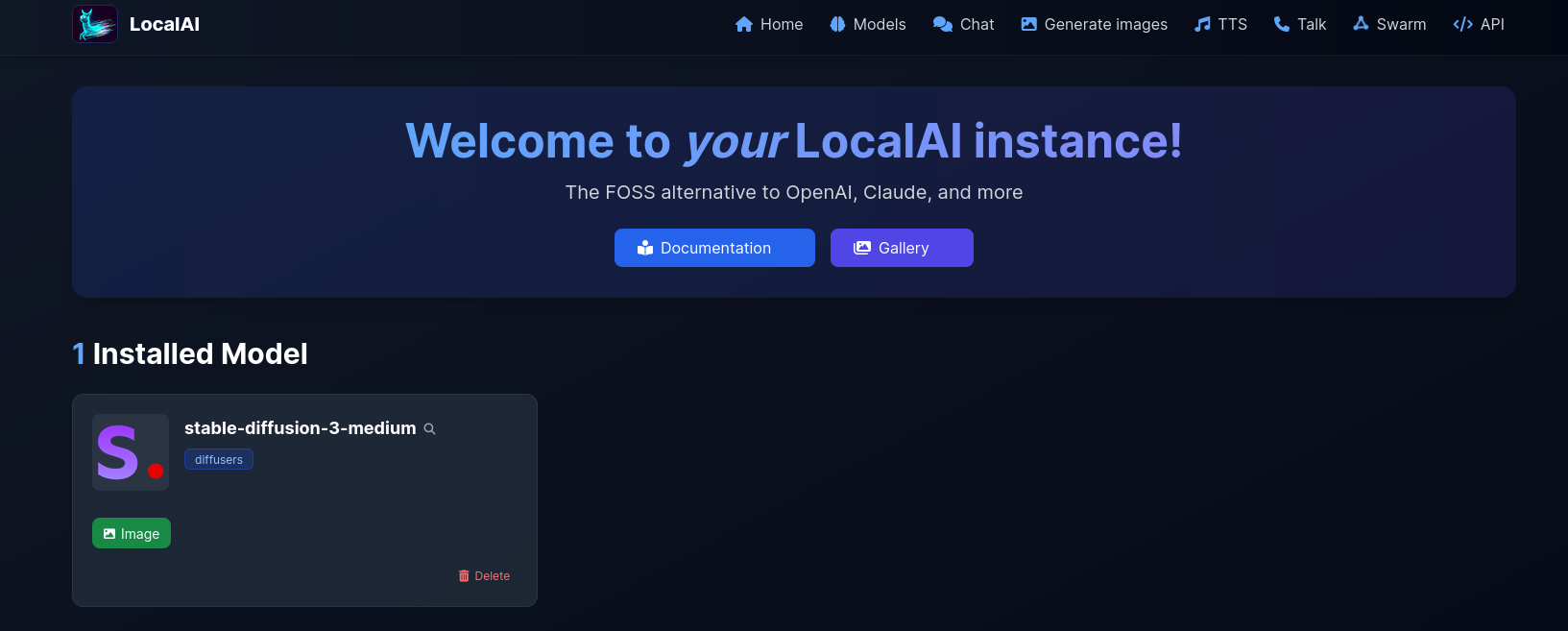

LocalAI TTS

MIT | 🤖 The free, Open Source alternative to OpenAI, Claude and others. Self-hosted and local-first. Drop-in replacement for OpenAI, running on consumer-grade hardware. No GPU required. Runs gguf, transformers, diffusers and many more models architectures. Features: Generate Text, Audio, Video, Images, Voice Cloning, Distributed, P2P inference

- But we came for the text to audio capabilities: https://localai.io/features/text-to-audio/

- And a very interesting API: http://192.168.1.11:8081/swagger/index.html

The UI will be at: http://192.168.0.12:8081/

And it has a swagger API: http://192.168.0.12:8081/swagger/

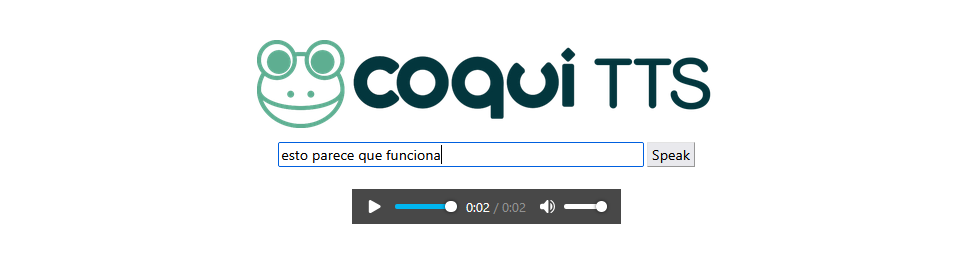

Coqui TTS

Local voice chatbot for engaging conversations, powered by Ollama, Hugging Face Transformers, and Coqui TTS Toolkit

MPL | 🐸💬 - a deep learning toolkit for Text-to-Speech, battle-tested in research and production

Eager to spin a Coqui Text to speech local server?

docker run -d \

--name coquitts \

-p 5002:5002 \

--entrypoint python3 \

ghcr.io/coqui-ai/tts-cpu \

TTS/server/server.py \

--model_name \

tts_models/en/vctk/vitsIt will go with the en/vctk/vits model. But you can change it later on.

The web ui will be at port 5002:

And it works with more language than EN as well!

Deploy with the related docker-compose for CoquiTTS.

Deploy CoquiTTS with Docker | CLI Details 📌

docker exec -it coquitts /bin/bash

docker run --rm -it -p 5002:5002 --entrypoint /bin/bash ghcr.io/coqui-ai/tts-cpu

python3 TTS/server/server.py --list_models #To get the list of available models

python3 TTS/server/server.py --model_name tts_models/en/vctk/vits # To start a server

#python3 TTS/server/server.py --model_name tts_models/es/mai/tacotron2-DDCservices:

tts-cpu:

image: ghcr.io/coqui-ai/tts-cpu

container_name: coquitts

ports:

- "5002:5002"

entrypoint: /bin/bash

tty: true

stdin_open: true

# Optional: Mount a volume to persist data or access local files

# volumes:

# - ./local_data:/dataserver.py is a Flask App btw :)More

RT Voice Cloning

BARK

MIT | 🔊 Text-Prompted Generative Audio Model

See ./Z_YT_Audios folder of this repo and uv run sunoai-bark.py to try it out!

It will download the models locally ~5GB

Important https://pytorch.org/get-started/locally/ get the right PyTorch version!

MIT | The code for the bark-voicecloning model. Training and inference.

OpenVoice

F/OSS Voice Clone

https://pythonawesome.com/clone-a-voice-in-5-seconds-to-generate-arbitrary-speech-in-real-time/

Open Voice + Google Colab

Also locally

XTTS2 Local Voice Clonning

An ui for Coqui TTS

===»> /guide-xtts2-ui

https://www.youtube.com/watch?v=0vGeWA8CSyk

For the dependencies Python < 3.11:

https://github.com/BoltzmannEntropy/xtts2-ui https://github.com/BoltzmannEntropy/xtts2-ui?tab=MIT-1-ov-file#readme

I had to use this as environment…

RVC-Project

Another VC (Voice Clonning) project

https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI?tab=MIT-1-ov-file#readme

https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI/blob/main/docs/en/README.en.md

LocalAI Packaged

Apache v2.0 | Run all your local AI together in one package - Ollama, Supabase, n8n, Open WebUI, and more!

Conclusions

There are many ways to generate AI audio from text.

- Google also offers one from their: https://aistudio.google.com/prompts/new_chat

The Native Speech Generation - https://aistudio.google.com/generate-speech

For which you will need Google API Keys

- And OpenAI also has its own: https://platform.openai.com/playground/tts

Plus, Recently, ive seen this usage of n8n to build AI workflows: using Claude Code to create n8n flows

FAQ

https://github.com/p0p4k/vits2_pytorch https://github.com/p0p4k/vits2_pytorch?tab=MIT-1-ov-file#readme

https://github.com/yl4579/StyleTTS?tab=MIT-1-ov-file#readme

Below are samples for Piper, a fast and local text to speech system. Samples were generated from the first paragraph of the Wikipedia entry for rainbow.

https://github.com/kanttouchthis/text_generation_webui_xtts/?tab=readme-ov-file

With Oobaboga Gradio UI

And its extensions: https://github.com/oobabooga/text-generation-webui-extensions

Voice?

Generally, here you can get many ideas: https://github.com/sindresorhus/awesome-whisper

Also, in HF there are already interesting projects.

ecoute (OpenAI API needed)

Meeper (OpenAI API needed)

Bark

Whisper - https://github.com/openai/whisper

- Free and Open Source Machine Translation API. Self-hosted, offline capable and easy to setup.

Linux Desktop App:

flatpak install flathub net.mkiol.SpeechNote

flatpak run net.mkiol.SpeechNoteT2S/TTS - text to speech tools:

- Elevenlabs - https://elevenlabs.io/pricing

- https://azure.microsoft.com/en-us/products/ai-services/text-to-speech

And now there is even prompt to video at: google veo3

- https://openart.ai/video?ai_model=veo2

- revid.ai

- HeyGen - https://docs.heygen.com/ with avatar videos that are API driven

HeyGen can be combined with MCP - https://github.com/heygen-com/heygen-mcp witht their mcp server

Adding TTS to MultiChat

xTTS2

Text to Speech with xTTS2 UI, which uses the package: https://pypi.org/project/TTS/

Meaning CoquiTTS under the hood

MIT | A User Interface for XTTS-2 Text-Based Voice Cloning using only 10 seconds of speech

The model used

- https://coqui.ai/cpml.txt

- Hardware needed: works with CPU ✅

Installing xTTS2 with Docker. Clone audio locally.

git clone https://github.com/pbanuru/xtts2-ui.git

cd xtts2-ui

python3 -m venv venv

source venv/bin/activateGet the right pytorch installed: https://pytorch.org/get-started/locally/

#pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cpu

pip install -r requirements.txt#version: '3'

services:

audio:

image: python:3.10-slim

container_name: audio

command: tail -f /dev/null

volumes:

- ai_audio:/app

working_dir: /app # Set the working directory to /app

ports:

- "7865:7865"

volumes:

ai_audio:podman exec -it audio /bin/bash

python --versionapt update

apt install git

#git --version

git clone https://github.com/BoltzmannEntropy/xtts2-ui

cd xtts2-ui

#python -m venv venvaudio

#pip3 install torch torchvision torchaudio && pip install -r requirements.txt && pip install --upgrade TTS && streamlit run app2.py

pip3 install torch torchvision torchaudio #https://pytorch.org/get-started/locally/

pip install -r requirements.txt

pip install --upgrade TTS

streamlit run app2.py Streamlit UI

streamlit run app2.py Flask Intro

Flask Introtext_generation_webui_xtts

More

Making these with portainer is always easier:

sudo docker run -d -p 8000:8000 -p 9000:9000 --name=portainer --restart=always -v /var/run/docker.sock:/var/run/docker.sock -v portainer_data:/data portainer/portainer-ce

# docker stop portainer

# docker rm portainer

# docker volume rm portainer_data Flask Intro

Flask IntroClone Audio

Taking some help from yt-dlp: https://github.com/yt-dlp/yt-dlp

Unlicensed| A feature-rich command-line audio/video downloader

yt-dlp -x --audio-format wav "https://www.youtube.com/watch?"

yt-dlp -x --audio-format wav "https://www.youtube.com/watch?v=5Em5McC_ulc"Which I could not get working, nor: https://github.com/ytdl-org/youtube-dl

sudo apt install youtube-dl

youtube-dl -x --audio-format mp3 "https://www.youtube.com/watch?v=5Em5McC_ulc"