How to create music with AI Tools

TL;DR

A few years back I helped a friend create a song and publish it to Spotify.

It was a ton of fun.

But can we now make music with AI?

Intro

This is not a post about Gonic or Sonixd.

Nor is it about how I ended up collaborating on a troll song that got uploaded to Spotify.

You have multiple options to create music with AI, including ready-to-use platforms and Python-based tools for custom projects.

General AI Music Creation Options

Online AI Music Generators: Platforms like Suno, AI Song Maker, MusicCreator AI, Soundraw, and Boomy let you create royalty-free music quickly by choosing styles, moods, and instruments. These require no coding and are ideal for instant music creation.

Collaborative AI Platforms: Some tools leverage crowd-sourced or evolving AI models to generate music that you can customize.

Some of the AI audio tools can be installed locally.

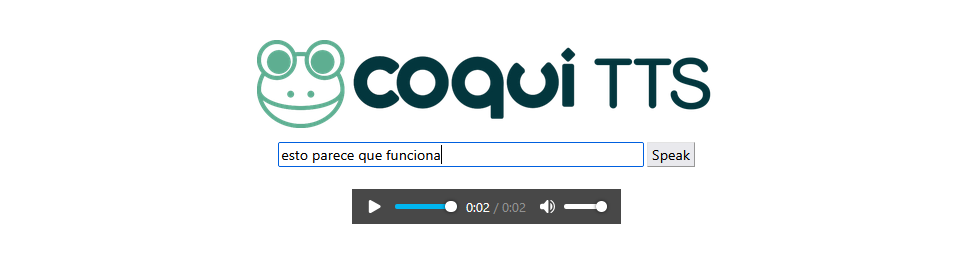

I particularly enjoyed CoquiTTS: https://jalcocert.github.io/JAlcocerT/local-ai-audio/#tts

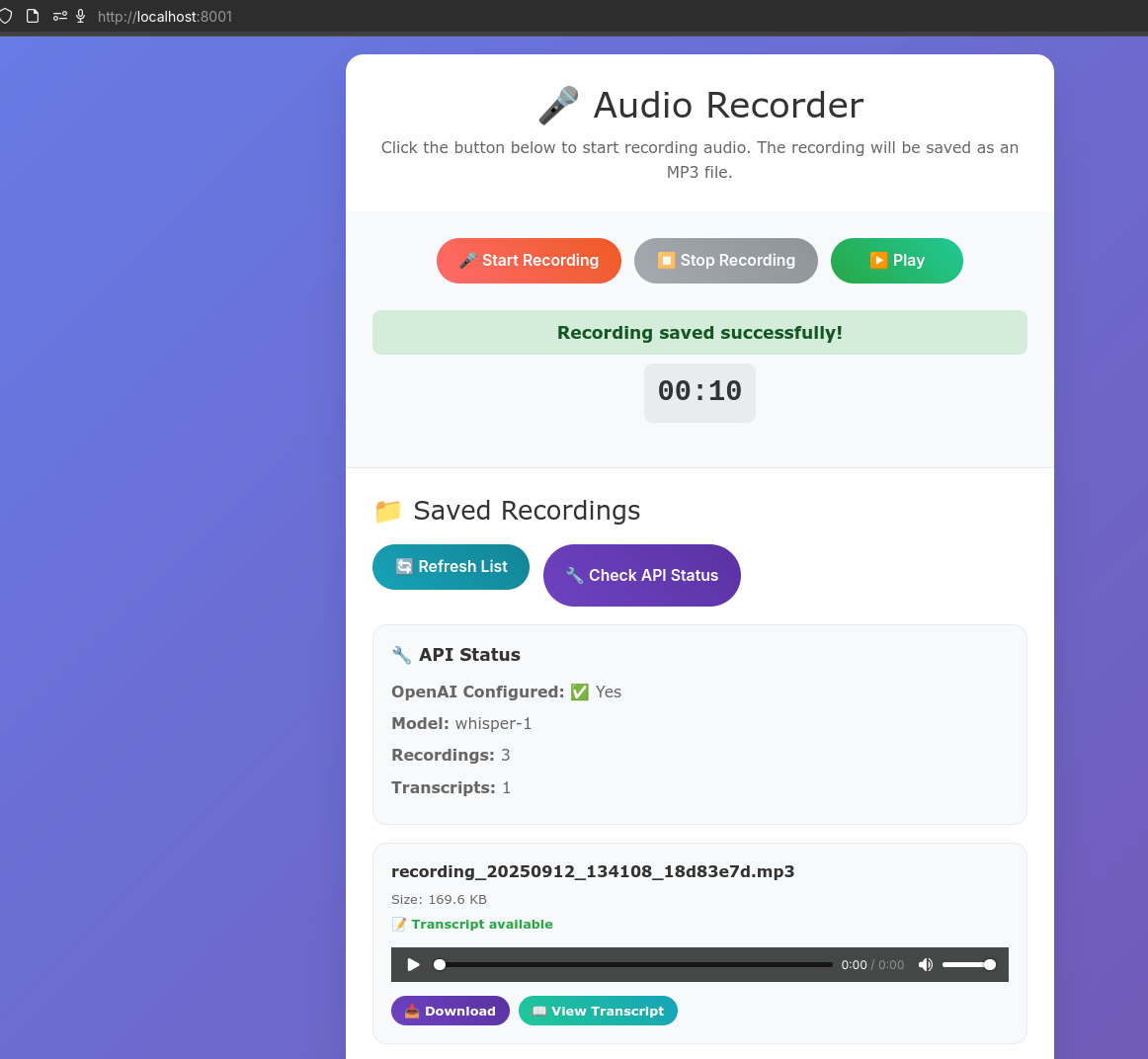

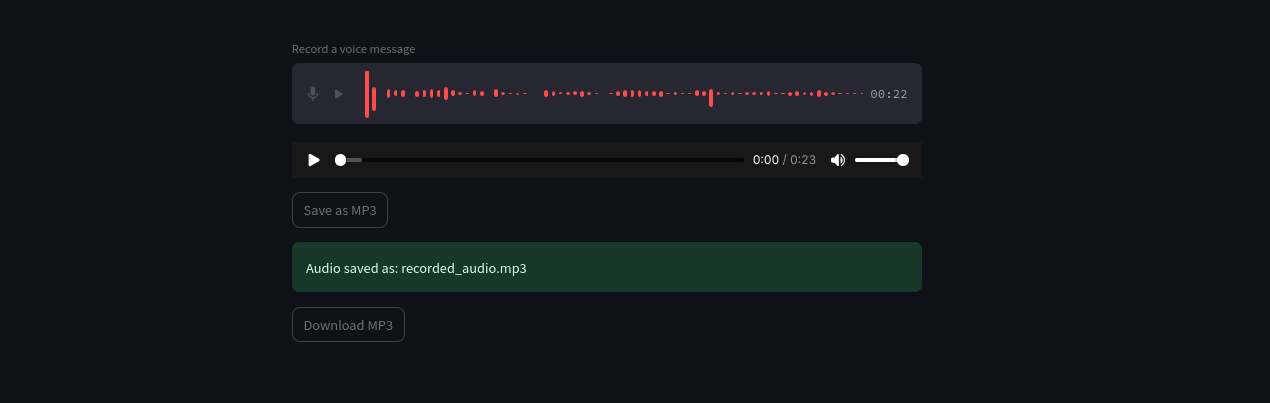

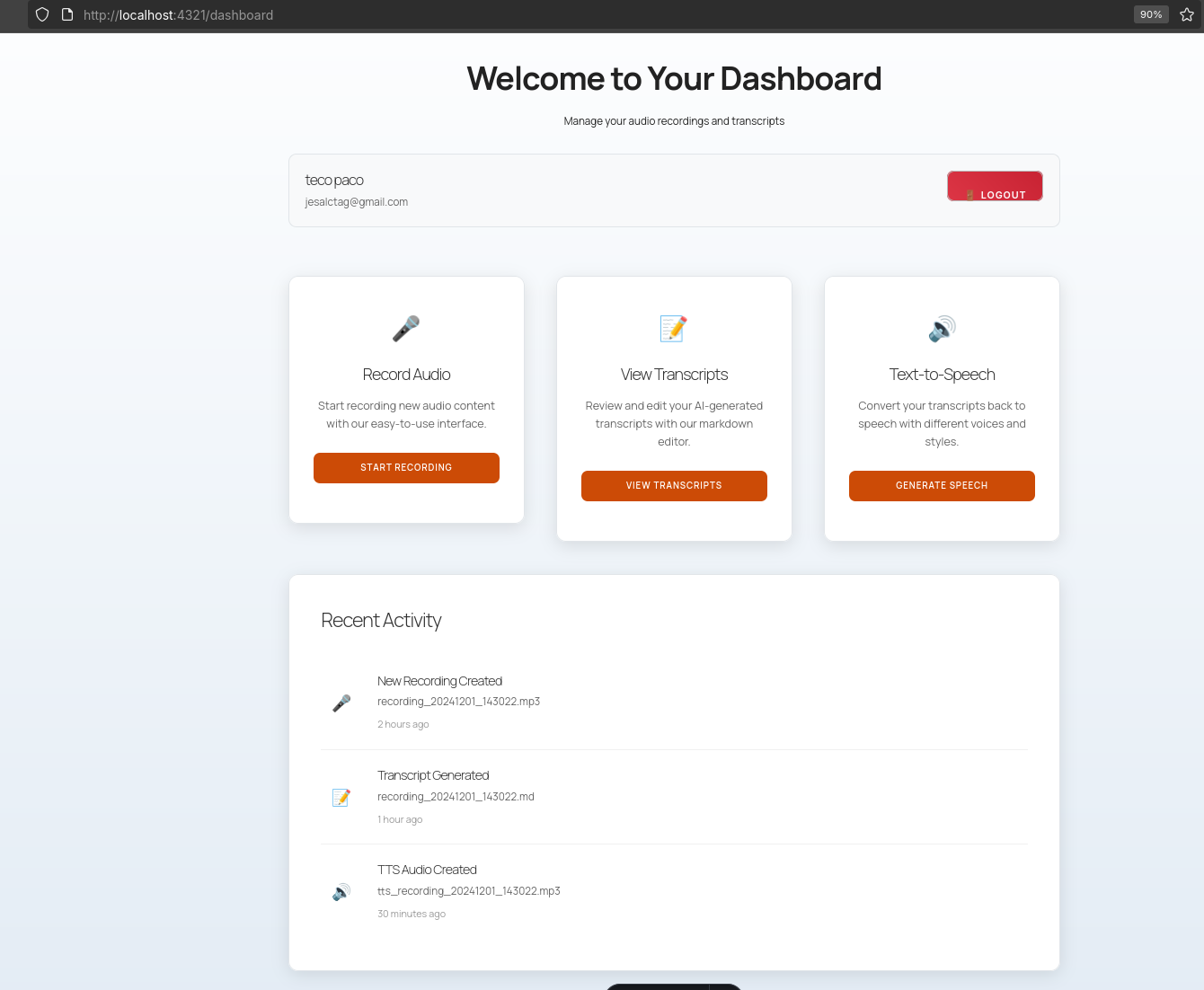

NEW OpenAI TTS and Transcription Project 🐍

Some people mention https://lucida.to/, but I prefer to have my own music server. Spotify is great too!

#snap install spotify

Gonic is one of the options:

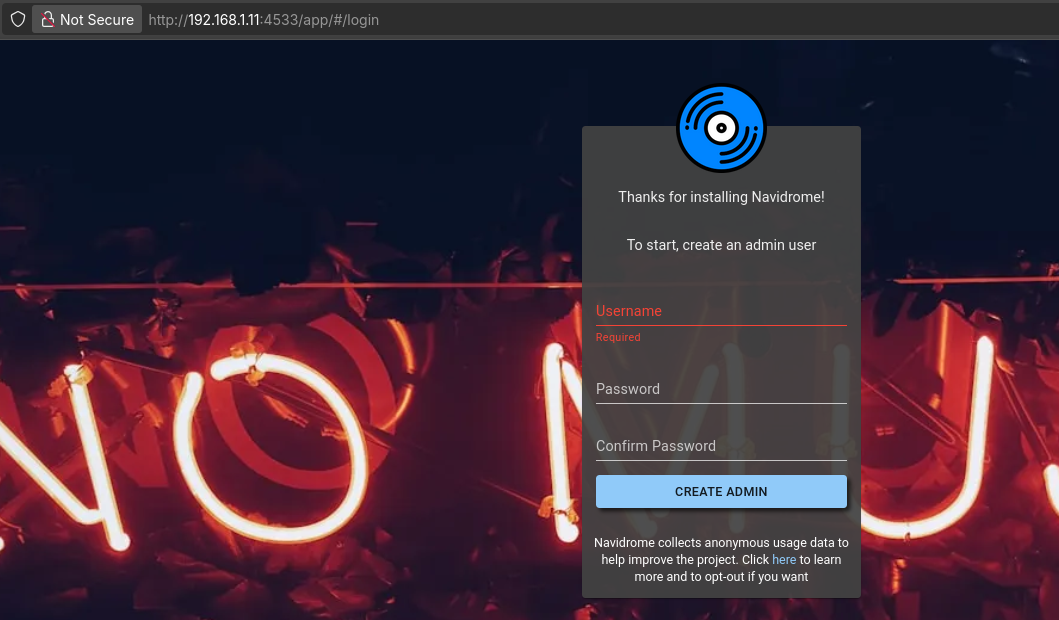

Navidrome is another OSS self-hostable music server:

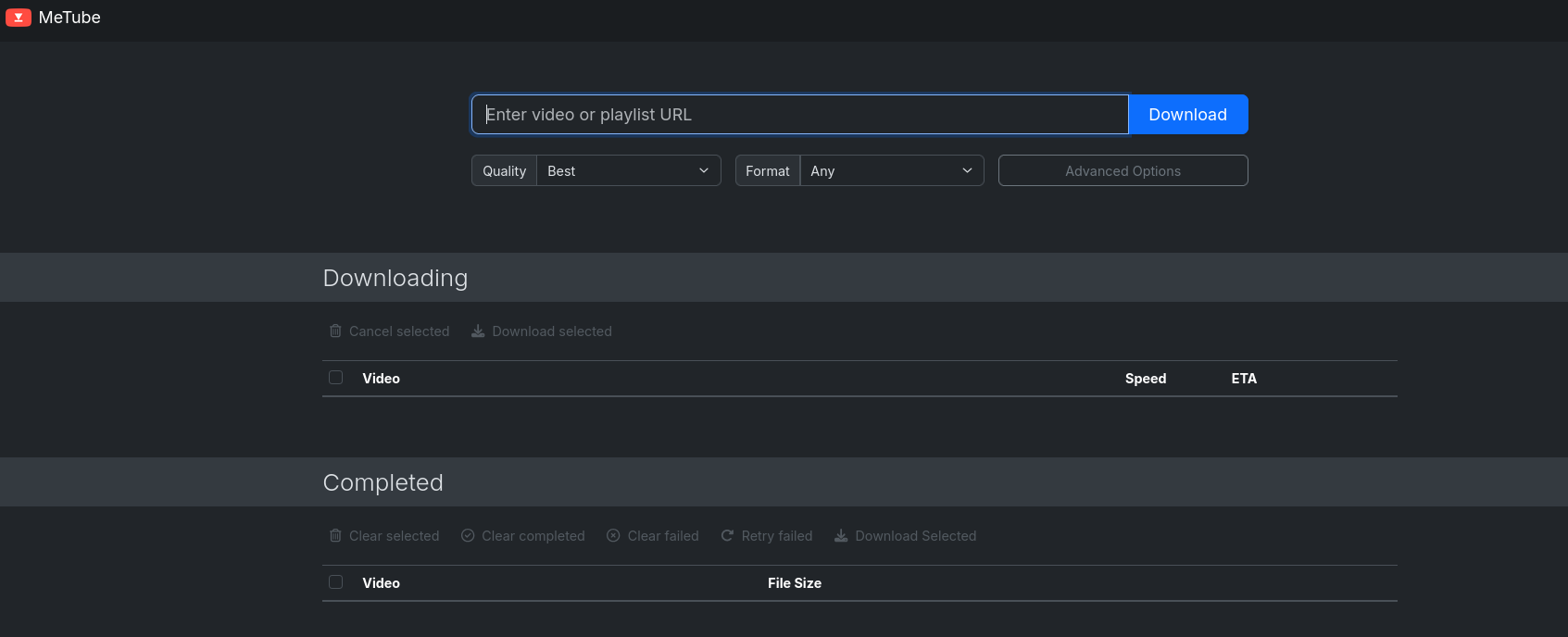

They both get on pretty well with MeTube, especially if you are building a media server.

You can add more services to the stack, like other YouTube front-ends, and create a MeTube + JDownloader + qBittorrent stack.

Python-Based AI Music Creation Libraries and Frameworks

- Magenta: Google’s open-source project provides powerful tools built on TensorFlow for music generation, including pre-trained models that create melodies, harmonies, and rhythms.

- music21: A Python toolkit for musicology, analysis, and symbolic music processing, commonly used with AI models for data preparation and generation.

- pretty_midi: Handles MIDI creation, manipulation, and conversion, useful for AI-generated MIDI data.

- TensorFlow/Keras: Base libraries to build custom neural networks (e.g., LSTMs, Transformers) for music sequence generation.

- pydub and fluidsynth: Useful for audio manipulation and rendering AI-generated MIDI into sound.

Workflow Example with Python:

- Use datasets or MIDI files for training neural networks.

- Train or use pre-trained models (via Magenta or custom TensorFlow code) to generate music sequences.

- Convert sequences into MIDI with pretty_midi or music21.

- Render or process audio with pydub or fluidsynth.

This approach suits developers keen to customize AI music generation or integrate it with other projects.
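The steps above can be sketched end to end with nothing but the standard library. This is a toy illustration, not the Magenta or pretty_midi workflow itself: a first-order Markov chain stands in for the neural network, and plain sine tones written with `wave` stand in for MIDI rendering through fluidsynth. The training melodies, function names, and output file name are all made up for the example.

```python
import math
import random
import struct
import wave
from collections import defaultdict

# Toy "dataset": melodies as lists of MIDI pitch numbers (hypothetical data).
TRAINING_MELODIES = [
    [60, 62, 64, 65, 67, 65, 64, 62, 60],
    [60, 64, 67, 64, 60, 62, 64, 62, 60],
]


def train(melodies):
    """Collect, for each pitch, the pitches that followed it in the data."""
    transitions = defaultdict(list)
    for melody in melodies:
        for current, following in zip(melody, melody[1:]):
            transitions[current].append(following)
    return transitions


def generate(transitions, start, length, seed=0):
    """Random-walk the transition table to produce a new pitch sequence."""
    rng = random.Random(seed)
    sequence = [start]
    while len(sequence) < length:
        # Fall back to the start pitch if the current pitch has no followers.
        options = transitions.get(sequence[-1]) or [start]
        sequence.append(rng.choice(options))
    return sequence


def midi_to_hz(pitch):
    """Convert a MIDI pitch number to a frequency (A4 = 69 = 440 Hz)."""
    return 440.0 * 2 ** ((pitch - 69) / 12)


def render_wav(pitches, path, note_seconds=0.3, sample_rate=22050):
    """Write one decaying sine tone per pitch to a mono 16-bit WAV file."""
    frames = bytearray()
    samples_per_note = round(note_seconds * sample_rate)
    for pitch in pitches:
        freq = midi_to_hz(pitch)
        for n in range(samples_per_note):
            envelope = 1.0 - n / samples_per_note  # linear fade-out to avoid clicks
            value = 0.4 * envelope * math.sin(2 * math.pi * freq * n / sample_rate)
            frames += struct.pack("<h", int(value * 32767))
    with wave.open(path, "wb") as wav_file:
        wav_file.setnchannels(1)
        wav_file.setsampwidth(2)
        wav_file.setframerate(sample_rate)
        wav_file.writeframes(bytes(frames))


transitions = train(TRAINING_MELODIES)
melody = generate(transitions, start=60, length=16)
render_wav(melody, "generated_melody.wav")
```

In a real project you would swap `train` and `generate` for a Magenta or TensorFlow model, and `render_wav` for pretty_midi plus fluidsynth, while keeping the same dataset, model, sequence, audio shape.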

In summary, for quick music creation, use ready AI platforms; for deeper programming control, leverage Python AI libraries like Magenta and music21 to generate and manipulate music.[1][2][3]

Open Source AI Tools for Music Creation

This AI Makes you a PRO Singer !

It takes a song, separates the vocals from the beat, then puts your voice into it (not FOSS).

https://huggingface.co/spaces/facebook/MusicGen

https://gist.github.com/mberman84/45545e48040ef6aafb6a1cb3442edb83

https://gist.github.com/mberman84/afd800f8d4a8764a22571c1a82187bad

https://github.com/facebookresearch/audiocraft

audiocraft How To Install Audiocraft Locally - Meta’s FREE And Open AI Music Gen

arm64: apt install ffmpeg

conda install -c pytorch -c conda-forge pytorch

https://www.gyan.dev/ffmpeg/builds/

choco install ffmpeg

ffmpeg -version

- The Site

- The Source Code at GitHub

- License: MIT ❤️
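Once audiocraft and ffmpeg are installed, generating a clip from Python could look roughly like the sketch below. It follows the usage pattern shown in the audiocraft README (`MusicGen.get_pretrained`, `set_generation_params`, `generate`, `audio_write`). The `clip_stem` and `generate_clip` helpers, the 8-second default, and the choice of the `facebook/musicgen-small` checkpoint are assumptions for illustration, and the first call downloads the model weights.

```python
import re


def clip_stem(prompt: str) -> str:
    """Turn a text prompt into a filesystem-friendly file stem (hypothetical helper)."""
    return re.sub(r"[^a-z0-9]+", "-", prompt.lower()).strip("-")


def generate_clip(prompt: str, seconds: int = 8,
                  model_name: str = "facebook/musicgen-small") -> str:
    """Generate one clip with MusicGen and save it as <stem>.wav (hypothetical helper)."""
    # Imported lazily: audiocraft pulls in PyTorch and fetches model weights on first use.
    from audiocraft.models import MusicGen
    from audiocraft.data.audio import audio_write

    model = MusicGen.get_pretrained(model_name)
    model.set_generation_params(duration=seconds)
    wav = model.generate([prompt])  # one waveform per prompt in the list

    stem = clip_stem(prompt)
    # audio_write adds the ".wav" suffix itself; "loudness" normalizes the volume.
    audio_write(stem, wav[0].cpu(), model.sample_rate, strategy="loudness")
    return f"{stem}.wav"
```

Calling `generate_clip("lo-fi beat for a tech video")` should leave a `lo-fi-beat-for-a-tech-video.wav` next to your script. A GPU makes this much faster, but the small checkpoint is the most forgiving one to try first.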

SunoAI

We touched on Suno (Bark) some time back here.

MIT | 🔊 Text-Prompted Generative Audio Model

The good things, is

Beats with AI

Aren't beats just music?

This can be cool as background music for YouTube tech videos.

Conclusions

AI-generated audio (and songs) is getting crazy.

And this seems to just be the beginning.

Lyrics with AI?

You can simply try LLMs!

FAQ

Interesting Music Related Projects

GraphMuse - Python 📌

GraphMuse is a Python library designed for symbolic music graph processing, addressing the growing need for efficient and effective analysis of musical scores through graph-based methods.

Problem Solved: Traditional music processing lacks efficient tools for analyzing complex musical scores, which often include various elements beyond just notes.

Functionality:

- Converts musical scores into graphs where:

  - Each note is a vertex.

  - Temporal relationships between notes define edges.

- Supports deep graph models for music analysis.

- Built on PyTorch and PyTorch Geometric, offering strong flexibility and performance.

Graph Structure:

- Edges are categorized into:

  - Onset edges (notes starting simultaneously).

  - Consecutive edges (notes starting after others).

  - During edges (notes overlapping with others).

  - Silent edges (connecting notes separated by silence).
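To make the four edge types concrete, here is a small plain-Python sketch that builds them for a handful of notes. It does not use the GraphMuse API, and the exact conditions are my reading of the descriptions above: "consecutive" means a note starts exactly when another ends, "during" means a note starts while another is still sounding, and "silent" links a note to the next notes that start after a gap in which nothing sounds. The `Note` type and `build_edges` function are hypothetical names.

```python
from typing import NamedTuple


class Note(NamedTuple):
    pitch: int       # MIDI pitch number
    onset: float     # start time in beats
    duration: float  # length in beats

    @property
    def offset(self) -> float:
        return self.onset + self.duration


def build_edges(notes):
    """Return {edge_type: [(i, j), ...]} where i and j index into `notes`."""
    edges = {"onset": [], "consecutive": [], "during": [], "silent": []}

    for i, u in enumerate(notes):
        for j, v in enumerate(notes):
            if i == j:
                continue
            if u.onset == v.onset:              # both notes start together
                edges["onset"].append((i, j))
            elif u.offset == v.onset:           # v starts exactly when u ends
                edges["consecutive"].append((i, j))
            elif u.onset < v.onset < u.offset:  # v starts while u is sounding
                edges["during"].append((i, j))

    for i, u in enumerate(notes):
        later_onsets = [v.onset for v in notes if v.onset > u.offset]
        if not later_onsets:
            continue
        next_onset = min(later_onsets)
        # The gap counts as silence only if no note sounds between u's end and next_onset.
        gap_is_silent = not any(
            w.onset < next_onset and w.offset > u.offset for w in notes
        )
        if gap_is_silent:
            for j, v in enumerate(notes):
                if v.onset == next_onset:
                    edges["silent"].append((i, j))

    return edges


# Two notes starting together, one following, then a rest before the last note.
notes = [
    Note(60, 0.0, 1.0),
    Note(64, 0.0, 2.0),
    Note(67, 1.0, 1.0),
    Note(72, 3.0, 1.0),
]
edges = build_edges(notes)
```

The nested loops are quadratic in the number of notes, which is fine for a demo. Doing this efficiently on full scores is the kind of work a dedicated library is meant to take off your hands.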

Key Features:

- Efficient graph creation (up to 300x faster).

- Built-in utilities for preprocessing musical scores.

- Sampling methods for handling variable graph sizes during training.

Use Case:

- Demonstrates pitch spelling tasks using annotated datasets.

Future Plans:

- Improve installation processes.

- Expand model and data loader support.

- Foster community contributions.

GraphMuse is a promising tool for anyone interested in symbolic music analysis: it combines music theory with graph neural networks and simplifies symbolic music processing.

Similar Projects: MusGViz for music visualization and other graph neural network frameworks in music processing.