Testing new LLMs with Streamlit MultiChat


May 25, 2025

Avoid LLM lock-in and the recurring subscription bills.

Simply use APIs.

Together with Streamlit!


OpenAI

They have recently released the o3-mini model.

GPT-4o-mini has been my go-to in terms of value for money.

But now we also have o1 (and o1-mini) and o3-mini, with larger context windows (up to 200K tokens).
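The reasoning models do not take exactly the same request parameters as GPT-4o-mini. As I understand OpenAI's docs at the time of writing, o1 and o3-mini expect `max_completion_tokens` instead of `max_tokens` and reject a custom `temperature`. Below is a minimal sketch of a helper a multichat app could use to build the arguments for `client.chat.completions.create(...)` per model; the helper names and the "starts with `o` plus a digit" naming rule are my own assumptions, not an official API.

```python
def is_reasoning_model(model: str) -> bool:
    # Assumption: reasoning models are named like o1, o1-mini, o3-mini, ...
    return len(model) > 1 and model[0] == "o" and model[1].isdigit()


def build_chat_kwargs(model: str, prompt: str, max_tokens: int = 512,
                      temperature: float = 0.7) -> dict:
    """Build keyword arguments for client.chat.completions.create()."""
    kwargs = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    if is_reasoning_model(model):
        # Reasoning models: the token limit goes in max_completion_tokens,
        # and temperature is left at the server default.
        kwargs["max_completion_tokens"] = max_tokens
    else:
        kwargs["max_tokens"] = max_tokens
        kwargs["temperature"] = temperature
    return kwargs
```

With an `OpenAI()` client, a call would look like `client.chat.completions.create(**build_chat_kwargs("o3-mini", "Hello!"))`, so the same chat UI can switch between GPT-4o-mini and the o-series without special-casing each request.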

```sh
pip install openai==1.61.0
```

See the latest OpenAI models:

```python
from dotenv import load_dotenv
from openai import OpenAI  # pip install "openai>0.28"

# Load environment variables (e.g. OPENAI_API_KEY) from the .env file
load_dotenv()

client = OpenAI()

# List the IDs of all models available to your API key
models = client.models.list()

for model in models:
    print(model.id)
```

Grok
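xAI's API for Grok is OpenAI-compatible, and so is Ollama's local endpoint, so the same `OpenAI` client used to list models above can talk to all of them by swapping `base_url` and the API key. Here is a minimal sketch of a provider table for the multichat; the `PROVIDERS` dict, the `XAI_API_KEY` variable name and the `client_kwargs` helper are hypothetical choices, and the base URLs are the ones I believe each service documents.

```python
import os

# Hypothetical provider table; base_url=None means the OpenAI SDK default.
PROVIDERS = {
    "openai": {"base_url": None, "key_env": "OPENAI_API_KEY"},
    "grok": {"base_url": "https://api.x.ai/v1", "key_env": "XAI_API_KEY"},
    # Ollama ignores the key, but the OpenAI client needs a non-empty one.
    "ollama": {"base_url": "http://localhost:11434/v1", "key_env": None},
}


def client_kwargs(provider: str) -> dict:
    """Return keyword arguments for OpenAI(...) for the chosen provider."""
    if provider not in PROVIDERS:
        raise ValueError(f"Unknown provider: {provider}")
    cfg = PROVIDERS[provider]
    kwargs = {}
    if cfg["base_url"] is not None:
        kwargs["base_url"] = cfg["base_url"]
    if cfg["key_env"] is None:
        kwargs["api_key"] = "ollama"  # placeholder value, not checked locally
    else:
        key = os.environ.get(cfg["key_env"])
        if not key:
            raise RuntimeError(f"Set {cfg['key_env']} in your .env file")
        kwargs["api_key"] = key
    return kwargs
```

After `load_dotenv()`, something like `client = OpenAI(**client_kwargs("grok"))` would give a Grok client, and a Streamlit selectbox over `PROVIDERS.keys()` is one simple way to let the user pick the backend.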

DeepSeek via Ollama
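DeepSeek-R1 models served by Ollama (e.g. after `ollama pull deepseek-r1`) may return their reasoning wrapped in `<think>...</think>` before the final answer, depending on the Ollama version and settings. For a chat UI it can help to show the two parts separately. This is a minimal sketch, assuming the tag block appears at most once at the start of the reply; the `split_reasoning` helper is hypothetical.

```python
import re

# Matches a leading <think>...</think> block (DOTALL so it spans newlines).
THINK_RE = re.compile(r"^\s*<think>(.*?)</think>\s*", re.DOTALL)


def split_reasoning(reply: str) -> tuple:
    """Return (reasoning, answer); reasoning is '' if there is no <think> block."""
    match = THINK_RE.match(reply)
    if match is None:
        return "", reply.strip()
    return match.group(1).strip(), reply[match.end():].strip()
```

In Streamlit, the reasoning part could go inside an `st.expander(...)` while the answer is rendered normally in the chat message.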

Conclusions

Companions for this multichat.

Agents with Streamlit

A very interesting article from Anthropic on how they built a multi-agent research system: https://www.anthropic.com/engineering/built-multi-agent-research-system

FULL v0, Cursor, Manus, Same.dev, Lovable, Devin, Replit Agent, Windsurf Agent, VSCode Agent, Dia Browser & Trae AI (And other Open Sourced) System Prompts, Tools & AI Models.

AIssistant

Read the Web!

https://jalcocert.github.io/JAlcocerT/scrap-and-chat-with-the-web/
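The linked post covers the full scrape-and-chat setup. To illustrate only the first step, here is a minimal sketch that turns fetched HTML into plain text for an LLM prompt using the standard library's `html.parser`. It skips `<script>` and `<style>` contents and is not the method used in that post; the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, skipping <script> and <style> contents."""

    SKIP = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep non-empty text that is not inside a skipped tag
        if self._skip_depth == 0 and data.strip():
            self.parts.append(data.strip())


def html_to_text(html: str) -> str:
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.parts)
```

For simple static pages, `urllib.request.urlopen(url).read().decode("utf-8")` is enough to get the HTML; JavaScript-heavy sites need a real browser-based scraper.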

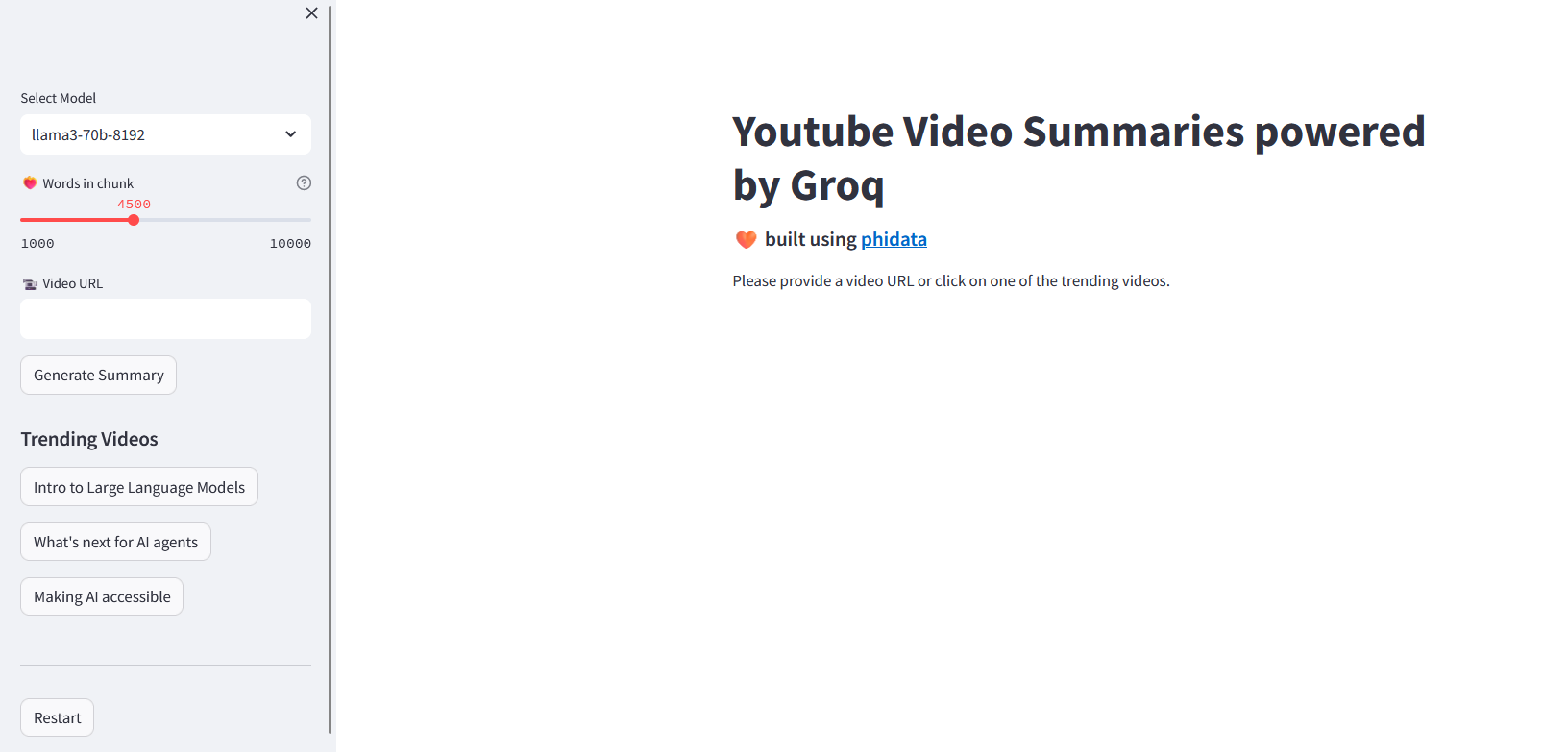

YT Summarizer

```yaml
version: '3.8'

services:
  phidata_service:
    image: ghcr.io/jalcocert/phidata:yt-groq # phidata_yt_groq
    container_name: phidata_yt_groq
    ports:
      - "8501:8501"
    environment:
      - GROQ_API_KEY=gsk_your_api_key_here # your_api_key_here 😝
    command: streamlit run cookbook/llms/groq/video_summary/app.py
    # command: tail -f /dev/null # Keep the container running
    # networks:
    #   - cloudflare_tunnel
    #   - nginx_default

# networks:
#   cloudflare_tunnel:
#     external: true
#   nginx_default:
#     external: true
```
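A summarizer first needs the video ID before it can fetch a transcript. I have not checked how the phidata cookbook app parses links, so treat this as a hypothetical standard-library helper that handles the common `youtube.com/watch?v=`, `youtu.be/` and `/shorts/` URL forms.

```python
from typing import Optional
from urllib.parse import parse_qs, urlparse


def youtube_video_id(url: str) -> Optional[str]:
    """Return the video ID from a YouTube URL, or None if not recognised."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    if host == "youtu.be":
        # Short links: https://youtu.be/<id>
        return parsed.path.lstrip("/").split("/")[0] or None
    if host in {"youtube.com", "m.youtube.com"}:
        if parsed.path == "/watch":
            # Standard links: https://www.youtube.com/watch?v=<id>
            return parse_qs(parsed.query).get("v", [None])[0]
        if parsed.path.startswith("/shorts/"):
            # Shorts: https://www.youtube.com/shorts/<id>
            return parsed.path.split("/")[2] or None
    return None
```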