Summarize YouTube Videos with Groq and Streamlit

TL;DR

Using Streamlit + Groq API LLM inference to summarize YT videos.

A WebApp that you can SelfHost

+++ Understanding Streamlit Auth +++ An overview of other authentication options for Streamlit apps, like Logto

Intro

How to avoid falling for clickbait with Generative AI.

Use Groq and Streamlit to summarize YouTube videos, so that you know whether they are worth your time!

Groq, Inc. offers an API for its Language Processing Unit (LPU), AI hardware specifically designed to accelerate natural language processing (NLP) tasks.

Thanks to the Groq API, developers have a programmatic way to interact with the LPU and leverage its capabilities in their own applications.
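A minimal sketch of what such a call can look like, using only the Python standard library. It assumes Groq's OpenAI-compatible REST endpoint (`https://api.groq.com/openai/v1/chat/completions`) with a Bearer token; `build_chat_request` and `send` are hypothetical helper names, and the model id you pass (for example `llama3-8b-8192`) is only an illustration, since the served models change over time.

```python
import json
import urllib.request

# Assumption: Groq serves an OpenAI-compatible REST API under this base URL.
GROQ_BASE_URL = "https://api.groq.com/openai/v1"


def build_chat_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request."""
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
    }
    return urllib.request.Request(
        url=f"{GROQ_BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # key from the Groq console
            "Content-Type": "application/json",
            "User-Agent": "yt-summary-sketch/0.1",  # explicit client name
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=30) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

For example, `send(build_chat_request(os.environ["GROQ_API_KEY"], "llama3-8b-8192", "Why is the sky blue?"))` would return the answer text. Building the request separately from sending it keeps the payload easy to inspect without spending API quota.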

- The PhiData Project is available on GitHub

- Project Source Code at GitHub

- It is licensed under the Mozilla Public License v2 ✅


Build AI Assistants with memory, knowledge and tools.

Today, we are going to use the Groq API with F/OSS models to… chat with a YouTube video.

With Llama 3 and crazy inference speed.

| Information | Details |
|---|---|
| API Key from Groq | You will need an API key from Groq to use the project. |
| Model Control | The models might be open, but you won’t have full local control over them: your queries are sent to a third party. ❎ |
| Get API Keys | Get the Groq API Keys |
| LLMs | The LLMs that we will run are open source ✅ |

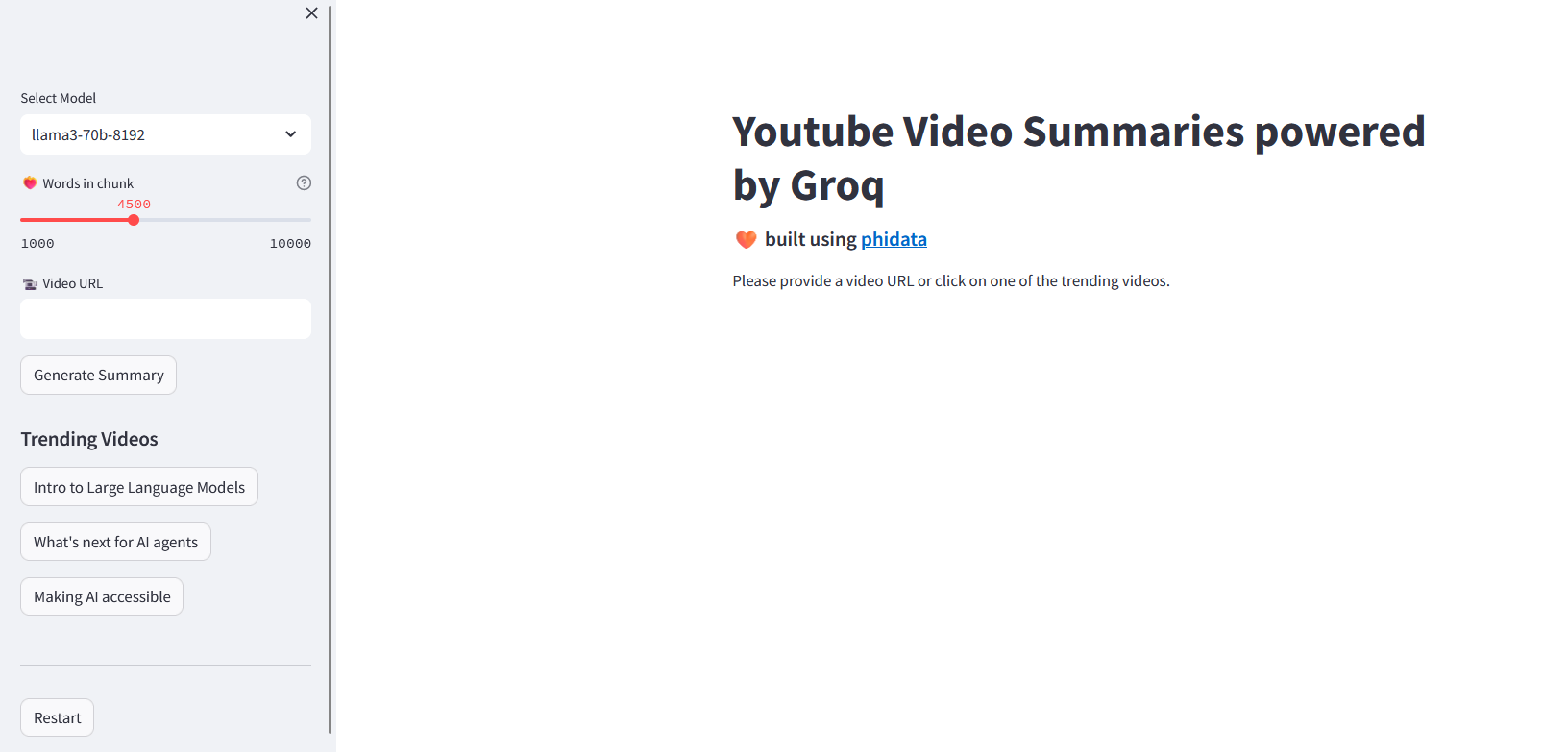

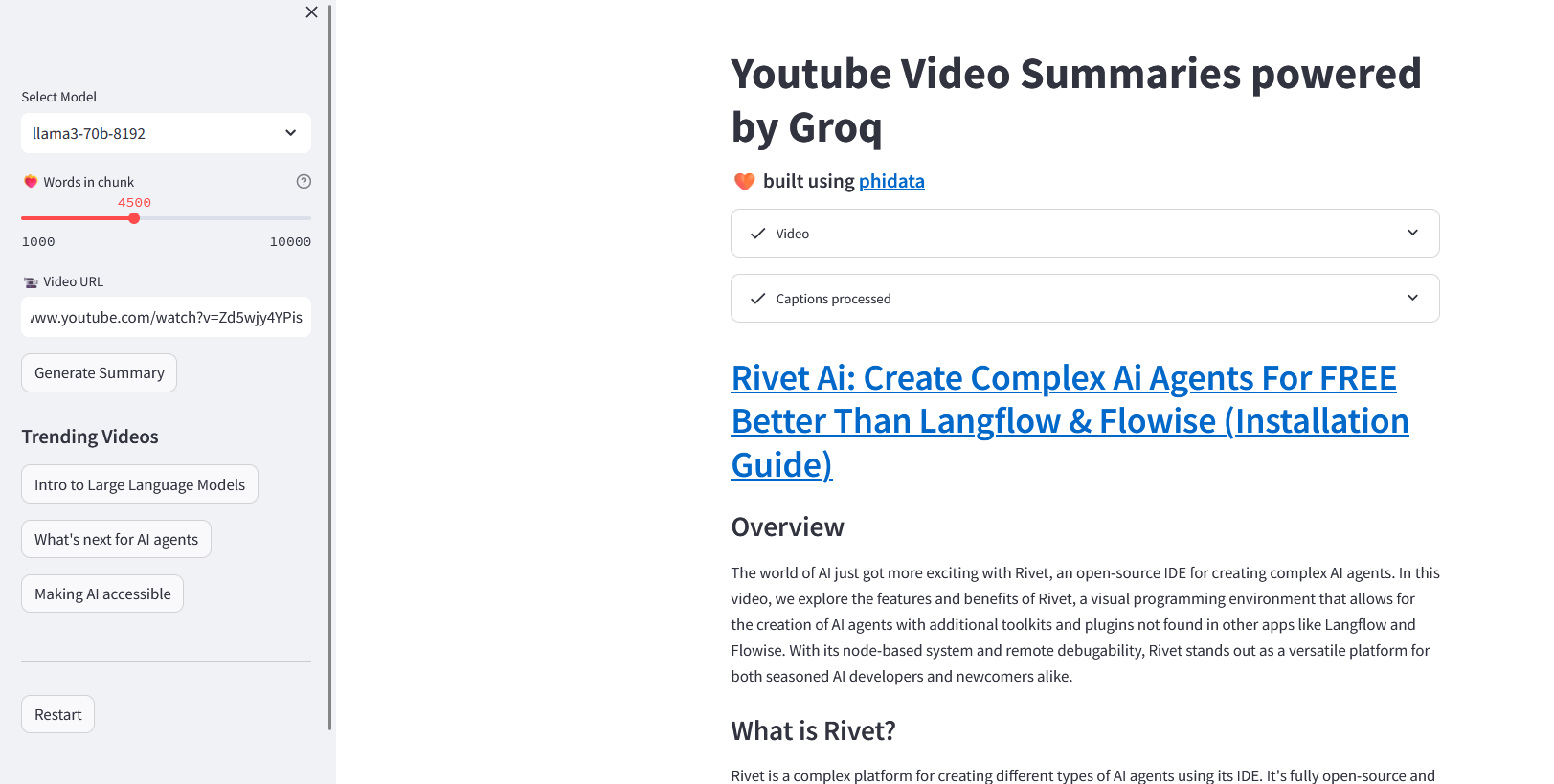

This is what we will get as our YouTube Summarizer web app UI:

And there are many more interesting projects in their repository!

SelfHosting Groq Video Summaries

The phidata repository contains several sample applications like this one, but today we will focus on: phidata/cookbook/llms/groq/video_summary

Getting YouTube video summaries with Groq is very simple, and we are going to do it systematically with Docker.
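Under the hood, the app follows a two-step pattern (see the diagrams in this section): captions are split into word-based chunks, each chunk is summarized, and the chunk summaries are summarized once more into the final report. The sketch below reproduces only that control flow, without any LLM; `chunk_words` and `summarize_video` are hypothetical names rather than phidata functions, and `summarize` stands in for a call to a Groq model.

```python
from typing import Callable, List


def chunk_words(text: str, chunk_size: int) -> List[str]:
    """Split text into consecutive chunks of at most `chunk_size` words."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def summarize_video(captions: str, chunk_size: int, summarize: Callable[[str], str]) -> str:
    """Summarize each chunk, then summarize the joined chunk summaries.

    `summarize` is any function mapping text to shorter text,
    e.g. a wrapper around a Groq chat completion (hypothetical here).
    """
    chunks = chunk_words(captions, chunk_size)
    if not chunks:
        return ""  # no captions: nothing to summarize
    if len(chunks) == 1:
        return summarize(chunks[0])  # a single chunk needs only one pass
    chunk_summaries = [summarize(chunk) for chunk in chunks]
    return summarize("\n\n".join(chunk_summaries))
```

The chunk size is the trade-off exposed by the app's slider: smaller chunks keep each request comfortably inside the model's context window, at the cost of more requests.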

The PhiData team has made our life even easier with some modules that show:

- How to query an LLM with Groq

- How to list the LLMs available through the Groq API (because there is more to come…)
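Listing the models works the same way. A sketch, assuming the OpenAI-compatible `GET https://api.groq.com/openai/v1/models` endpoint, whose JSON response carries a `data` array with one object per model and an `id` field on each. The network call and the parsing are split into hypothetical helpers so the parsing can be tried on a saved response.

```python
import json
import urllib.request
from typing import List

GROQ_MODELS_URL = "https://api.groq.com/openai/v1/models"  # assumed endpoint


def fetch_models_json(api_key: str) -> str:
    """Download the raw model list (this performs a network call)."""
    request = urllib.request.Request(
        GROQ_MODELS_URL,
        headers={"Authorization": f"Bearer {api_key}", "User-Agent": "yt-summary-sketch/0.1"},
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.read().decode("utf-8")


def parse_model_ids(raw_json: str) -> List[str]:
    """Return the sorted model ids found in a /models response body."""
    payload = json.loads(raw_json)
    return sorted(entry["id"] for entry in payload.get("data", []))
```

An empty result corresponds to the "No LLMs available" branch of the app flow: there is nothing to offer in the model dropdown.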

The magic happens in the assistant.py module:

```mermaid
flowchart TD
    A[Start] --> B{Choose Summarizer Function}
    B -->|get_chunk_summarizer| C1[Define model and debug_mode]
    B -->|get_video_summarizer| C2[Define model and debug_mode]
    C1 --> D1[Initialize Assistant with parameters]
    C2 --> D2[Initialize Assistant with parameters]
    D1 --> E[Pass model parameter to Groq API]
    D2 --> E
    E --> F[Set Assistant Properties]
    F --> G1[Define Assistant Name, Description]
    F --> G2[Set Instructions and Template Format]
    F --> G3[Enable Markdown & Debug Mode]
    G1 --> H[Return Assistant Instance]
    G2 --> H
    G3 --> H
    H --> I[Execute Summary Generation]
    I --> J[Generate Report Using Model-Specific Prompt]
    J --> K[Add Dynamic Date and Model Info to Report]
    K --> L[Generate Final Markdown Report]
    L --> M[End]
```

At a higher level:

```mermaid
graph TD
    %% Streamlit UI
    subgraph Streamlit_App["Streamlit App (app.py)"]
        UI1[Select LLM Model & Chunk Size]
        UI2[Enter Video URL & Click Generate]
        UI3[Render Video & JSON metadata]
        UI4[Display Chunk Summaries & Final Summary]
    end

    %% Authentication
    subgraph Auth["Authentication"]
        Auth1[Auth_functions.login]
    end

    %% YouTube ingestion
    subgraph YouTubeTools_Lib["YouTubeTools (phi.tools)"]
        YT1["get_youtube_video_data(URL)"]
        YT2["get_youtube_video_captions(URL)"]
    end

    %% Chunk-level summarization
    subgraph Chunk_Summarization
        C1[Split captions into word-based chunks]
        C2["get_chunk_summarizer(model)"]
        C3["chunk_summarizer.run(chunk)"]
        C4[Collect chunk summaries]
    end

    %% Final summarization
    subgraph Final_Summarization
        F1["get_video_summarizer(model)"]
        F2["video_summarizer.run(aggregated info)"]
    end

    %% Flow
    UI1 --> UI2
    UI2 --> Auth1
    Auth1 -- success --> UI2
    UI2 --> YT1 --> UI3
    UI2 --> YT2 --> C1
    C1 --> C2 --> C3 --> C4
    C4 --> F1 --> F2 --> UI4
```

Really, Just Get Docker 🐋👇

You can install Docker on any PC, Mac, or Linux machine, at home or with any cloud provider you wish. It will just take a few moments. If you are on Linux, just run:

```sh
sudo apt-get update && sudo apt-get upgrade -y
curl -fsSL https://get.docker.com -o get-docker.sh
sudo sh get-docker.sh
```

And install Docker Compose as well with:

```sh
sudo apt install docker-compose -y
```

When the process finishes, you can use Docker to self-host other services as well. You should see the versions with:

```sh
docker --version
docker-compose --version
#sudo systemctl status docker #and the status
```

We will use this Dockerfile to pull the app we are interested in from the repository and bundle it:

```dockerfile
FROM python:3.11

# Install git
RUN apt-get update && apt-get install -y git

# Set up the working directory
#WORKDIR /app

# Shallow-clone the repository and keep only the video_summary example checked out
RUN git clone --depth=1 https://github.com/phidatahq/phidata && \
    cd phidata && \
    git sparse-checkout init && \
    git sparse-checkout set cookbook/llms/groq/video_summary && \
    git pull origin main

WORKDIR /phidata

# Install Python requirements
RUN pip install -r /phidata/cookbook/llms/groq/video_summary/requirements.txt

#RUN sed -i 's/numpy==1\.26\.4/numpy==1.24.4/; s/pandas==2\.2\.2/pandas==2.0.2/' requirements.txt

# Set the entrypoint to a bash shell
CMD ["/bin/bash"]
```

And now we just have to build our Docker image with the Groq YouTube Summarizer app:

```sh
docker build -t phidata_yt_groq .
sudo docker-compose up -d
#docker-compose -f phidata_yt_groq_Docker-compose.yml up -d
docker exec -it phidata_yt_groq /bin/bash
```

Now, you are inside the built container.

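Just execute the Streamlit app to test it, with streamlit run cookbook/llms/groq/video_summary/app.py inside the container, or start a container that runs it directly: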

```sh
# docker or podman run (alternative to docker-compose; container names must be unique)
docker run -d --name=phidata_yt_groq -p 8502:8501 -e GROQ_API_KEY=your_api_key_here \
  phidata_yt_groq streamlit run cookbook/llms/groq/video_summary/app.py

# podman run -d --name=phidata_yt_groq -p 8502:8501 -e GROQ_API_KEY=your_api_key_here \
#   phidata_yt_groq tail -f /dev/null
```

How to use GitHub Actions to build a multi-arch container image ⏬

If you are familiar with GitHub Actions, you can use the following workflow:

```yaml
name: CI/CD Build MultiArch

on:
  push:
    branches:
      - main

jobs:
  build-and-push:
    runs-on: ubuntu-latest

    steps:
      - name: Checkout repository
        uses: actions/checkout@v4

      - name: Set up QEMU
        uses: docker/setup-qemu-action@v3

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3

      - name: Login to GitHub Container Registry
        uses: docker/login-action@v3
        with:
          registry: ghcr.io
          username: ${{ github.actor }}
          password: ${{ secrets.CICD_TOKEN_YTGroq }}

      # GHCR image names must be lowercase
      - name: Set lowercase owner name
        run: |
          echo "OWNER_LC=${OWNER,,}" >> $GITHUB_ENV
        env:
          OWNER: '${{ github.repository_owner }}'

      - name: Build and push Docker image
        uses: docker/build-push-action@v5
        with:
          context: .
          push: true
          platforms: linux/amd64,linux/arm64
          tags: |
            ghcr.io/${{ env.OWNER_LC }}/phidata:yt-groq
```

It uses QEMU together with the docker buildx command to build x86 (amd64) and ARM64 container images for you.

And once you are done, deploy the AI YouTube summarizer as a Docker Compose stack:

```yaml
version: '3.8'

services:
  phidata_service:
    image: phidata_yt_groq #ghcr.io/jalcocert/phidata:yt-groq
    container_name: phidata_yt_groq
    ports:
      - "8501:8501"
    environment:
      - GROQ_API_KEY=your_api_key_here #your_api_key_here 😝
    command: streamlit run cookbook/llms/groq/video_summary/app.py
    #command: tail -f /dev/null # Keep the container running
#    networks:
#      - cloudflare_tunnel
#      - nginx_default

# networks:
#   cloudflare_tunnel:
#     external: true
#   nginx_default:
#     external: true
```

For production deployment, you can use NGINX or Cloudflare Tunnels to get HTTPS.

Docker Compose Stack for GenAI Apps: YT Summarizer, Ollama and MultiChat 📌

```yaml
#version: '3.8'

services:
  phidata_service:
    image: ghcr.io/jalcocert/phidata:yt-groq #phidata:yt_summary_groq
    container_name: phidata_yt_groq
    ports:
      - "8502:8501"
    environment:
      - GROQ_API_KEY=gsk_dummy-groq-api # your_api_key_here (no quotes: in list syntax they become part of the value)
    command: streamlit run cookbook/llms/groq/video_summary/app.py
    restart: always
    networks:
      - cloudflare_tunnel

  streamlit_multichat:
    image: ghcr.io/jalcocert/streamlit-multichat:latest
    container_name: streamlit_multichat
    volumes:
      - ai_streamlit_multichat:/app
    working_dir: /app
    command:
      - /bin/sh
      - -c
      - |
        set -e
        mkdir -p /app/.streamlit
        echo 'OPENAI_API_KEY = "sk-dummy-openai-key"' > /app/.streamlit/secrets.toml
        echo 'GROQ_API_KEY = "dummy-groq-key"' >> /app/.streamlit/secrets.toml
        echo 'ANTHROPIC_API_KEY = "sk-dummy-anthropic-key"' >> /app/.streamlit/secrets.toml
        streamlit run Z_multichat_Auth.py
    ports:
      - "8501:8501"
    networks:
      - cloudflare_tunnel
      # - nginx_default
    restart: always

  ollama:
    image: ollama/ollama
    container_name: ollama
    ports:
      - "11434:11434" #Ollama API
    volumes:
      #- ollama_data:/root/.ollama
      - /home/Docker/AI/Ollama:/root/.ollama
    networks:
      - ollama_network

  ollama-webui:
    image: ghcr.io/ollama-webui/ollama-webui:main
    container_name: ollama-webui
    ports:
      - "3000:8080" # 3000 is the UI port
    environment:
      - OLLAMA_BASE_URL=http://192.168.3.200:11434 # or http://ollama:11434 via ollama_network
    # extra_hosts:
    #   - "host.docker.internal:host-gateway"
    volumes:
      - /home/Docker/AI/OllamaWebUI:/app/backend/data
    restart: always
    networks:
      - ollama_network

networks:
  cloudflare_tunnel:
    external: true
  ollama_network:
    external: false
  # nginx_default:
  #   external: true

volumes:
  ai_streamlit_multichat:
```

Now that you have your YouTube Summarizer running…

…if you are interested, this is the overall app flow of the Streamlit YouTube Summarizer with Groq:

graph TD

A[Start App] --> B[Load .env variables]

B --> C[Check Authentication]

C -->|Authenticated| D[Fetch Models from Groq API]

D --> E{Models Available?}

E -->|Yes| F[Display Model Selection Dropdown]

F --> G[User Selects Model]

G --> H[Set Chunk Size via Slider]

H --> I[User Enters YouTube Video URL]

I --> J{Generate Report Button Clicked?}

J -->|Yes| K[Store Video URL in Session State]

K --> L[Fetch Video Data using YouTubeTools]

L --> M[Fetch Video Captions]

M --> N{Captions Available?}

N -->|Yes| O[Chunk Captions based on Chunk Size]

O --> P{Multiple Chunks?}

P -->|Yes| Q[Summarize Each Chunk with Selected Model]

Q --> R[Combine Chunk Summaries]

R --> S[Display Final Summary]

P -->|No| T[Summarize Single Caption with Selected Model]

T --> S

E -->|No| U[Display No LLMs available Message]

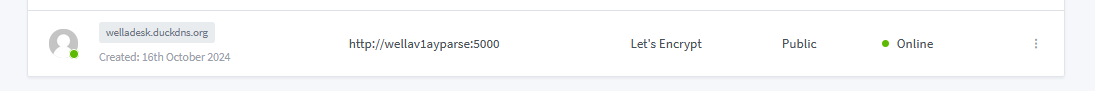

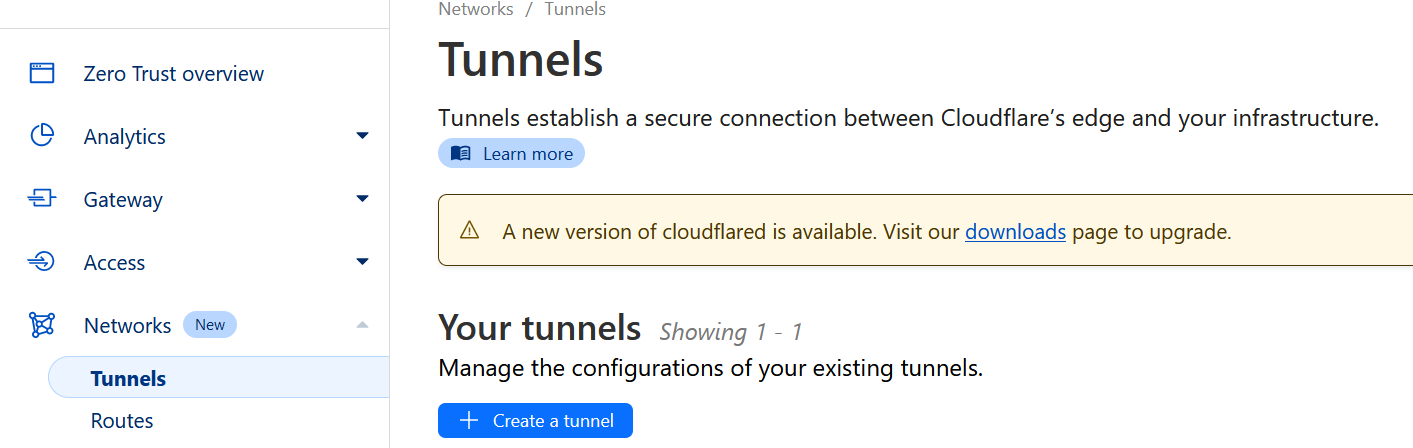

N -->|No| V[Display - Could Not Parse Video - Message]How to SelfHost the Streamlit AI App with Cloudflare ⏬

- Visit https://one.dash.cloudflare.com and open Tunnels (Network section)

- Create a new tunnel

And make sure to set the service to the container name and its internal port, for example http://phidata_yt_groq:8501.

Conclusion

Now we have our Streamlit UI at localhost:8501, thanks to the Docker configuration above.

Feel free to ask Groq for summaries of YouTube videos!

Similar AI Projects 👇

- Using PrivateGPT to Chat with your Docs Locally ✅

- Using Streamlit + OpenAI API to chat with your PDF Docs

Along the way, we have also learnt how to query Groq LLMs via the API, as covered in the step-by-step Python section below.

Groq vs Others

| Service | Description |
|---|---|
| Ollama | A free and open-source tool to run LLMs locally, so you can host your own models. Ideal for users who prefer to keep data on their own servers. |
| Text Generation Web UI | A free and open-source web UI for running local models to generate text. Great for content creators and writers needing quick text generation. |
| Mistral | French AI company offering open-weight and commercial LLMs, available to download or through its API. |
| Grok (xAI) | xAI's LLM and chatbot, integrated into X (formerly Twitter). |
| Gemini (Google) | Google’s family of LLMs, available through the Gemini API. |
| Vertex AI (Google) | Google’s AI platform offering tools for data scientists and developers to build, deploy, and scale AI models. |
| AWS Bedrock | Amazon’s managed service for building generative AI apps with foundation models from several providers through a single API. |
| Anthropic (Claude) | AI company behind the Claude models, aiming to build models that respect human values. Access their API and manage your keys in their console. |

Adding Simple Streamlit Auth

We can use this simple package: https://pypi.org/project/streamlit-authenticator/

A secure authentication module to validate user credentials in a Streamlit application.
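What validating user credentials involves, in a nutshell: store only salted, deliberately slow password hashes and compare them in constant time. streamlit-authenticator takes care of this for you; the sketch below only illustrates the idea with the standard library's PBKDF2 (`hashlib.pbkdf2_hmac`), which is not necessarily the algorithm the package uses. `hash_password` and `verify_password` are hypothetical helpers.

```python
import hashlib
import hmac
import os
from typing import Optional

ITERATIONS = 600_000  # work factor: higher means slower brute-force attempts


def hash_password(password: str, salt: Optional[bytes] = None) -> str:
    """Return 'salt_hex$hash_hex', which is what gets stored instead of the password."""
    salt = salt if salt is not None else os.urandom(16)  # random per-user salt
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return f"{salt.hex()}${digest.hex()}"


def verify_password(password: str, stored: str) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    salt_hex, digest_hex = stored.split("$", 1)
    candidate = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), bytes.fromhex(salt_hex), ITERATIONS
    )
    return hmac.compare_digest(candidate.hex(), digest_hex)
```

This is also why the Auth_functions.py example hashes its sample passwords before handing them to the authenticator.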

```python
import streamlit as st

from Z_Functions import Auth_functions as af


def main():
    # Render the app only after a successful login
    if af.login():
        # Streamlit UI setup
        st.title("Portfolio Dividend Visualization")


if __name__ == "__main__":
    main()
```

It references Auth_functions.py, a separate module (inside a Z_Functions folder), where the sample users are created.

Define Auth_functions.py in a separate file with ⏬

```python
import streamlit as st  # needed for st.write / st.error / st.warning below
import streamlit_authenticator as stauth

# Authentication function #https://github.com/naashonomics/pandas_templates/blob/master/login.py
# Note: this uses the older positional streamlit-authenticator API (lists of names,
# usernames and passwords); newer releases expect a credentials dict instead.


def login():
    names = ['User Nick 1 🐷', 'User Nick 2']  # display names
    usernames = ['User 1', 'User 2']  # login names
    passwords = ['SomePassForUser1', 'anotherpassworduser2']

    # Only hashed passwords are handed to the authenticator
    hashed_passwords = stauth.Hasher(passwords).generate()

    authenticator = stauth.Authenticate(names, usernames, hashed_passwords,
                                        'some_cookie_name', 'some_signature_key', cookie_expiry_days=1)

    name, authentication_status, username = authenticator.login('Login', 'main')

    if authentication_status:
        authenticator.logout('Logout', 'main')
        st.write(f'Welcome *{name}*')
        return True
    elif authentication_status is False:
        st.error('Username/password is incorrect')
    elif authentication_status is None:
        st.warning('Please enter your username (🐷) and password (💰)')

    return False
```

FAQ

| What’s Trending Now? | Description |
|---|---|
| Toolify | A platform that showcases the latest AI tools and technologies, helping users discover trending AI solutions. |
| Future Tools | A website dedicated to featuring the most cutting-edge and trending tools in the tech and AI industry. |

How to use Groq API Step by Step with Python ⏬

Thanks to TirendazAcademy, we have a step-by-step guide on how to query the Groq API with Python:

- https://github.com/TirendazAcademy/LangChain-Tutorials/blob/main/Groq-Api-Tutorial.ipynb - We can even use Google Colab!

```python
!pip install -q -U langchain==0.2.6 langchain_core==0.2.10 langchain_groq==0.1.5 gradio==4.37.1

from google.colab import userdata
from langchain_groq import ChatGroq
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.output_parsers import StrOutputParser

# Assumes a Colab secret named GROQ_API_KEY (or paste your key here as a string)
groq_api_key = userdata.get('GROQ_API_KEY')

chat = ChatGroq(
    api_key=groq_api_key,
    model_name="mixtral-8x7b-32768",  # check Groq's model list for current ids
)

system = "You are a helpful assistant."
human = "{text}"
prompt = ChatPromptTemplate.from_messages([("system", system), ("human", human)])

# prompt -> model -> plain string
chain = prompt | chat | StrOutputParser()

chain.invoke({"text": "Why is the sky blue?"})
```

Other Interesting Projects with Groq

Ways to Secure a Streamlit App

We have already seen a simple way with the Streamlit Auth Package.

But what if we need something more robust?

How to secure access to your AI apps

F/OSS apps for application access management:

- Authentik

- Logto:

Logto is an open-source Auth0, Cognito and Firebase auth alternative for modern apps and SaaS products, supporting OIDC, OAuth 2.0 and SAML open standards for authentication and authorization.
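For a feel of what OIDC / OAuth 2.0 support means in practice, here is a sketch of the PKCE step (RFC 7636) a client performs before redirecting a user to an identity provider such as Logto: it generates a random `code_verifier`, derives `code_challenge = BASE64URL(SHA256(code_verifier))`, and sends the challenge in the authorization request. The endpoint, client id and redirect URI are placeholders rather than Logto's real values, and in practice a Logto SDK (or the Protected App) handles all of this for you.

```python
import base64
import hashlib
import secrets
from urllib.parse import urlencode


def make_code_verifier() -> str:
    """Random verifier from the URL-safe alphabet (43 chars for 32 random bytes)."""
    return secrets.token_urlsafe(32)


def code_challenge_s256(verifier: str) -> str:
    """BASE64URL-encode SHA-256(verifier) without '=' padding (PKCE S256 method)."""
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def authorization_url(endpoint: str, client_id: str, redirect_uri: str,
                      verifier: str, state: str) -> str:
    """Build an authorization-code request URL carrying the PKCE challenge.

    `endpoint`, `client_id` and `redirect_uri` are placeholders for your provider's values.
    """
    params = {
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": "openid profile",
        "state": state,  # random value checked on the callback (CSRF protection)
        "code_challenge": code_challenge_s256(verifier),
        "code_challenge_method": "S256",
    }
    return f"{endpoint}?{urlencode(params)}"
```

After login, the provider redirects back with a code, which the client exchanges together with the original code_verifier; the provider recomputes the challenge to confirm both requests came from the same client.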

| Interesting Logto Resources | Link |
|---|---|
| Protected App Recipe | Documentation |
| Manage Users (Admin Console) Recipe | Documentation |
| Webhooks Recipe | Documentation –> Webhooks URL |

Social sign-in experience with Logto

Authenticate Users via Email: Easily authenticate users through email.

Create a Protected App: Add authentication with simplicity and speed.

- Protected App securely maintains user sessions and proxies your app requests.

- Powered by Cloudflare Workers, enjoy top-tier performance and 0ms cold start worldwide.

- Protected App Documentation

Video Tutorial: Learn how to build your app’s authentication in clicks, no code required.

How to install AI easily

Some F/OSS Projects to help us get started with AI:

AI Browser - MIT ❤️ Licensed!

And if you need them, here are some FREE vector stores for AI projects:

| Project/Tool | Link |
|---|---|
| Vector Admin Project | Self-Hosting Vector Admin with Docker |
| FOSS Vector DBs for AI Projects | Self-Hosting Vector Admin with Docker |
| ChromaDB | Self-Hosting ChromaDB with Docker |