Pis vs Mini PC for a Home Server

Pis

Some time ago I ran a performance comparison between two popular ARM boards.

Now it is time to see how they stack up against a mini PC of similar cost.

For benchmarking I’ve used:

- Docker image build time for: https://github.com/JAlcocerT/Py_Trip_Planner/

- Sysbench

- Phoronix

- Netdata

The Orange Pi (8GB) idles at ~ and the RPi 4 (2GB) at ~

The Mini PC - BMAX B4

- Intel N95 (4 cores)

- 16GB RAM 2600MHz


lscpu

To connect via SSH, I needed:

sudo apt update

sudo apt install openssh-server

sudo ufw allow ssh

#ssh username@<local_minipc_server_ip>

1

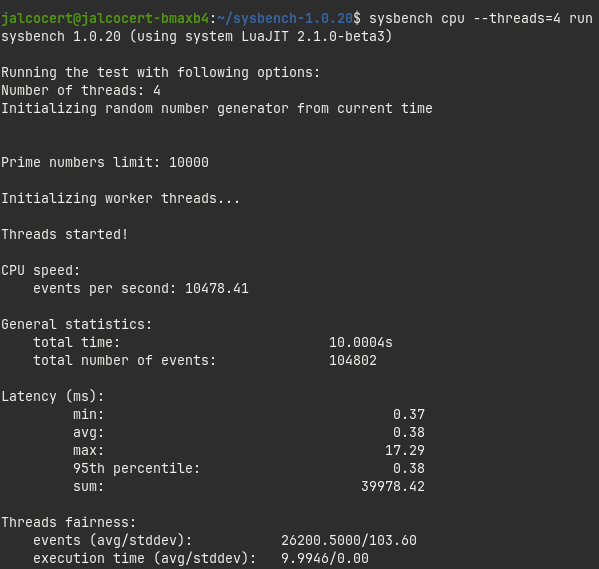

2

sudo apt install sysbench

sysbench cpu --threads=4 run #https://github.com/akopytov/sysbench#general-command-line-options
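To compare boards at a glance, it can help to keep only the "events per second" figure from the sysbench CPU report and repeat the run at several thread counts. This is a minimal sketch assuming sysbench 1.0's plain-text output, where that figure sits on a line like `events per second: 1234.56`; `sysbench_eps` and `bench_threads` are hypothetical helper names, not part of sysbench.

```shell
# Hypothetical helper: read a sysbench CPU report on stdin and print only
# the "events per second" value (assumes sysbench 1.0 plain-text output).
sysbench_eps() {
  awk -F: '/events per second/ { gsub(/[ \t]+/, "", $2); print $2 }'
}

# Hypothetical helper: run the CPU test once per thread count given as arguments.
bench_threads() {
  for t in "$@"; do
    eps=$(sysbench cpu --threads="$t" run | sysbench_eps)
    echo "threads=$t events_per_second=$eps"
  done
}
```

For example, `bench_threads 1 2 4` covers the N95's four cores, and `bench_threads 1 2 4 8` does the same for the 8-core Orange Pi 5.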

The BMAX idles at around ~9W with Lubuntu 22.04 LTS, and the maximum I have observed so far is ~16W. Both figures are with Wi-Fi/Bluetooth enabled and an additional SATA SSD installed.
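For an always-on home server, idle draw matters most, so it can help to turn watts into yearly energy. This is plain arithmetic, kWh per year = W × 24 × 365 / 1000; the helper names are hypothetical, and the 0.25 price per kWh in the usage line is a placeholder, not a real tariff.

```shell
# Yearly energy in kWh for a constant draw of $1 watts: W * 24 h * 365 d / 1000.
annual_kwh() {
  awk -v w="$1" 'BEGIN { printf "%.1f\n", w * 24 * 365 / 1000 }'
}

# Yearly cost for a constant draw of $1 watts at a price of $2 per kWh.
annual_cost() {
  awk -v w="$1" -v p="$2" 'BEGIN { printf "%.2f\n", w * 24 * 365 / 1000 * p }'
}
```

`annual_kwh 9` prints `78.8` for the ~9W idle, `annual_kwh 16` prints `140.2` if the box sat at its observed maximum all year, and `annual_cost 9 0.25` prints `19.71` with the placeholder price.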

git clone https://github.com/JAlcocerT/Py_Trip_Planner/

cd Py_Trip_Planner

docker build -t pytripplanner .
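Build times are only comparable when every machine starts from the same state. A minimal sketch, assuming you want to exclude Docker's layer cache, times the build in whole seconds and passes `--no-cache` so layers left over from an earlier build don't shorten the run; `time_cmd` is a hypothetical helper, and the shell's built-in `time` would work just as well.

```shell
# Hypothetical helper: run a command, discard its output, and print how many
# whole seconds it took. The command's exit status is passed through.
time_cmd() {
  start=$(date +%s)
  "$@" >/dev/null 2>&1
  status=$?
  end=$(date +%s)
  echo $((end - start))
  return "$status"
}
```

Inside the cloned `Py_Trip_Planner` folder, `time_cmd docker build --no-cache -t pytripplanner .` prints the build duration in seconds.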

It took ~45 seconds on the N95, instead of the 3600s and 1700s of the two ARM boards.

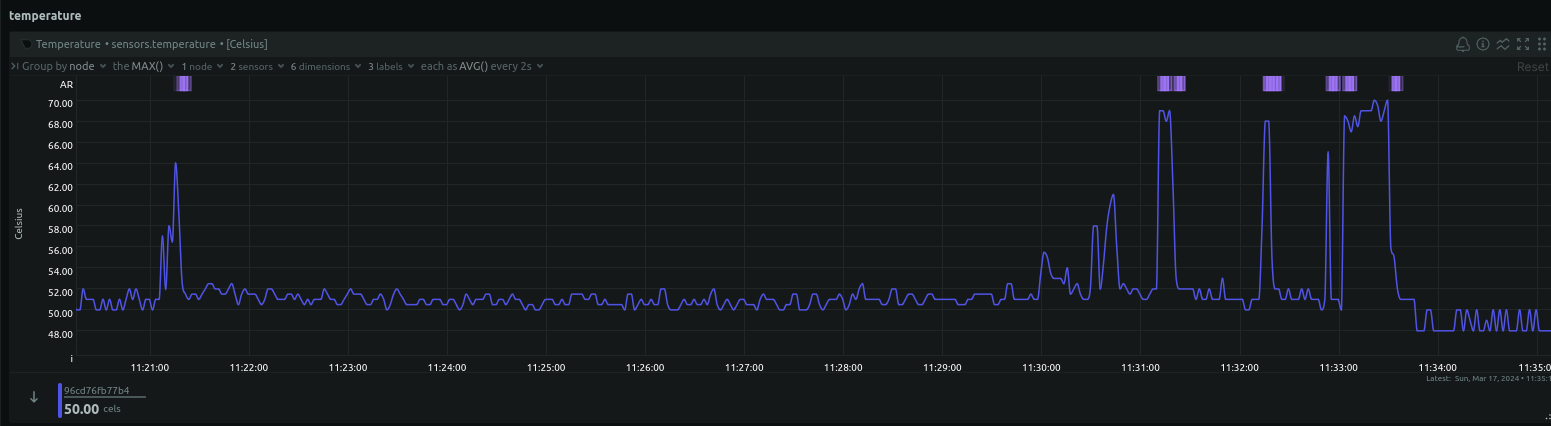

The maximum temperature was 64°C:

BMAX B4 - Temperature during Docker Build

And these are the temperatures recorded by Netdata.

BMAX B4 vs Orange Pi 5

Comparing the Intel N95 (x86) with the Rockchip RK3588S (ARM64).

Temperatures

sudo apt install stress

sudo stress --cpu 8 --timeout 120

- Orange Pi 5: 80°C and 8W peak power (no fan), quickly dropping back to ~45°C after the test

- BMAX B4 (N95, fan enabled and spinning at full speed): 66°C and 15W peak power

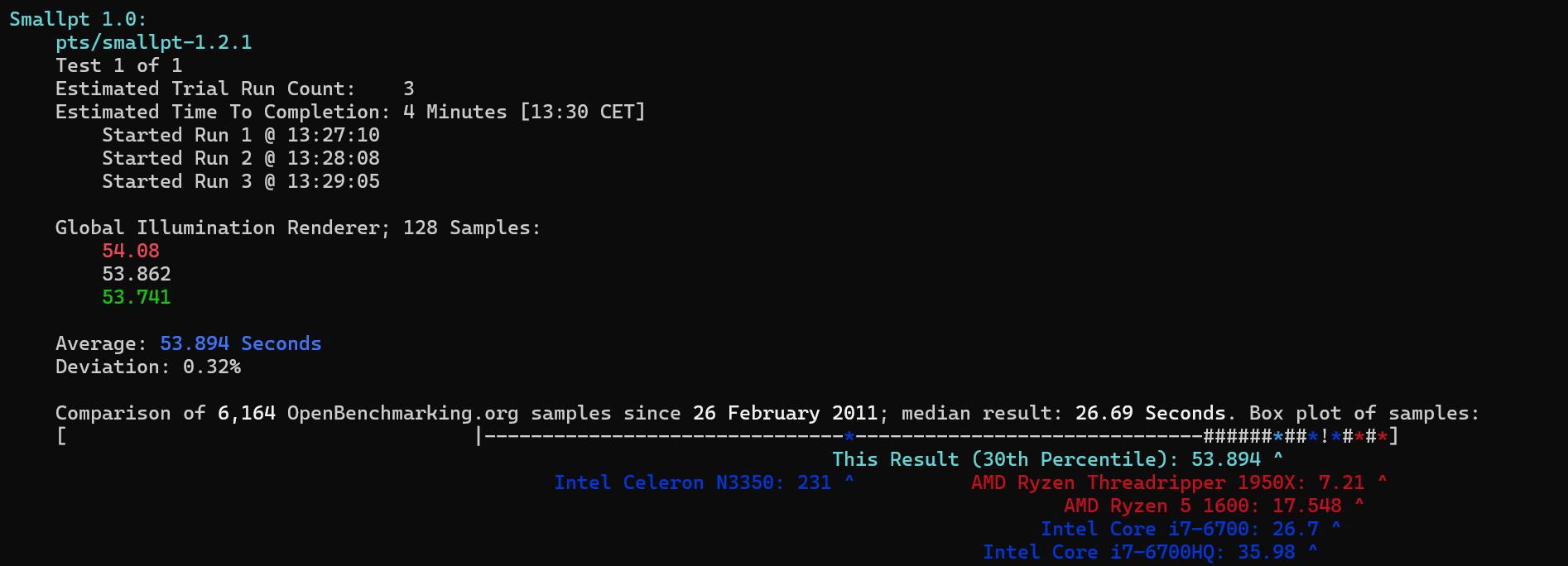
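To log temperatures without a dashboard, the Linux kernel exposes thermal sensors under `/sys/class/thermal/thermal_zone*/`, where `temp` is in millidegrees Celsius and `type` names the sensor. This sketch samples those files once per second while a command such as the `stress` run above is active; `read_temps` and `log_temps_during` are hypothetical helpers, and the zone names will differ between the N95 and the RK3588S.

```shell
# Hypothetical helper: print "<sensor type> <degrees C>" for every thermal zone.
# $1 optionally points it at another directory (defaults to the sysfs path).
read_temps() {
  base=${1:-/sys/class/thermal}
  for zone in "$base"/thermal_zone*; do
    # also skips the unexpanded pattern when no zone exists
    [ -r "$zone/temp" ] || continue
    name=$(cat "$zone/type" 2>/dev/null || echo unknown)
    # temp is in millidegrees Celsius, e.g. 64000 -> 64.0
    awk -v n="$name" '{ printf "%s %.1f\n", n, $1 / 1000 }' "$zone/temp"
  done
}

# Hypothetical helper: run a command in the background and print a timestamp
# plus all temperatures once per second until the command exits.
log_temps_during() {
  "$@" &
  pid=$!
  while kill -0 "$pid" 2>/dev/null; do
    date +%T
    read_temps
    sleep 1
  done
  wait "$pid"
}
```

For example, `log_temps_during stress --cpu 8 --timeout 120`. `stress` does not need root for CPU workers, and running it without `sudo` lets the helper poll the process it started.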

Phoronix

For synthetic benchmarks I used Phoronix:

Intel N95 4 cores with phoronix Open Source Benchmark

The 8-core Orange Pi is a beast, scoring 38s

For reference, I benchmarked bigger CPUs here. Plot twist: both CPUs (especially the Rockchip) have nothing to envy.

FAQ

- Now you can have a DB Less Cloud

Why Switch the Mini PC to Linux?

In this case, the BMAX B4 came with a fully activated Windows 11 (W11), which for the price I would say is a pretty good deal.

I tried it and it handled daily tasks smoothly, but the next day Task Manager was showing quite high CPU load (I had just installed Docker with a couple of containers).

That's why I decided to switch to a lighter Linux distribution: Lubuntu starts at ~800MB of RAM, versus the 2.8GB of W11.

The maximum consumption recorded in this case was ~15W.

How to Disable Wi-Fi/Bluetooth

To lower power consumption further, you can disable Wi-Fi with:

nmcli radio wifi off #on

#nmcli radio help

This improves power efficiency by ~10%, i.e. about -1W 😜

And Bluetooth:


sudo service bluetooth stop #start

#service bluetooth status

Using a Mini PC as a Free Home Cloud

- https://fossengineer.com/selfhosting-filebrowser-docker/

- https://jalcocert.github.io/RPi/posts/selfhosting-with-docker/

How to Use LLMs on a Mini PC

We can run Ollama with Docker and try one of the small models on the CPU.

docker run -d --name ollama -p 11434:11434 -v ollama_data:/root/.ollama ollama/ollama

#docker exec -it ollama ollama --version #I had 0.1.29

docker exec -it ollama /bin/bash

ollama run gemma:2b
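Because the container publishes port 11434, the model can also be queried from the host through Ollama's REST API instead of an interactive shell. A non-streamed reply from `/api/generate` includes `eval_count` (generated tokens) and `eval_duration` (in nanoseconds), which gives a tokens-per-second figure that is easier to compare between machines than "pretty fast". This is a sketch: the helper names are hypothetical, the prompt is pasted into the JSON without escaping, and `jq` in the usage line is an extra package you may need to install.

```shell
# Hypothetical helper: send one prompt ($2) to model $1 and print the raw JSON reply.
# Assumes the Ollama container above is listening on localhost:11434.
# The prompt is inserted as-is, so avoid double quotes inside it.
ollama_generate() {
  curl -s http://localhost:11434/api/generate \
    -d "{\"model\": \"$1\", \"prompt\": \"$2\", \"stream\": false}"
}

# Tokens per second from eval_count ($1) and eval_duration in nanoseconds ($2).
tokens_per_sec() {
  awk -v n="$1" -v ns="$2" 'BEGIN { printf "%.1f\n", n / (ns / 1000000000) }'
}
```

For example, `ollama_generate gemma:2b "Why is the sky blue?" | jq '.eval_count, .eval_duration'` prints the two numbers to pass to `tokens_per_sec`.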

BMAX B4 - Trying LLMs with a MiniPC

The system was using 12 of its 16GB of RAM (I am running a couple of other containers), and the replies from the Gemma 2B model were pretty fast.

For the Python question, whose answer was pretty detailed, the reply took ~30s and the temperature peaked at ~70°C (fan at full speed).

BMAX B4 - MiniPC Performance while LLM inference

How to Benchmark?

- Using Sysbench

- Monitor with Netdata
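Besides its dashboard on port 19999, Netdata serves the same metrics over its REST API, which is handy for saving numbers next to a benchmark run. A minimal sketch, assuming a local Netdata agent on the default port; `system.cpu` is Netdata's standard CPU chart, the helper names are hypothetical, and sensor chart names vary per machine (they can be listed from `/api/v1/charts`).

```shell
# Hypothetical helper: build a Netdata API v1 URL for the last N seconds of a chart.
netdata_url() {
  chart=$1
  seconds=${2:-60}
  echo "http://localhost:19999/api/v1/data?chart=${chart}&after=-${seconds}&format=csv"
}

# Hypothetical helper: save the last $2 seconds of chart $1 to the CSV file $3.
netdata_save() {
  curl -s "$(netdata_url "$1" "$2")" -o "$3"
}
```

For example, `netdata_save system.cpu 120 cpu_during_stress.csv` right after a two-minute stress test keeps the CPU usage it recorded.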

With Phoronix Test Suite

We can get an idea of how the hardware compares, and contribute results to OpenBenchmarking.org, by using the F/OSS Phoronix Test Suite:

wget https://github.com/phoronix-test-suite/phoronix-test-suite/releases/download/v10.8.4/phoronix-test-suite_10.8.4_all.deb

sudo dpkg -i phoronix-test-suite_10.8.4_all.deb

sudo apt-get install -f

Then, just use:


phoronix-test-suite benchmark smallpt

#phoronix-test-suite system-info